Wikipedia research: How studies shape the world's largest encyclopedia

When you look up a fact on Wikipedia, the free, collaborative encyclopedia built by volunteers and shaped by research-driven policies, you are reading the product of a process that does more than collect information. It tests claims, debates them, and rewrites them based on real-world evidence. This isn't guesswork. Many edits, policy changes, and community decisions draw on Wikipedia research: systematic studies of how knowledge is created, shared, and challenged online. These aren't only academic papers buried in journals. Many are live experiments happening in real time, as editors fight misinformation, fix bias, and push back against AI systems that take their work without permission.

Behind every article is a chain of evidence. Reliable sources (books, peer-reviewed journals, and trusted news outlets that meet Wikipedia's strict criteria) are the foundation. But what counts as reliable? Wikipedia's policies favor secondary sources, such as analyses and summaries, over raw data or firsthand accounts. That's why journalists use Wikipedia not as a source, but as a map to the real ones. At the same time, AI-generated content is turning into one of the biggest threats to Wikipedia's integrity, which puts AI ethics, the growing debate over whether machines should edit human knowledge, at the center of the conversation. Studies have found that AI encyclopedias often cite sources that don't actually support their claims. They miss context, erase minority views, and disregard copyright. Wikipedia's own research teams, meanwhile, track how often articles are vandalized, who edits them, and why some topics, like Indigenous history or women's achievements, still lag behind.

It's not just about fixing errors. It's about fixing power. Research on encyclopedia bias, meaning the systemic gaps in representation that shape what's considered "common knowledge," has led to task forces that now focus on adding missing voices. The same concern drives Wikidata's goal of making facts about a person line up the same way whether the article is in Swahili, Arabic, or Quechua. And it's why the Wikimedia Foundation's Wikimedia Enterprise, a paid service that sells Wikipedia data to corporations, has sparked heated debates about who profits from free knowledge. These aren't side issues. They're core to whether Wikipedia stays trustworthy.

What you'll find below isn't just a list of articles. It's a window into how real research, done by volunteers, journalists, and data scientists, keeps Wikipedia alive. From how copy editors clear a backlog of 12,000 articles to how AI is quietly rewriting history without permission, every post here answers one question: who gets to decide what's true, and how do we make sure they're right?

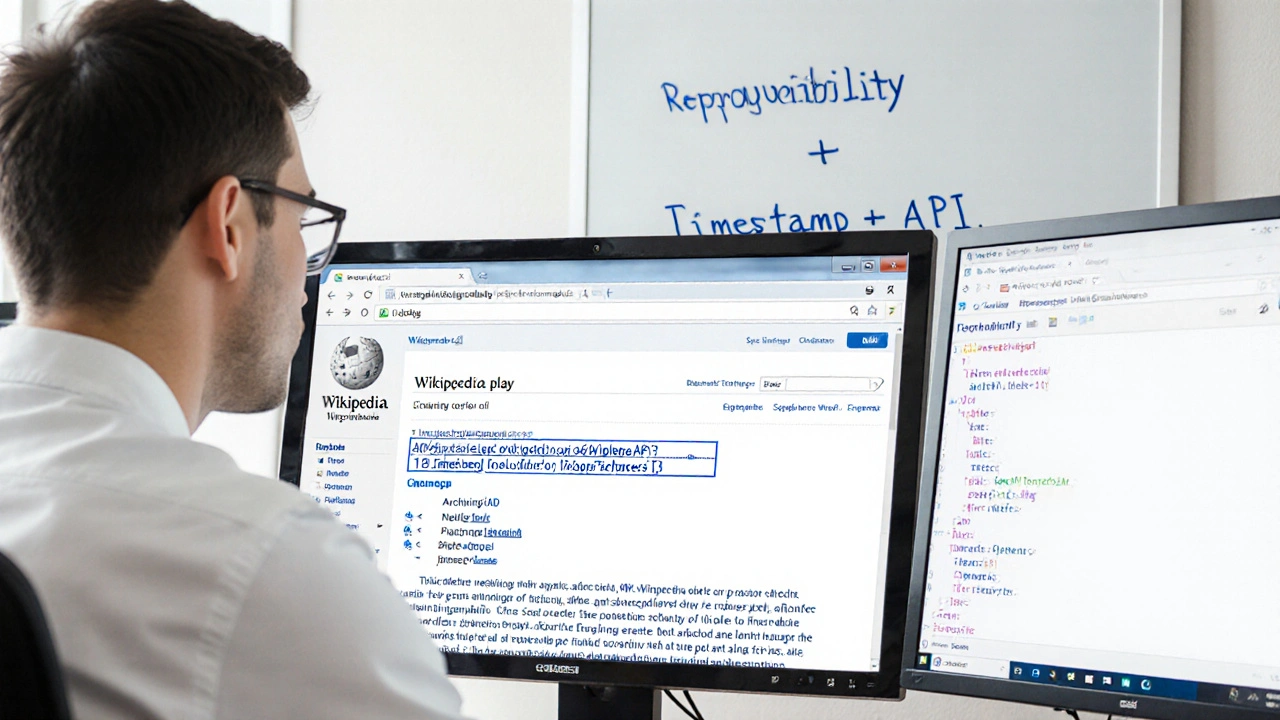

Reproducibility in Wikipedia Research: How to Share Code and Data Effectively

Wikipedia research often fails reproducibility because code and data aren't shared. Learn how to properly document, archive, and share your analysis to make your work verifiable and trustworthy.

Data Ethics and Privacy Considerations in Wikipedia Studies

Wikipedia research often uses public edit data without consent, risking editor privacy and safety. Ethical studies must prioritize anonymity, consent, and community trust over convenience.
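As a minimal sketch of the anonymity point, assuming a study that publishes edit-level data, usernames can be replaced with keyed pseudonyms before release. This Python example uses HMAC-SHA256 from the standard library; the helper name `pseudonymize`, the `editor_` prefix, and the key handling are hypothetical choices for illustration. A keyed hash matters here because usernames are guessable: anyone could hash a list of known usernames and match them against a plain, unkeyed hash.

```python
import hashlib
import hmac
import secrets


def pseudonymize(username: str, key: bytes) -> str:
    """Hypothetical helper: map a username to a stable, keyed pseudonym."""
    # HMAC-SHA256 with a secret key: the same username always gets the same
    # pseudonym under one key, but outsiders without the key cannot
    # recompute or reverse the mapping.
    digest = hmac.new(key, username.encode("utf-8"), hashlib.sha256).hexdigest()
    return "editor_" + digest[:16]  # truncated to 64 bits for readability


if __name__ == "__main__":
    # Assumption: the key is generated once per study, kept private,
    # and never published alongside the released data.
    key = secrets.token_bytes(32)
    edits = [("ExampleUser", "Article A"), ("AnotherUser", "Article B"),
             ("ExampleUser", "Article C")]
    for user, page in edits:
        print(pseudonymize(user, key), page)
```

Pseudonyms alone are not full anonymity: edit timestamps and article choices can still re-identify people, so released data may also need coarsening or aggregation.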

Desk Research with Wikipedia: A Practical Guide for Students and Researchers

Learn how to use Wikipedia effectively for academic desk research: find credible sources, avoid common mistakes, and build a solid foundation for papers and projects without wasting time.

Wikipedia Editor Behavior Studies: What Really Happens Behind the Scenes

Wikipedia editor behavior studies reveal how real people shape the world's largest encyclopedia through collaboration, conflict, and quiet dedication. Learn what drives edits, why some articles thrive, and how anyone can contribute.

Reproducibility in Wikipedia Research: How to Avoid Common Mistakes and Follow Best Practices

Learn how to make Wikipedia-based research reproducible by saving page versions, using revision IDs, and avoiding common pitfalls that invalidate academic studies. Essential for students and researchers.
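One way to apply the revision-ID advice is sketched below in Python, under two assumptions: the study targets English Wikipedia, and the helper names `permalink` and `revision_api_url` are hypothetical. Given a revision ID, it builds a permanent link a reader can open and a MediaWiki Action API query that returns that exact revision's wikitext. It only constructs URLs and makes no network requests.

```python
from urllib.parse import urlencode

# Assumption: the study uses English Wikipedia; change BASE for other wikis.
BASE = "https://en.wikipedia.org/w"


def permalink(title: str, revid: int) -> str:
    """Hypothetical helper: permanent link to one fixed revision of a page."""
    # index.php with oldid= shows that revision even after later edits.
    return f"{BASE}/index.php?" + urlencode({"title": title, "oldid": revid})


def revision_api_url(revid: int) -> str:
    """Hypothetical helper: Action API query for one revision's wikitext."""
    params = {
        "action": "query",
        "prop": "revisions",
        "revids": revid,
        "rvprop": "ids|timestamp|content",
        "rvslots": "main",
        "format": "json",
        "formatversion": 2,
    }
    return f"{BASE}/api.php?" + urlencode(params)


if __name__ == "__main__":
    # Illustrative revision ID only; record the real IDs your study used.
    print(permalink("Climate change", 123456789))
    print(revision_api_url(123456789))
```

Storing the revision ID next to each data point you extract lets another researcher fetch exactly the text you analyzed, rather than whatever the page says today.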

Peer-Reviewed Journals Specializing in Wikipedia and Open Knowledge

Peer-reviewed journals are now publishing rigorous research on Wikipedia and open knowledge systems, treating them as legitimate subjects of academic study. These journals promote transparency, open access, and community-driven scholarship.

Future Directions in Wikipedia Research: Open Questions and Opportunities

Wikipedia research is shifting from traffic metrics to equity. Learn the open questions about bias, AI, offline access, and how to make global knowledge truly inclusive.

Academic Research About Wikipedia: A Survey of Major Studies

Academic research on Wikipedia reveals surprising truths about its reliability, editor demographics, and role in education. Studies show it's often as accurate as traditional encyclopedias, but faces bias and sustainability challenges.