When you ask an AI answer engine a question like "What caused the fall of the Roman Empire?", it doesn’t send you to Wikipedia. It gives you a quick summary (three sentences, maybe four) right on the screen. No clicking. No scrolling. Just the answer. It feels efficient. But is it better?

What AI Answer Engines Do to Wikipedia

AI answer engines like Perplexity, You.com, and even Google’s AI Overviews now pull from Wikipedia as one of their main sources. They read the full article, digest it, and spit out a condensed version. This isn’t just copying. These systems use models trained on billions of sentences to rewrite facts in plain language, often adding context from other trusted sources like academic papers or government reports.

But here’s the catch: Wikipedia isn’t just a pile of facts. It’s a living document. Every edit is tracked. Every claim has citations. Every controversy is documented in the talk pages. When an AI summarizes it, all that context disappears. You get the conclusion, but not the debate. You get the date, but not the disputed theories.

Take the claim that "Julius Caesar was assassinated in 44 BCE." That’s true. But what if you want to know why some historians argue the assassination was less about tyranny and more about economic inequality? The AI might leave that out. It’s optimized for speed, not depth.

Why Linking Still Matters

Linking to the original Wikipedia article isn’t outdated. It’s a safety net. When you link, you give the reader control. They can decide how deep they want to go. They can check the references. They can read the edit history. They can see how the article changed over time.

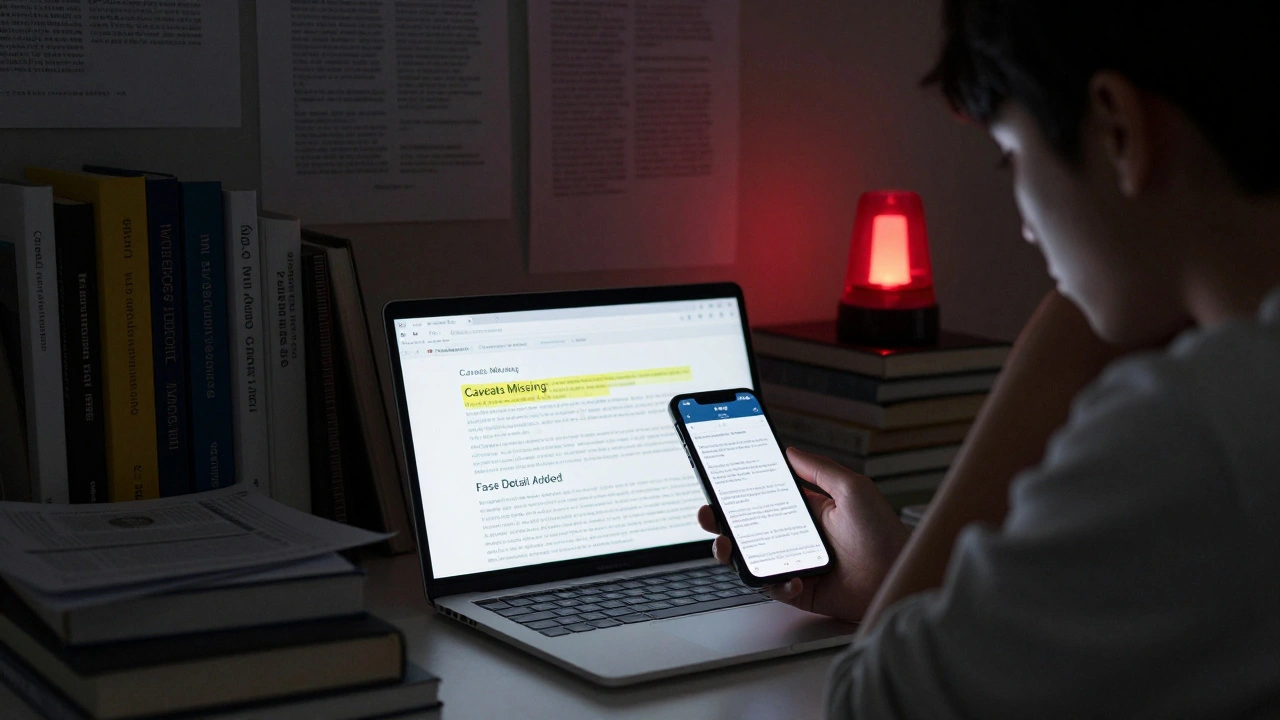

In 2023, researchers at Stanford analyzed 500 AI-generated summaries of Wikipedia pages on medical topics. They found that 17% of the summaries omitted critical caveats, such as "evidence is limited" or "this is still debated." In 8% of cases, the AI added false details that sounded plausible but weren’t in the original article. These weren’t mistakes. They were hallucinations: fabrications born from pattern-matching, not understanding.

Linking doesn’t just point to a source. It points to accountability. If a Wikipedia page gets updated to reflect new research, the link takes the reader to the updated version. The AI summary? It’s frozen in time. Unless the system reprocesses it, which most don’t do automatically, it stays out of date.
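The staleness problem comes down to comparing two timestamps: when the summary was generated and when the page was last edited. Here is a minimal sketch of that check, assuming both values are available as ISO 8601 strings. The function names `parse_timestamp` and `is_summary_stale` are hypothetical and not part of any AI engine's API.

```python
from datetime import datetime, timezone


def parse_timestamp(value: str) -> datetime:
    """Parse an ISO 8601 timestamp such as '2025-03-01T09:30:00Z' as timezone-aware."""
    # Older Python versions reject a trailing 'Z', so normalise it first.
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        # Assumption: timestamps without an offset are treated as UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed


def is_summary_stale(summary_generated_at: str, page_last_edited_at: str) -> bool:
    """Return True if the page was edited after the summary was generated."""
    return parse_timestamp(page_last_edited_at) > parse_timestamp(summary_generated_at)
```

A stale result does not prove the summary is wrong, only that the page has changed since and is worth rereading.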

The Hidden Cost of Convenience

AI summaries feel fast. But they’re making us lazy. A 2025 survey of 1,200 college students showed that 68% used AI summaries as their only source for research assignments. When asked to find the original source, only 29% could. They didn’t know how to search for it. They didn’t even know what to look for.

This isn’t just about students. It’s about how we think. When we stop clicking, we stop questioning. We stop seeing the structure behind knowledge. Wikipedia’s layout (headings, subheadings, citations, references) isn’t accidental. It’s designed to teach you how to evaluate information. An AI summary strips that away.

Think of it like eating pre-chewed food. It’s easy. But you’re not learning how to chew.

When AI Summaries Work Well

That doesn’t mean AI summaries are useless. They’re great for quick checks. Need to know the population of Tokyo? The AI gives it to you instantly. Need to understand what quantum entanglement means in plain terms? A good summary can help.

For factual, uncontroversial topics (dates, names, basic definitions), AI summaries are usually reliable. They’re faster than typing "Wikipedia" into your browser. And if you’re on a phone, walking to a meeting, or just need a quick fact, that’s fine.

But here’s the rule: if the topic has any history, debate, or complexity, skip the summary. Go to the source.

What’s at Stake

The real danger isn’t that AI gets facts wrong. It’s that we stop caring about where facts come from. When every answer is served on a silver platter, we forget how to dig. We forget how to verify. We forget that knowledge isn’t a product; it’s a process.

Wikipedia survives because millions of people edit it, argue over it, and cite it. AI answer engines survive because they make us feel smart without making us think.

If we keep choosing summaries over links, we’re not just changing how we find information. We’re changing how we understand truth.

The Middle Ground

You don’t have to choose between AI summaries and links. You can use both.

Use the AI summary to get the gist. Then ask: "Where did this come from?" Most AI engines now show their sources. Click them. Read the Wikipedia page. Check the references. If the summary left out something important, you’ll notice.

Some tools, like Perplexity, now include a "Show source" button right under the summary. That’s a good start. But it’s not enough. We need systems that don’t just show sources but *explain* why they chose them.

Imagine an AI that says: "I summarized this from Wikipedia because it’s the most comprehensive source on this topic. Here are three key citations it used. Two of them are peer-reviewed journals. One is a government report. There’s a disagreement in the talk page about this point. Here’s what it says."

That’s not science fiction. It’s possible. And it’s what we should demand.

What You Can Do Today

Here’s how to stay sharp in an age of AI summaries:

- Always check the source, even if the summary feels right. If it came from Wikipedia, open the page.

- Look at the references, not just the article. Click the numbers. See what the original authors said.

- Check the edit history. Open the "View history" tab at the top of the Wikipedia page. See when it was last updated. Was it changed after a major event?

- Compare summaries. Ask the same question on two different AI engines. If they disagree, you know something’s missing.

- Teach others. Show a friend how to read a Wikipedia citation. It’s a skill that’s disappearing.
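The edit-history check in the list above can also be done programmatically. The sketch below assumes the public MediaWiki Action API on English Wikipedia (`action=query` with `prop=revisions`). The helper names `build_history_url`, `extract_revisions`, and `fetch_recent_revisions` are hypothetical, and the User-Agent string is a placeholder you would replace with your own.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Assumption: English Wikipedia's MediaWiki Action API endpoint.
API_URL = "https://en.wikipedia.org/w/api.php"


def build_history_url(title: str, limit: int = 5) -> str:
    """Build a query URL for the most recent revisions of one page."""
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "timestamp|user|comment",
        "rvlimit": limit,
        "format": "json",
        "formatversion": 2,
    }
    return f"{API_URL}?{urlencode(params)}"


def extract_revisions(payload: dict) -> list:
    """Pull timestamp, user, and comment out of a formatversion=2 response."""
    pages = payload.get("query", {}).get("pages", [])
    if not pages:
        return []
    return [
        {
            "timestamp": rev.get("timestamp"),
            "user": rev.get("user"),
            "comment": rev.get("comment", ""),
        }
        for rev in pages[0].get("revisions", [])
    ]


def fetch_recent_revisions(title: str, limit: int = 5) -> list:
    """Fetch and parse recent revisions (performs a network request)."""
    request = urllib.request.Request(
        build_history_url(title, limit),
        # Placeholder User-Agent; identify your own script here.
        headers={"User-Agent": "source-check-example/0.1"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return extract_revisions(json.load(response))
```

Calling `fetch_recent_revisions("Julius Caesar")` would return the latest few edits, newest first, which is enough to see whether the page changed after the summary you read was produced.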

Knowledge isn’t something you consume. It’s something you build. And you can’t build it if you never look under the hood.

Are AI summaries of Wikipedia more accurate than just reading the article?

No, not always. AI summaries can miss context, remove caveats, or even invent details. Wikipedia articles include citations, edit histories, and debates that AI often ignores. Reading the original gives you full transparency. AI gives you convenience at the cost of depth.

Can AI answer engines replace Wikipedia?

No. Wikipedia is a collaborative, editable, citable source with a transparent process. AI engines are black boxes that pull from it but don’t contribute back. They can’t fix errors, update with new research, or explain why a fact is disputed. Wikipedia survives because people care enough to edit it. AI doesn’t care; it just processes.

Why do AI engines summarize Wikipedia instead of linking to it?

Because it keeps you on their platform. If you click a link, you leave. If they give you the answer, you stay. It’s a business model, not a knowledge model. They want your attention, not your curiosity.

Is it okay to use AI summaries for schoolwork?

Only if you use them as a starting point. Never submit an AI summary as your own work. Always go back to the original source. Teachers and professors can often spot AI summaries: they look too clean, too generic, and lack citations. Using them as a shortcut risks plagiarism and poor learning.

Do AI engines update their summaries when Wikipedia changes?

Rarely. Most AI systems don’t re-scan Wikipedia pages automatically. A summary from six months ago might still be showing outdated info, even if the Wikipedia page was updated yesterday. Linking to Wikipedia ensures you always see the latest version.

What’s the best way to use AI and Wikipedia together?

Use the AI to get a quick overview. Then, click the source link and read the full Wikipedia article. Check the references. Look at the edit history. If the AI left something out, you’ll find it there. This gives you speed and depth, not just one or the other.