Wikipedia doesn’t break news. It reports it after the fact, and only once multiple trustworthy outlets have confirmed it. If you’ve ever seen a breaking news event appear on Wikipedia within minutes of happening, you might think it’s magically fast. But that speed comes from strict rules, not guesswork. Wikipedia’s policy on breaking news sourcing isn’t about being first. It’s about being right.

Why Wikipedia Won’t Publish Breaking News Right Away

When a major event happens, such as a plane crash, a political resignation, or a natural disaster, social media explodes. Twitter threads, livestreams, and news alerts flood the internet. But Wikipedia editors don’t rush to add it. Why? Because misinformation spreads faster than facts.

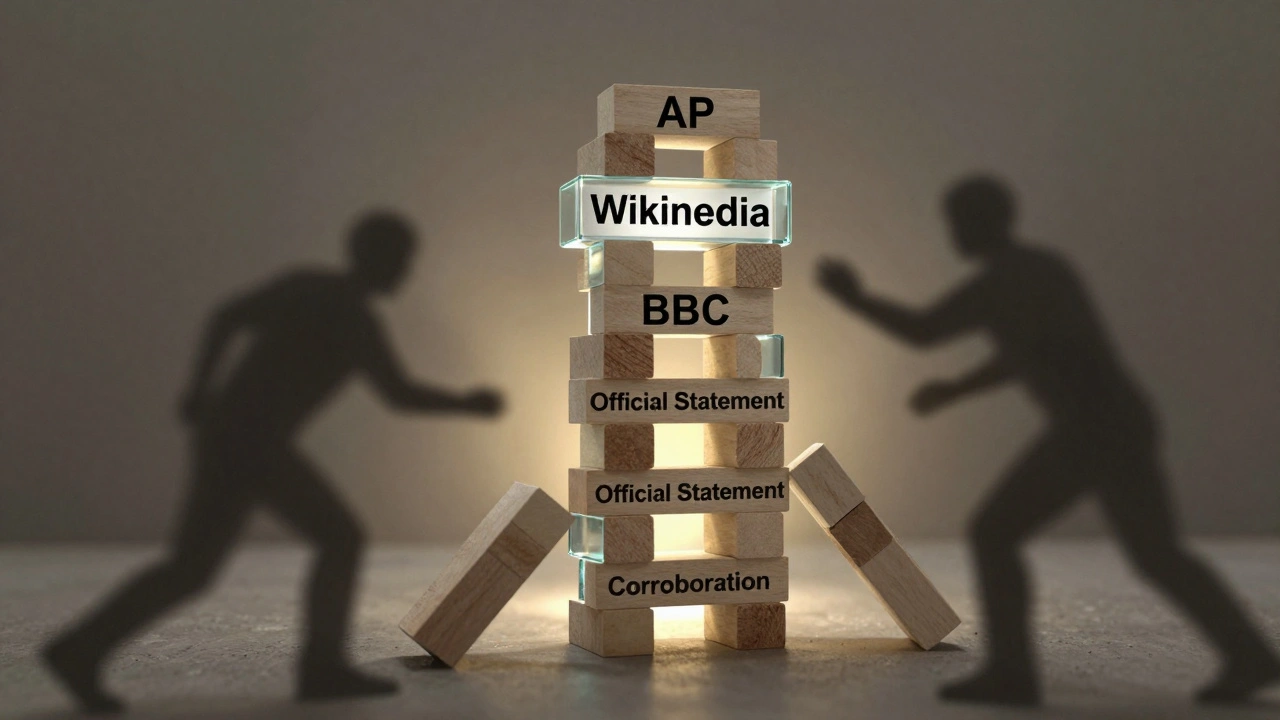

Wikipedia’s core rule is to cite reliable sources. That means established media organizations with editorial oversight, not blogs, anonymous Twitter accounts, or uncorroborated press releases. A tweet from a user claiming a building collapsed doesn’t count. A report from the Associated Press, BBC, or Reuters does.

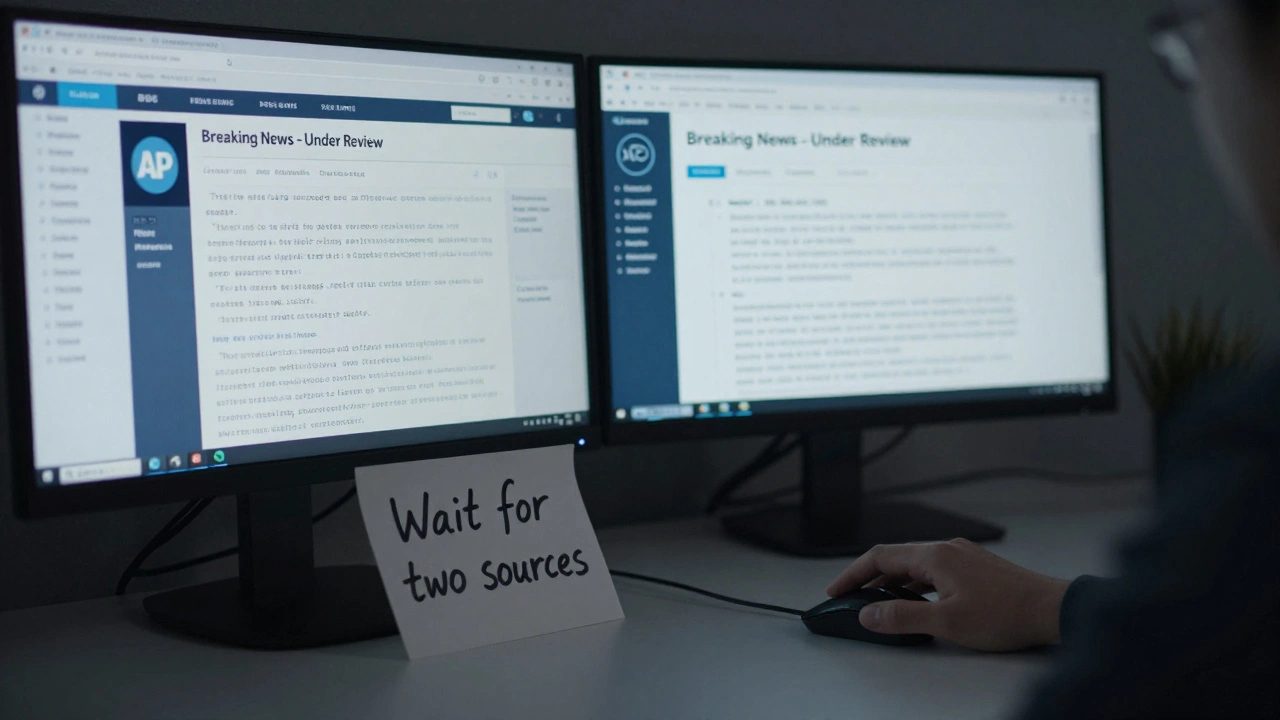

Editors wait until at least two independent, reputable sources confirm the same basic facts. That’s not bureaucracy; it’s damage control. In 2020, a false report of a U.S. senator’s death spread across social media. Wikipedia editors held off for over an hour until the Senate’s official site and major news outlets confirmed it was a hoax. That delay kept a false claim out of the article.

What Counts as a Reliable Source for Breaking News

Not all news outlets are created equal. Wikipedia has a clear hierarchy for what counts as reliable during breaking events:

- High-quality news organizations: AP, Reuters, Bloomberg, CNN, BBC, The New York Times, The Guardian, Le Monde, and other outlets with professional journalism standards.

- Official statements: Government agencies, police departments, or public institutions that issue verified press releases or hold live briefings.

- Academic or institutional reports: For events like scientific discoveries or public health emergencies, peer-reviewed journals or university announcements qualify.

What doesn’t qualify?

- Unverified social media posts

- Anonymous sources

- Corporate press releases without independent corroboration

- Opinion blogs or commentary sites

- Wikipedia itself or other wikis

Even major outlets can make mistakes. If two outlets report conflicting details, Wikipedia editors don’t pick a side. They note the discrepancy and wait for consensus. For example, during the 2023 earthquake in Turkey, early reports from local TV stations gave wildly different death tolls. Wikipedia kept the article blank until three international agencies (AP, BBC, and Al Jazeera) reported similar figures.
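The two lists above can be read as a simple lookup. Here is a minimal sketch of that idea, assuming a hypothetical `SourceKind` enum and `is_usable` helper; neither name is part of any Wikipedia tool, and real editors judge each source case by case rather than by category alone.

```python
from enum import Enum


class SourceKind(Enum):
    """Hypothetical labels mirroring the categories listed in this article."""
    NEWS_ORGANIZATION = "high-quality news organization"
    OFFICIAL_STATEMENT = "official statement"
    ACADEMIC_REPORT = "academic or institutional report"
    SOCIAL_MEDIA_POST = "unverified social media post"
    ANONYMOUS_SOURCE = "anonymous source"
    CORPORATE_PRESS_RELEASE = "corporate press release"
    OPINION_BLOG = "opinion blog or commentary site"
    WIKI = "Wikipedia or another wiki"


# The three categories the article lists as qualifying.
ACCEPTED_KINDS = frozenset({
    SourceKind.NEWS_ORGANIZATION,
    SourceKind.OFFICIAL_STATEMENT,
    SourceKind.ACADEMIC_REPORT,
})


def is_usable(kind: SourceKind, independently_corroborated: bool = False) -> bool:
    """Return True if a source of this kind could support a breaking-news edit."""
    if kind in ACCEPTED_KINDS:
        return True
    # A corporate press release is excluded only when nothing independent backs it up.
    if kind is SourceKind.CORPORATE_PRESS_RELEASE:
        return independently_corroborated
    return False
```

For instance, `is_usable(SourceKind.SOCIAL_MEDIA_POST)` is `False`, while `is_usable(SourceKind.CORPORATE_PRESS_RELEASE, independently_corroborated=True)` is `True`.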

How Wikipedia Handles Unconfirmed Reports

Just because something isn’t confirmed doesn’t mean it’s ignored. Editors use talk pages and draft articles to track developing stories. If a major outlet reports a developing situation, editors may create a temporary draft with a note like: “This article is a work in progress. Sources are being verified.”

These drafts sit outside the main encyclopedia and are kept out of search engine results until they meet the reliability threshold. That’s how Wikipedia avoids becoming a rumor mill while still staying responsive. The draft system lets editors collaborate without misleading readers.

Some editors even set up automated alerts for breaking news from trusted sources. When Reuters publishes a headline about a major fire, the alert triggers a checklist: “Is there a second source? Is it official? Is it detailed enough?” Only then does the article get updated.
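To make that checklist concrete, here is a rough sketch of how the three questions might be encoded. Everything in it is an assumption made for illustration: the `Report` fields, the idea of judging independence by owner, the `MIN_WORDS` threshold, and the choice to require all three answers to be yes. It is not a description of any real editor’s tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    """Hypothetical record of one published report about an event."""
    publisher: str     # e.g. "Reuters"
    owner: str         # parent organization, used here as a proxy for independence
    is_official: bool  # statement from a government agency, police department, etc.
    word_count: int    # crude stand-in for "detailed enough"


MIN_INDEPENDENT_SOURCES = 2  # the two-source rule described in this article
MIN_WORDS = 150              # assumed threshold, chosen only for illustration


def checklist(reports: list[Report]) -> dict[str, bool]:
    """Answer the three checklist questions for a set of reports."""
    independent_owners = {r.owner for r in reports}
    return {
        "second_source": len(independent_owners) >= MIN_INDEPENDENT_SOURCES,
        "official": any(r.is_official for r in reports),
        "detailed_enough": any(r.word_count >= MIN_WORDS for r in reports),
    }


def ready_to_update(reports: list[Report]) -> bool:
    """Assumption: the article is updated only when every answer is yes."""
    return all(checklist(reports).values())
```

A single Reuters headline fails the check on its own. Adding a detailed statement from a fire department, which has a different owner and counts as official, would let it pass.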

Real Examples of Breaking News on Wikipedia

In January 2024, a fire broke out at a major data center in Virginia. Within 20 minutes, local news sites reported it. But Wikipedia didn’t update until 90 minutes later, after the fire department confirmed the location, the number of responders, and the status of evacuations via their official Twitter account and a live press conference. The article included direct quotes from the fire chief and linked to the official press release.

Compare that to a 2023 incident where a celebrity’s death was falsely reported by a tabloid blog. The rumor spread across Reddit and TikTok. Wikipedia editors quickly blocked the edit, flagged it as a hoax, and locked the page. Within hours, the blog retracted the story. Wikipedia never published it.

Another example: the 2025 U.S. presidential inauguration. Wikipedia editors had a draft ready for months, but the article remained empty until the official White House schedule was published and confirmed by three major news networks. Only then did the article update with exact times, attendees, and ceremonial details.

The Role of Editors and Community Oversight

Wikipedia’s breaking news policy isn’t enforced by bots. It’s enforced by volunteers: editors who spend hours monitoring trusted news feeds, checking sources, and debating edits on talk pages. Many of them are former journalists, librarians, or researchers.

There’s no central authority deciding what gets published. Instead, the community follows a set of published rules: Wikipedia:Verifiability and Wikipedia:No original research, which are core content policies, and Wikipedia:Reliable sources, the guideline that explains how to apply them. These aren’t suggestions. Editors are expected to follow them.

When a controversial edit is made, it is often reviewed within minutes. If it violates sourcing rules, it’s reverted. If the editor disputes the reversion, the disagreement moves to the talk page and, if needed, to Wikipedia’s dispute resolution process. This system keeps Wikipedia accurate, even when the world is in chaos.

What Happens When Sources Conflict or Change

Breaking news isn’t static. Facts change. A missing person is found. A casualty count is revised. A suspect is cleared. Wikipedia updates in real time, but only with verified changes.

For example, during the 2024 London bridge incident, initial reports said three people were injured. Later, emergency services confirmed one person was treated and released. Wikipedia editors updated the article within 15 minutes of the official statement, citing the London Fire Brigade’s press release.

But if a source retracts a claim, Wikipedia doesn’t just delete it. It adds a note: “Earlier reports stated X, but this was later corrected by [source].” Transparency matters more than neatness.
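That “correct, don’t delete” habit can be sketched as a small record that keeps its own history. The `Claim` class and its `correct` method below are hypothetical names invented for this example. Wikipedia articles are edited as wikitext with a revision history, not through a structure like this.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    """Hypothetical model of one statement in an article and where it came from."""
    text: str
    source: str
    notes: list[str] = field(default_factory=list)

    def correct(self, new_text: str, new_source: str) -> None:
        """Replace the claim but leave a visible note about what changed."""
        self.notes.append(
            f"Earlier reports stated {self.text}, "
            f"but this was later corrected by {new_source}."
        )
        self.text = new_text
        self.source = new_source


# Usage, following the London bridge example above.
claim = Claim("three people were injured", "initial news reports")
claim.correct("one person was treated and released", "the London Fire Brigade")
print(claim.notes[0])
```

The old figure stays in the note, so a reader can see both what was first reported and who corrected it.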

Why This Matters for Readers

People turn to Wikipedia during crises because it’s fast, free, and easy to access. But if it were to publish unverified rumors, it would do more harm than good. Imagine someone searching for details about a school shooting and finding a false name listed as the shooter. That’s not just inaccurate. It’s dangerous.

Wikipedia’s delay isn’t a flaw. It’s a feature. It’s the difference between a news alert and a historical record. The goal isn’t to be the first to report. It’s to be the last to be wrong.

When you read a Wikipedia article about a breaking event, you’re not seeing raw data. You’re seeing a curated, verified, and cross-checked summary. That’s why millions of people, from students to first responders, trust it during emergencies.

How You Can Help

If you’re reading this and you’re tempted to edit a breaking news article, pause. Ask yourself: “Do I have two independent, reliable sources confirming this?” If not, don’t edit. Instead, check the article’s talk page. If the event isn’t covered yet, you can suggest sources there. Experienced editors monitor those pages closely.

Don’t report rumors. Don’t post links to unverified social media. Don’t assume your local news blog counts as a reliable source. If you’re unsure, wait. The system works best when people follow the rules.

Wikipedia’s strength isn’t its speed. It’s its accuracy. And that’s built on patience, discipline, and a refusal to publish anything until it’s been proven.

Can I edit a Wikipedia article during a breaking news event?

You can, but only if you have two or more independent, reliable sources confirming the information. Editing based on social media, rumors, or single reports will be reverted. If you’re unsure, use the article’s talk page to suggest sources instead.

Why doesn’t Wikipedia use press releases as sources?

Press releases aren’t reliable on their own because they’re written by the subject of the news, not independent journalists. They can be misleading, exaggerated, or incomplete. Wikipedia requires independent verification, usually from a news outlet that has reviewed and confirmed the release’s claims.

What if a major news outlet reports something false?

If a reputable outlet makes an error, Wikipedia will update the article once the error is corrected by the same outlet or another reliable source. Editors track retractions and corrections. The article will reflect the final, verified version, not the initial mistake.

Are Wikipedia’s breaking news rules the same worldwide?

Broadly, but not exactly. Each language edition is run by its own community and sets its own policies, though verifiability through reliable sources is a shared principle. Local language editions may also rely on regionally trusted sources, for example Le Monde for French Wikipedia or Yomiuri Shimbun for Japanese Wikipedia. The underlying standard is the same: independent, reputable, and verified.

How long does it usually take for breaking news to appear on Wikipedia?

It varies. For major events with clear, immediate confirmation from multiple trusted outlets, updates can appear in under 30 minutes. For complex or disputed events, it can take hours or even days. The goal isn’t speed; it’s accuracy. If sources are conflicting or unclear, Wikipedia waits.