Wikipedia doesn’t run ads. It doesn’t rely on a PR team pushing updates to mainstream news outlets. Yet every year, major changes (new policies, platform upgrades, even high-profile disputes) spread across the internet faster than most corporate press releases. How? The answer isn’t in traditional media. It’s in the noise of social platforms.

Wikipedia’s announcements aren’t made for the headlines

When Wikipedia makes an official announcement, such as the rollout of a new mobile interface, a ban on a major editor, or the discovery of systemic bias in article coverage, it’s posted on Meta-Wiki, the public coordination wiki for Wikimedia projects. These aren’t press releases. They’re technical notes, community votes, or policy drafts written in plain, dry language. No emojis. No calls to action. No sensationalism.

But here’s what happens next: someone reads it. Maybe a longtime editor. Maybe a journalist who follows digital culture. Maybe a student researching misinformation. They see something important, share it on Twitter, Reddit, or Mastodon, and suddenly it’s trending.

That’s how the 2023 decision to restrict editing from Russian state IP addresses went viral. The official notice was buried in a 12-page policy update. But a single Reddit thread in r/Wikipedia, posted by a user who’d been editing for 15 years, got 47,000 upvotes. Within 12 hours, The Guardian, the BBC, and Wired had picked it up. They weren’t sent a press kit; thousands of users were already talking about it.

Why social platforms work better than press releases

Traditional media doesn’t cover Wikipedia unless there’s scandal, controversy, or a celebrity involved. But social platforms don’t need a hook. They reward relevance, not prestige.

Twitter (now X) became the default hub for Wikipedia community updates because it’s where editors, researchers, and fact-checkers live. When the Wikimedia Foundation announced in late 2024 that it would begin flagging AI-generated content in articles, the first wave of discussion didn’t happen in a press room. It happened in a thread by user @WikiWatcher, who broke down the technical implications in plain terms. That thread was retweeted over 22,000 times. Journalists didn’t call the Foundation; they called the user.

Reddit’s r/Wikipedia and r/technology subreddits act as early-warning systems. A post about a new anti-vandalism tool in 2025 got 18,000 comments. Many were skeptical. Some were angry. But the discussion forced the Foundation to clarify its approach publicly-something it wouldn’t have done without the pressure.

Unlike traditional media, social platforms don’t need to be convinced. They don’t need to be pitched. They just need to be triggered. And Wikipedia’s community is full of people who know exactly what triggers attention: transparency, conflict, and the sense that something important is being hidden.

The amplification chain: from wiki to world

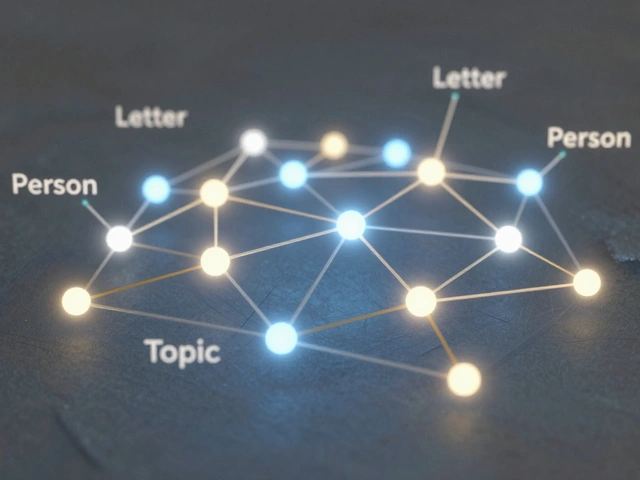

There’s a clear pattern in how Wikipedia announcements go viral:

- Internal posting: The announcement appears on Meta-Wiki or a mailing list.

- Community discovery: Editors, researchers, or curious users spot it and share it on social media.

- Amplification by influencers: Accounts with large followings in tech, education, or media retweet or repost it.

- Media pickup: Outlets like The New York Times, The Verge, or Axios pick up the story, often citing social media as their source.

- Global spread: Non-English communities translate and repurpose the content, leading to coverage in outlets like Le Monde, Der Spiegel, or El País.

This isn’t accidental. It’s the result of Wikipedia’s open structure. Anyone can read the raw material. Anyone can comment on it. Anyone can share it. And because reading it requires no paywall and no login, and no algorithmic feed decides who gets to see it, the original announcement reaches readers unfiltered.

In 2024, when Wikipedia added a new tool to detect plagiarism in citations, the announcement was posted in a small newsletter. A university librarian in Canada shared it on Mastodon with a breakdown of how it could help students. Within a week, the tool was being taught in 17 universities across Europe and North America. No email campaign. No webinar. Just one post and the right audience.

What gets amplified, and what doesn’t

Not every announcement spreads. Some fade into the background. The ones that stick have three things in common:

- They affect real people: Changes to editing rules, content moderation, or access restrictions trigger strong reactions.

- They challenge power: Announcements about censorship, government interference, or corporate influence get attention because they feel like resistance.

- They’re counterintuitive: When Wikipedia does something unexpected, like blocking edits from a country or allowing AI-generated content under strict conditions, it creates cognitive friction, which drives sharing.

Conversely, updates about backend improvements, UI tweaks, or internal staffing changes rarely break out unless they’re tied to controversy. The 2025 upgrade to the VisualEditor interface? Barely mentioned. But when the Foundation admitted it had accidentally deleted 300,000 citations during the rollout? That trended for three days.

Why traditional press misses the story

Newsrooms still think of Wikipedia as a static reference. They expect press releases, press conferences, or spokesperson quotes. But most of Wikipedia’s decisions come out of community discussions, not from its leadership, and those discussions don’t come with a spokesperson, a media relations team, or embargoed briefings.

Journalists who rely on official channels often miss the real story. They wait for an email. Meanwhile, the real conversation is happening in Discord servers, Twitter threads, and Reddit comment sections. The most accurate reporting on Wikipedia’s recent policy shifts came from independent journalists who followed the chatter, not the emails.

In 2023, a major investigation into gender bias in biographies was published after a researcher shared a dataset on Mastodon. The Wikimedia Foundation hadn’t issued a statement. But the data was public. The conversation was public. The truth was already out there, just not where traditional media was looking.

The hidden cost of viral attention

Amplification isn’t all good. When an announcement goes viral, it often gets distorted. A nuanced policy change about citation standards becomes “Wikipedia bans all academic sources.” A minor tweak to edit filters turns into “Wikipedia is censoring conservatives.”

Wikipedia’s community tries to correct misinformation, but its editors are outnumbered. In 2025, after the Foundation announced it would begin testing AI-assisted editing tools, over 60% of social media posts misrepresented the scope of the test. Some claimed it would replace human editors entirely. Others said it would delete all conservative viewpoints. Neither was true.

The Foundation responded by publishing a simple FAQ on its website. But few people read it. The viral falsehoods stuck. That’s the paradox: the same platforms that amplify Wikipedia’s voice also amplify its misrepresentations.

What’s next for Wikipedia’s outreach

Wikipedia isn’t trying to become a news organization. It doesn’t want to compete with The Washington Post. But it can’t ignore the fact that its most important updates now travel through social media, not press releases.

Some within the Foundation are pushing for better social media coordination. A small team now monitors trending topics related to Wikipedia and responds with clear, short posts. They don’t try to control the narrative. They just try to correct the worst lies.

Others argue that Wikipedia should stay quiet. That its power comes from being unpolished, unmanaged, and unfiltered. That trying to “manage” its announcements would ruin its authenticity.

The truth is probably somewhere in between. The platform thrives because it’s open. But if its announcements keep getting twisted, the public’s trust will erode. The challenge isn’t to control the message; it’s to make sure the original message is easy to find, simple to understand, and hard to ignore.

For now, the best strategy is simple: keep posting openly. Let the community share. And trust that when something matters, people will find it and spread it.

Do Wikipedia’s official announcements ever go viral on their own?

No. Wikipedia’s official announcements are written for internal use and rarely attract attention unless users share them first. Viral spread happens because editors, researchers, or journalists find the announcement and repost it on social media, not because Wikipedia promotes it.

Which social platforms are most important for spreading Wikipedia news?

Twitter (X) and Reddit are the most influential. Twitter is where journalists and editors react in real time. Reddit, especially r/Wikipedia and r/technology, hosts deep discussions that often lead to media coverage. Mastodon and Bluesky are growing in influence among academic and technical users, but they’re still niche compared to the bigger platforms.

Why don’t journalists contact Wikipedia directly for stories?

Wikipedia’s community-driven decisions don’t run through a traditional press office. There’s no spokesperson for a community vote, no media relations team announcing it, and no official channel for interviews about it. Journalists who wait for an official response often miss the story entirely. The real sources are the editors and users who post on social media, so smart reporters follow the chatter, not the email list.

Can Wikipedia control how its announcements are shared?

No. Wikipedia’s open model means anyone can share, quote, or reinterpret its content. The Foundation can’t control the narrative. Instead, it tries to respond quickly to major misconceptions with clear, plain-language posts. But it doesn’t try to censor or suppress anything, because that would go against its core principles.

What kind of Wikipedia announcements tend to go viral?

Announcements that involve censorship, bias, access restrictions, or AI are most likely to spread. Things that feel like power struggles, such as banning edits from a country, removing a popular editor, or letting AI help write articles, trigger strong reactions. Technical updates, like interface changes, rarely go viral unless something goes wrong.