Wikipedia doesn’t have editors in suits sitting in offices approving every change. It’s run by millions of volunteers, and that means things get messy. Someone adds a fake birthdate to a celebrity’s page. Another user reverts it. Then a third person brings back the fake date with a sarcastic comment. Suddenly, the article is locked. The edit war has begun.

What Counts as Vandalism?

Vandalism on Wikipedia isn’t just graffiti on a wall. It’s any edit meant to disrupt, not improve. That includes adding nonsense like "The moon is made of cheese," deleting entire sections of well-sourced content, inserting offensive language, or spamming links to unrelated websites. It’s not always obvious. Sometimes it’s subtle: changing a politician’s party affiliation without evidence, or tweaking a statistic to make a company look better.

Wikipedia’s community identifies vandalism fast. Bots scan every edit in real time. One bot, ClueBot NG, has reverted over 10 million edits since 2007. It catches the obvious stuff: random strings of letters, repeated insults, or links to known spam domains. But bots aren’t perfect. They miss nuanced edits, like rewriting a paragraph to sound more favorable to a corporation while keeping it technically true. That’s where humans come in.
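To make the "obvious stuff" concrete, here is a toy sketch of two such checks: links to known spam domains and random strings of letters. It is not how ClueBot NG works (that bot relies on machine learning). The blocklist, the function names, and the vowel-ratio threshold are all assumptions made up for illustration.

```python
import re

# Hypothetical blocklist; a real one would be far larger and community-maintained.
SPAM_DOMAINS = {"cheap-pills.example", "win-prizes.example"}

# Captures the host part of an http(s) URL.
URL_HOST = re.compile(r"https?://([^/\s]+)", re.IGNORECASE)


def looks_like_gibberish(word: str) -> bool:
    """Flag long alphabetic tokens with very few vowels, e.g. 'asdfghjklqwrt'."""
    if len(word) < 10 or not word.isalpha():
        return False
    vowels = sum(ch in "aeiou" for ch in word.lower())
    return vowels / len(word) < 0.2  # assumed threshold, not a tuned value


def obvious_vandalism_reasons(added_text: str) -> list:
    """Return human-readable reasons the added text looks like obvious vandalism."""
    reasons = []
    for host in URL_HOST.findall(added_text):
        if host.lower() in SPAM_DOMAINS:
            reasons.append(f"spam domain: {host.lower()}")
    words = re.findall(r"[A-Za-z]+", added_text)
    if any(looks_like_gibberish(w) for w in words):
        reasons.append("random-looking string")
    return reasons


if __name__ == "__main__":
    print(obvious_vandalism_reasons("asdfghjklqwrt"))
    print(obvious_vandalism_reasons("See https://cheap-pills.example/buy now"))
    # A subtle, plausible-sounding edit yields no reasons at all.
    print(obvious_vandalism_reasons("The drug rarely causes nausea."))
```

Rules this crude will also misfire on some legitimate words. The last call shows the limitation described above: a softened but grammatical sentence passes untouched, which is why human review still matters.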

How Edit Wars Start

Edit wars happen when two or more editors keep reverting each other’s changes, often over controversial topics. Think politics, religion, history, or celebrity scandals. It’s not always malice. Sometimes it’s sincere disagreement. One editor believes a source is reliable; another thinks it’s biased. Both revert. Back and forth. The article becomes a battleground.

Wikipedia’s three-revert rule says: don’t make more than three reverts on a single page within 24 hours unless you’re undoing obvious vandalism. But that rule gets ignored. In 2023, over 12,000 edit wars were flagged on English Wikipedia alone. Most lasted less than a day. A few dragged on for weeks. The article on the Israel-Palestine conflict had over 2,000 edits in a single month during a major escalation. Each edit was reverted. Each reversion was debated.
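A 24-hour revert limit like the one above is easy to check mechanically. The sketch below takes the timestamps of one editor's reverts on one page and reports whether any 24-hour window holds more than the allowed number. The function name and input format are hypothetical. The limit is a parameter, and the default of three follows Wikipedia's three-revert rule.

```python
from datetime import datetime, timedelta


def exceeds_revert_limit(revert_times, max_reverts=3, window=timedelta(hours=24)):
    """Return True if more than `max_reverts` reverts fall inside any sliding window.

    `revert_times` holds datetimes for one editor's reverts on a single page.
    """
    times = sorted(revert_times)
    start = 0
    for end, current in enumerate(times):
        # Advance the left edge until the window spans less than `window`.
        while current - times[start] >= window:
            start += 1
        if end - start + 1 > max_reverts:
            return True
    return False


if __name__ == "__main__":
    base = datetime(2024, 1, 1, 12, 0)
    burst = [base + timedelta(hours=h) for h in (0, 2, 4, 6)]
    spread = [base + timedelta(hours=h) for h in (0, 10, 20, 30)]
    print(exceeds_revert_limit(burst))   # four reverts within six hours
    print(exceeds_revert_limit(spread))  # never more than three in any 24 hours
```

A sliding window is used rather than calendar days, so four reverts straddling midnight still count together. A check like this only counts reverts. Whether a given revert was undoing vandalism, and is therefore exempt, remains a human call.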

The Role of Administrators

When edit wars escalate, administrators step in. These aren’t paid staff; they’re trusted volunteers who’ve earned the tools to lock pages, block users, and mediate disputes. To become one, you need to demonstrate consistency, calmness, and knowledge of Wikipedia’s policies. Fewer than 1% of active editors ever become admins.

When an article is locked, it’s not permanent. It’s usually semi-protected: only registered accounts that are at least four days old and have made at least 10 edits can edit. That stops anonymous vandals and new accounts from jumping in. Full protection, under which only admins can edit, is rare. It’s reserved for high-profile pages under constant attack, like those of sitting heads of state or major disasters.
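The protection levels described above amount to a small permission check. Here is a minimal sketch, assuming a hypothetical `Editor` record and three protection labels ("none", "semi", "full"). The four-day and 10-edit thresholds come from the text. Everything else is illustrative and is not MediaWiki's actual implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

MIN_ACCOUNT_AGE = timedelta(days=4)  # threshold from the text
MIN_EDIT_COUNT = 10                  # threshold from the text


@dataclass
class Editor:
    registered_at: Optional[datetime]  # None models an anonymous (logged-out) editor
    edit_count: int = 0
    is_admin: bool = False


def can_edit(editor: Editor, protection: str, now: datetime) -> bool:
    """Decide whether `editor` may edit a page with the given protection level."""
    if protection == "none" or editor.is_admin:
        return True
    if protection == "full":
        return False  # non-admins are locked out entirely
    if protection == "semi":
        if editor.registered_at is None:
            return False  # anonymous editors cannot edit semi-protected pages
        old_enough = now - editor.registered_at >= MIN_ACCOUNT_AGE
        return old_enough and editor.edit_count >= MIN_EDIT_COUNT
    raise ValueError(f"unknown protection level: {protection!r}")


if __name__ == "__main__":
    now = datetime(2025, 1, 10)
    newcomer = Editor(registered_at=datetime(2025, 1, 8), edit_count=50)
    veteran = Editor(registered_at=datetime(2024, 1, 1), edit_count=500)
    print(can_edit(newcomer, "semi", now))  # account is only two days old
    print(can_edit(veteran, "semi", now))
```

Both conditions must hold under semi-protection: an old account with only a handful of edits is still locked out, as is a two-day-old account with many.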

Administrators don’t decide what’s true. They enforce process. If two editors can’t agree, they’re directed to the article’s talk page. There, they must cite reliable sources. No opinions. No "I think." Only verifiable references. If one side can’t back up their claim with a published book, peer-reviewed journal, or major news outlet, their edit gets removed, not because it’s unpopular, but because it doesn’t meet Wikipedia’s standards.

Conflict Resolution Beyond Locks

Locking a page is a bandage, not a cure. Wikipedia has deeper tools for long-term conflict resolution.

- Dispute resolution noticeboards: Editors can post a link to a stalled article on the Administrators’ Noticeboard or the Third Opinion board. Neutral editors review the edits and suggest compromises.

- Mediation: Trained mediators from the community facilitate private discussions between warring editors. They don’t take sides. They help find common ground.

- Arbitration Committee: For the worst cases (repeat offenders, harassment, coordinated sockpuppet campaigns), the Arbitration Committee steps in. It’s like a court. Edits are reviewed. Evidence is presented. Users can be banned for months or permanently.

In 2024, the Arbitration Committee handled 89 cases. Half involved persistent edit warring. Four resulted in lifetime bans. The rest led to warnings, probation, or temporary blocks.

Why It Works

Wikipedia’s system isn’t perfect. It’s slow. It’s messy. But it’s resilient. Why? Because it’s built on trust, not control.

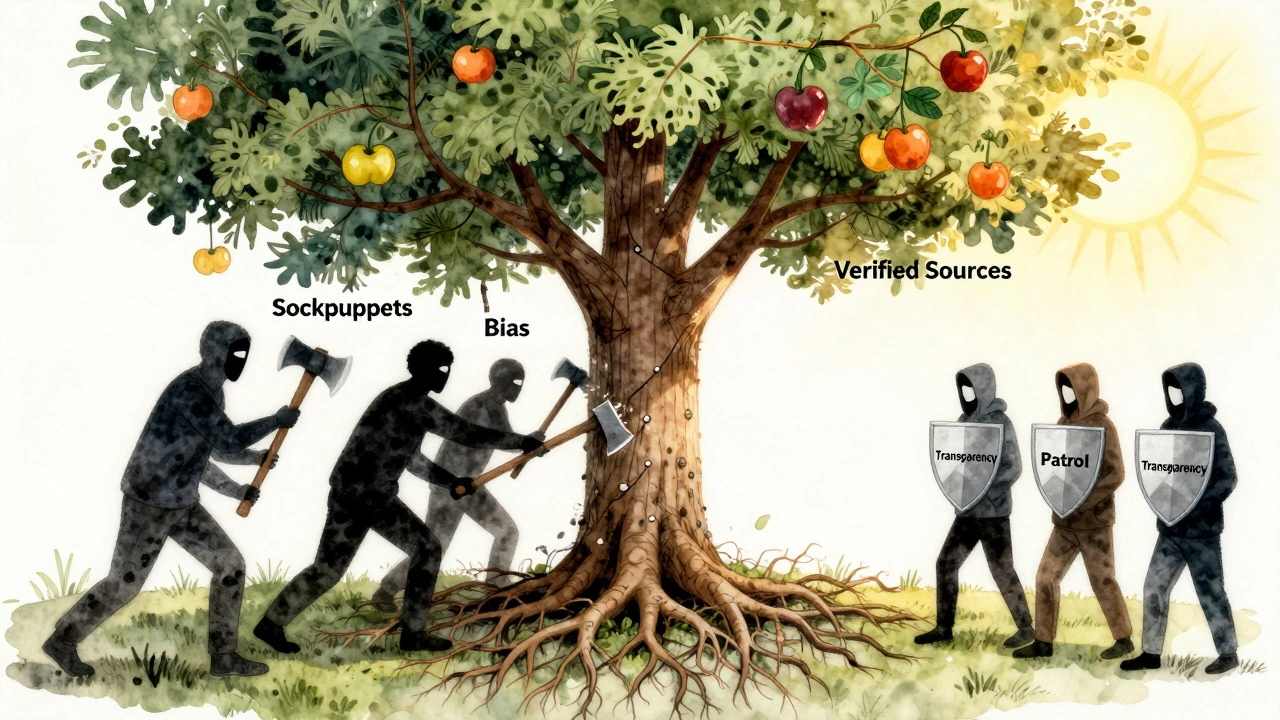

Every edit is public. Every user’s history is visible. If someone keeps adding false claims, others see the pattern. The community remembers. A user who edits 20 times a day to push a political agenda will eventually be flagged, not by a bot, but by a dozen other volunteers who’ve noticed the same behavior.

Wikipedia doesn’t rely on authority. It relies on transparency. You can’t hide behind anonymity for long. Even if you create a new account after being blocked, your editing style, timing, and choice of targets often give you away. Experienced editors learn to recognize patterns. It’s like a fingerprint.

And then there’s the culture. Most editors don’t want to fight. They want to build something lasting. A well-written, accurate article is a point of pride. When a dispute arises, many will step back and say, "Let’s get this right," not "Let’s win."

The Human Element

Behind every edit war is a person. Sometimes they’re a student who just learned a new fact and thinks it’s important. Sometimes they’re a lobbyist trying to shape public perception. Sometimes they’re just bored and trolling.

Wikipedia’s system gives everyone a chance to be heard, but only if they follow the rules. You can argue. You can challenge. You can even be wrong. But you can’t lie, you can’t harass, and you can’t keep reverting without justification.

One editor, who goes by "User:JennyFromTheBlock," spent six months working on the article about a local historical figure. She found obscure newspaper archives, interviewed descendants, and cited primary sources. Then someone from another country, with no connection to the topic, started deleting her citations and replacing them with vague blog posts. She didn’t retaliate. She posted her evidence on the talk page. Three other editors reviewed it. The blog posts were removed. Her version stayed.

That’s the quiet success of Wikipedia. It’s not about who’s loudest. It’s about who’s most thorough.

What Doesn’t Work

Wikipedia’s system breaks down when outsiders try to game it. Corporate PR teams, political groups, and activists sometimes coordinate edits to push narratives. They use multiple accounts. They cite unreliable sources. They pressure editors through social media.

In 2022, a major pharmaceutical company was caught using a network of 17 sockpuppet accounts to rewrite drug safety pages. The edits were subtle, such as changing "may cause nausea" to "rarely causes nausea." They didn’t add fake data. They just softened language. It took three months for volunteers to trace the pattern. The company was publicly named. The accounts were banned. The article was locked for six months.

Wikipedia doesn’t have legal power. But its transparency gives it moral authority. When the public sees how a company tried to manipulate an encyclopedia, the backlash is real. That’s a deterrent.

What’s Changing Now

In 2025, Wikipedia is testing new tools. One is AI-assisted conflict detection. It flags articles where edit frequency spikes and edit summaries become hostile. Another is a "revert ratio" metric that shows how often an editor’s changes are undone. If someone’s edits are reverted over 70% of the time, they get a gentle warning: "Your edits are being reverted frequently. Consider discussing on the talk page."

These tools don’t make decisions. They just surface patterns. The human judgment still matters most.
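The "revert ratio" idea is simple arithmetic: reverted edits divided by total edits, compared against the 70% threshold. Here is a minimal sketch with hypothetical function names. The `min_edits` floor is an added assumption, not something the tool is known to use, so that a newcomer with two or three edits is not warned on thin evidence.

```python
from typing import Optional

WARNING_THRESHOLD = 0.70  # "reverted over 70% of the time"
WARNING_TEXT = (
    "Your edits are being reverted frequently. "
    "Consider discussing on the talk page."
)


def revert_ratio(total_edits: int, reverted_edits: int) -> float:
    """Share of an editor's edits that were later undone (0.0 when there are none)."""
    if total_edits == 0:
        return 0.0
    return reverted_edits / total_edits


def warning_for(total_edits: int, reverted_edits: int, min_edits: int = 10) -> Optional[str]:
    """Return the gentle warning if the ratio exceeds the threshold, else None.

    `min_edits` is an assumed floor so tiny samples never trigger a warning.
    """
    if total_edits < min_edits:
        return None
    if revert_ratio(total_edits, reverted_edits) > WARNING_THRESHOLD:
        return WARNING_TEXT
    return None


if __name__ == "__main__":
    print(warning_for(total_edits=20, reverted_edits=15))  # ratio 0.75
    print(warning_for(total_edits=20, reverted_edits=5))   # ratio 0.25
```

The function only returns a message. In keeping with the point above, it surfaces a pattern and leaves any decision to people.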

Wikipedia’s biggest threat isn’t vandalism. It’s apathy. If too many experienced editors leave, the system weakens. That’s why the community focuses on mentoring new users. A 17-year-old who makes one good edit gets a message: "Thanks. Want to learn how to cite sources?" That’s how Wikipedia stays alive.

How does Wikipedia know if an edit is vandalism?

Wikipedia uses bots to catch obvious vandalism: nonsense text, spam links, or repeated insults. But humans are still the main line of defense. Volunteers monitor recent changes, and experienced editors recognize patterns. If an edit lacks sources, contradicts reliable information, or is clearly meant to disrupt, it’s flagged and reverted.

Can anyone lock a Wikipedia page?

No. Only administrators (volunteers who’ve earned trust through consistent, fair contributions) can lock pages. Locks are temporary and usually applied only when edit wars or vandalism become persistent. Semi-protection stops anonymous and new users from editing; full protection is rare and reserved for extreme cases.

Why don’t Wikipedia editors just vote on what’s true?

Wikipedia doesn’t allow voting because truth isn’t decided by majority opinion. A popular belief can still be false. Instead, editors must cite reliable, published sources. If a claim is backed by a peer-reviewed study or a major news outlet, it stays. If it’s just someone’s opinion, even if many people agree, it gets removed. The goal is accuracy, not popularity.

What happens to users who keep starting edit wars?

Repeated offenders get warnings, then temporary blocks. If they continue, they may be referred to the Arbitration Committee, which can impose longer bans or even permanent blocks. The system focuses on behavior, not intent. Even if someone believes they’re right, repeatedly reverting others without following policy will lead to consequences.

Is Wikipedia’s system still effective today?

Yes, but it’s under pressure. The number of active editors has declined since its peak in 2007, and coordinated manipulation attempts have increased. However, the core system (transparency, sourcing, and community moderation) still works. Most vandalism is caught within minutes. Most disputes are resolved without formal intervention. The system survives because volunteers still care enough to show up and fix it.

What You Can Do

If you use Wikipedia, you’re part of the system. You don’t need to become an editor to help. If you see vandalism, click "undo." If you see a disputed edit, check the talk page. If you’re curious about how something was decided, read the edit history. Wikipedia’s strength isn’t in its technology. It’s in its people.