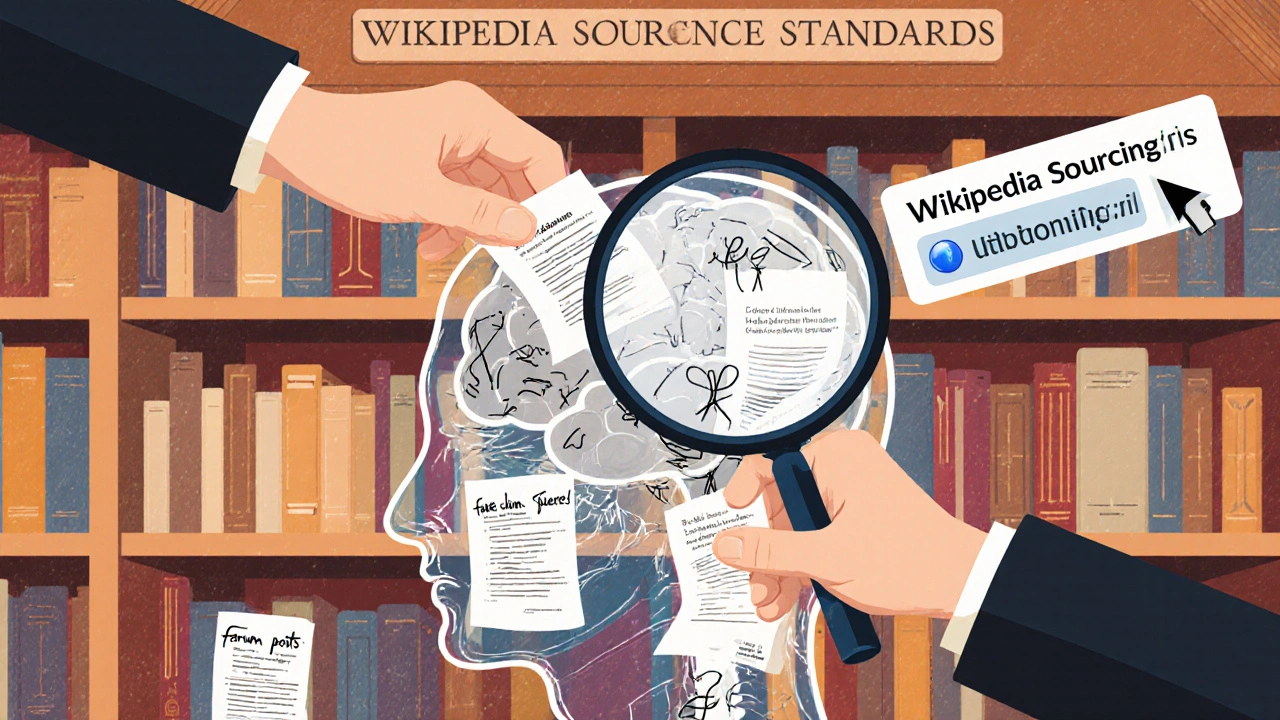

Every day, AI tools spit out answers that sound smart but are completely wrong. You ask about the history of the Berlin Wall, and it cites a fictional study from a nonexistent university. You ask who won the 2020 U.S. presidential election, and it lists three candidates with made-up vote counts. These aren't glitches; they're systemic failures. An AI model has no built-in way to tell what's true. Its answers are only as reliable as the sources it learned from. That's where Wikipedia's sourcing standards come in: not as a replacement for AI, but as the missing rulebook it never had.

Why AI Gets Things So Wrong

Large language models don't think. They predict. Trained on billions of sentences, they learn to guess the most likely next word. If a fake quote appears 12,000 times on blogs, Reddit, and YouTube comments, the model is likely to repeat it as fact. A 2024 Stanford study found that 68% of AI-generated responses about recent historical events contained at least one verifiable falsehood. And the worst part? Most users don't know how to check.
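To see why frequency beats truth, here is a deliberately tiny next-word predictor that simply counts word pairs (a bigram model). Real language models are neural networks trained on tokens, not word-pair counters, so treat this as a toy illustration under that simplifying assumption. The corpus is invented. The failure mode is the same, though: the model returns whichever continuation it saw most often, whether or not it is true.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Invented corpus: one accurate sentence, five copies of a false one.
corpus = ["the wall fell in 1989"] + ["the wall fell in 1991"] * 5
model = train_bigrams(corpus)
print(predict_next(model, "in"))  # prints 1991
```

Nothing in the counts records which sentence was correct. Repetition alone decides the answer.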

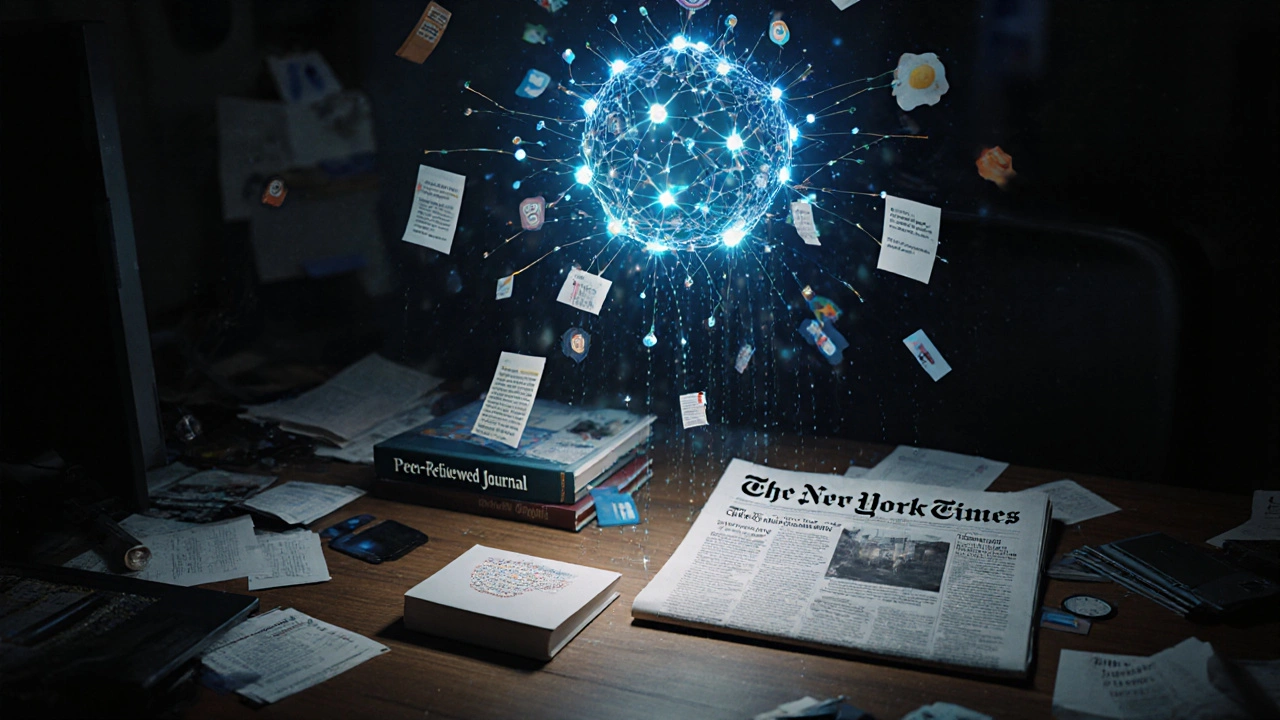

Unlike a careful human reader, AI doesn't ask: "Where did this come from?" It doesn't care whether the source is a peer-reviewed journal, a biased blog, or a tweet from someone who's never held a history book. It only tracks patterns. That's why AI hallucinations are so common in areas like science, law, and politics, where accuracy matters and misinformation spreads fast.

What Wikipedia Gets Right That AI Doesn’t

Wikipedia doesn't claim to be the source of truth. It claims to be a source of verifiable truth. Every claim on Wikipedia must be attributable to a reliable, published source, and anything challenged or likely to be challenged needs an inline citation. In practice, that means books from academic presses, peer-reviewed journals, major newspapers, and government reports: anything with editorial oversight. Personal blogs, forums, and social media posts? Generally not allowed.

Here's how it works in practice. If someone edits a Wikipedia page to say "The moon landing was faked," that edit is usually reverted within minutes. Why? Because no credible source backs it up. Not because Wikipedia is biased, but because of two core rules: verifiability and no original research. Claims must be cited, and those citations are public. Anyone can click them and read the original material.
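The "no reliable source, no edit" rule can be sketched as a simple check. This is a hypothetical illustration, not how Wikipedia works internally. Editors judge reliability case by case, not with a fixed allow-list. The `RELIABLE_DOMAINS` set, the `Edit` type, and the `review` function are all invented for this example.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Hypothetical allow-list for illustration only; on Wikipedia, reliability
# is judged by editors case by case, not by a fixed list like this one.
RELIABLE_DOMAINS = {"nature.com", "thelancet.com", "nytimes.com", "fda.gov"}


@dataclass
class Edit:
    claim: str
    citations: list[str] = field(default_factory=list)


def domain_of(url: str) -> str:
    """Return the host of a URL without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host.removeprefix("www.")


def review(edit: Edit) -> str:
    """Keep an edit only if at least one citation comes from an allowed domain."""
    if any(domain_of(url) in RELIABLE_DOMAINS for url in edit.citations):
        return "keep"
    return "revert"


print(review(Edit("The moon landing was faked.")))  # revert
print(review(Edit("Apollo 11 landed in 1969.",
                  ["https://www.nature.com/articles/example"])))  # keep
```

An uncited claim never survives review, no matter how often it is repeated elsewhere.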

AI has no equivalent. It doesn't show you where its answer came from. It doesn't let you trace the claim back to its origin. It just gives you a smooth, confident-sounding paragraph, and you're left wondering if it's real.

How Wikipedia’s Rules Can Be Applied to AI Training

Fixing AI misinformation isn't about making AI smarter. It's about making it more disciplined. The solution isn't a new algorithm; it's a new standard. Here's how you'd build it:

- Only train on sources that meet Wikipedia's reliability criteria: no unmoderated forums, no self-published eBooks, no Reddit threads. Stick to peer-reviewed journals, established news outlets, and official publications.

- Require source attribution: every AI response should include a link or citation to the source material, just as Wikipedia does. If the AI can't cite it, it shouldn't say it.

- Build a "trust score" for sources: assign weights based on editorial standards. A peer-reviewed paper from The Lancet gets a higher score than a blog post on Medium. AI should prioritize high-trust sources.

- Flag unsupported claims: if an AI can't find a reliable source for a statement, it should say, "I can't verify this." Not guess. Not invent. Not bluff.
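The last three rules (cite, score, and abstain) can be combined in a short sketch. The source categories, the weights in `TRUST_SCORES`, and the 0.7 threshold are hypothetical values chosen for illustration. No AI vendor is known to use these numbers. The function cites the most trusted source, or declines to answer when no source clears the bar.

```python
from dataclasses import dataclass

# Hypothetical trust weights per source type; the numbers are illustrative only.
TRUST_SCORES = {
    "peer_reviewed": 1.0,
    "government": 0.9,
    "major_news": 0.7,
    "self_published": 0.1,
    "forum": 0.0,
}


@dataclass
class Source:
    title: str
    kind: str  # expected to be one of the TRUST_SCORES keys


def answer(claim: str, sources: list[Source], threshold: float = 0.7) -> str:
    """Cite the most trusted source for a claim, or abstain if none clears the threshold."""
    # Unknown source types score 0.0, so they never clear a positive threshold.
    scored = [(TRUST_SCORES.get(s.kind, 0.0), s) for s in sources]
    trusted = [(score, s) for score, s in scored if score >= threshold]
    if not trusted:
        return "I can't verify this."
    _, best = max(trusted, key=lambda pair: pair[0])
    return f"{claim} [source: {best.title}]"


print(answer("Claim A", [Source("Reddit thread", "forum")]))
print(answer("Claim B", [Source("Medium post", "self_published"),
                         Source("The Lancet paper", "peer_reviewed")]))
```

The design choice that matters is the abstention branch. When every available source is weak, the function refuses to answer instead of citing the best of a bad set.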

Some companies are already trying this. Google's Gemini can show sources for many responses, and Anthropic's Claude cites references when it searches the web. But these are still optional features, not core rules. They're like seatbelts you can choose not to buckle: useful, but not mandatory.

Real-World Example: Correcting a Common AI Lie

In early 2025, multiple AI models claimed that the U.S. Food and Drug Administration (FDA) had approved a new weight-loss drug called "VitaSlim" with 92% effectiveness. The drug didn’t exist. No clinical trials were registered. No FDA announcement was made.

But the lie kept spreading. Why? Because AI had been trained on scraped forum posts, product ads, and fake news sites that repeated the same false claim. When users searched for "VitaSlim FDA approval," AI confidently answered with details that never happened.

Wikipedia's approach would have stopped this. If someone had tried to add "VitaSlim" to a list of FDA-approved drugs, the edit would have been reverted quickly. No reliable source, no entry.

Imagine if AI systems were trained the same way. They'd only "know" about drugs that appear in FDA databases, peer-reviewed journals, or official press releases. The lie would never have entered the training data in the first place.
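Here is a minimal sketch of that training-data filter, assuming every scraped document has already been labeled with a source category. Getting reliable labels is the hard part in practice. The category names, the `Document` type, and the example texts are all invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical source categories accepted for claims about drug approvals.
ALLOWED_KINDS = {"fda_database", "peer_reviewed", "official_press_release"}


@dataclass
class Document:
    text: str
    kind: str


def filter_corpus(docs: list[Document]) -> list[Document]:
    """Keep only documents whose source category is on the allow-list."""
    return [d for d in docs if d.kind in ALLOWED_KINDS]


def mentions(docs: list[Document], term: str) -> bool:
    """True if any document contains `term`, ignoring case."""
    return any(term.lower() in d.text.lower() for d in docs)


# Invented example documents; "VitaSlim" appears only in unreliable sources.
corpus = [
    Document("VitaSlim is FDA approved and 92% effective!", "forum"),
    Document("Buy VitaSlim today, now FDA approved", "product_ad"),
    Document("Label and approval letter for an approved weight-loss drug", "fda_database"),
]
clean = filter_corpus(corpus)
print(mentions(corpus, "VitaSlim"))  # True
print(mentions(clean, "VitaSlim"))   # False
```

After filtering, the fake drug never appears in anything the model trains on, so there is nothing for it to repeat.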

Why This Isn't Just About Accuracy: It's About Trust

People don't just want correct answers. They want to know they can rely on them. When AI gives you a wrong answer about your health, your finances, or your rights, the damage isn't just factual; it's emotional. You start doubting everything.

Wikipedia has built trust over more than two decades by being transparent. You see the sources. You see the edits. You see the discussion. You can even help fix mistakes. That's why, despite its flaws, 90% of students and researchers still use it as a starting point for research.

AI has none of that. It’s a black box. You ask a question. It answers. You have no way to verify, challenge, or improve it. That’s not helpful. It’s dangerous.

What’s Holding Back AI from Adopting These Standards?

There are three big reasons:

- Cost: curating high-quality, licensed sources is expensive. Most AI companies scrape the open web because it's free.

- Speed: training on messy, unfiltered data is faster. Clean data takes time to sort and verify.

- Profit: if AI starts saying "I don't know" more often, users might switch to competitors who still answer confidently, even when they're wrong.

But here’s the catch: the cost of getting it wrong is higher. A single AI-generated medical error can cost lives. A false legal claim can ruin someone’s career. A fabricated historical fact can fuel real-world violence.

Wikipedia didn’t become the most trusted reference site by being the fastest or cheapest. It became trusted by being the most careful.

The Path Forward: A New Standard for AI

It’s time to treat AI like a library, not a fortune-teller. Libraries don’t guess. They reference. They cite. They verify.

Here’s what needs to happen:

- AI developers should adopt Wikipedia’s verifiability and no original research policies as baseline standards.

- Regulators should require source citations in AI responses for health, legal, and educational use cases.

- Users should demand transparency. If an AI can’t show you where it got its answer, don’t trust it.

This isn't about replacing AI. It's about making it responsible. AI can be incredibly useful, if it learns to respect truth instead of just mimicking it.

Wikipedia didn't tackle misinformation by banning lies. It built a system that makes lies hard to sustain. AI can do the same. It just needs to start citing its sources.

Why can’t AI just learn from Wikipedia directly?

AI can't rely on Wikipedia alone because Wikipedia is a summary, not a source. The real sources are the citations at the bottom of each article: journals, books, news reports. AI needs to train on those original sources, not just on Wikipedia's rewritten versions. Otherwise, it's learning from a secondhand account, which defeats the purpose.
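One way to get from an article to its original sources is to pull the cited URLs out of the article's wikitext. The sketch below uses regular expressions and only handles the common `{{cite ...|url=...}}` pattern inside `<ref>` tags. Book citations without a URL (for example, ones identified only by ISBN) would need a separate lookup. A dedicated wikitext parser such as mwparserfromhell is more robust than regexes. The sample text is invented.

```python
import re

# Body of each non-self-closing <ref> or <ref name="..."> tag; <references> is not matched.
REF_TAG = re.compile(r"<ref(?:\s[^>/]*)?>(.*?)</ref>", re.DOTALL)
# Value of a |url= parameter inside a citation template such as {{cite news}}.
URL_PARAM = re.compile(r"\|\s*url\s*=\s*([^|}\s]+)")


def cited_urls(wikitext: str) -> list[str]:
    """Return the URLs cited inside <ref> tags, in order of appearance."""
    urls = []
    for ref in REF_TAG.findall(wikitext):
        urls.extend(URL_PARAM.findall(ref))
    return urls


sample = (
    'The wall fell in 1989.<ref>{{cite news |title=Wall opens '
    '|url=https://example.org/wall |date=1989}}</ref> '
    'More text.<ref name="b">{{cite book |title=Some book |isbn=123}}</ref>'
)
print(cited_urls(sample))  # ['https://example.org/wall']
```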

Do any AI tools already use Wikipedia-style sourcing?

Some, like Google's Gemini and Anthropic's Claude, now offer optional citations in certain modes. But these are not enforced by default. Most consumer-facing AI tools still give answers without showing sources. Until citing reliable sources becomes a requirement rather than a bonus, AI will keep making the same mistakes.

Can Wikipedia’s rules prevent all AI hallucinations?

No system can prevent every error. But Wikipedia's model would drastically reduce them. By requiring reliable, published sources and rejecting unsupported claims, it cuts off the most common sources of AI hallucinations: blogs, forums, and fake news. It doesn't eliminate mistakes, but it makes them rarer and easier to catch.

What if a reliable source is wrong?

That's why Wikipedia uses multiple sources. If one journal makes a claim but three others contradict it, Wikipedia notes the disagreement. AI should do the same. If sources conflict, the AI should say so. It shouldn't pick one and pretend it's the truth. Truth isn't always a single answer; it's often a range of evidence.
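As a rough sketch of "say so when sources conflict," the function below reports disagreement instead of choosing a winner. It assumes each source's stance on the claim ("supports" or "contradicts") is already known. In a real system, determining that stance is a hard problem in its own right. The journal names are placeholders.

```python
from collections import Counter


def summarize(claim: str, stances: dict[str, str]) -> str:
    """Report agreement or disagreement; `stances` maps source name -> 'supports' or 'contradicts'."""
    tally = Counter(stances.values())
    support, contra = tally["supports"], tally["contradicts"]
    if support and contra:
        return f"Sources disagree on '{claim}' ({support} supporting, {contra} contradicting)."
    if support:
        return f"'{claim}' is supported by {support} source(s)."
    if contra:
        return f"'{claim}' is contradicted by {contra} source(s)."
    return "I can't verify this."


stances = {
    "Journal A": "supports",
    "Journal B": "contradicts",
    "Journal C": "contradicts",
    "Journal D": "contradicts",
}
print(summarize("Claim X", stances))
# Sources disagree on 'Claim X' (1 supporting, 3 contradicting).
```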

How can regular users push for better AI sourcing?

Ask for sources. Report answers without citations. Choose tools that show their references. Support companies that prioritize accuracy over speed. If enough users demand transparency, companies will have to respond. Right now, most people don’t know to ask. Once they do, the pressure will change the industry.