Wikipedia has over 60 million articles in more than 300 languages. Every day, thousands of edits happen: some are made by bots, many by volunteers, and a growing number are suggested by AI. But here’s the catch: no AI system, no matter how advanced, can replace the collective judgment of human editors who’ve spent years learning what counts as reliable, neutral, and verifiable. That’s where human-in-the-loop workflows come in. They don’t replace humans with machines. They make machines work for humans.

How AI Suggests Edits on Wikipedia

Tools like WikiGrok and EditBot now scan Wikipedia articles and propose changes based on patterns learned from millions of past edits. These systems can fix typos, update dates, add citations from recent studies, or flag statements that lack sources. In 2024, AI-generated suggestions accounted for nearly 12% of all proposed edits on the English Wikipedia, up from just 3% in 2021.

But here’s what most people don’t realize: almost none of these suggestions go live automatically. Instead, they land in a queue. A human editor, often a volunteer with a background in history, science, or journalism, reviews each one. The editor checks whether the edit meets Wikipedia’s core content policies (neutral point of view, verifiability, and no original research) and respects community norms such as civility and consensus.

For example, an AI might suggest adding a claim like “Climate change is a hoax” because it found a blog post citing a discredited paper. A human editor sees that, checks the source, and rejects it. Another time, AI might recommend updating a biography with a new award won last week. The human editor confirms it with a reputable news outlet before approving.
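The queue-and-review flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Suggestion` and `ReviewQueue` names, their fields, and the example URLs are hypothetical and do not describe any actual Wikipedia tool. The point it shows is structural: AI output can enter the queue, but only a named human reviewer can move it to a publishable state.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Suggestion:
    """One AI-proposed edit (hypothetical structure for illustration)."""
    article: str
    proposed_text: str
    source_url: str
    status: Status = Status.PENDING
    reviewer: Optional[str] = None
    note: str = ""


@dataclass
class ReviewQueue:
    items: List[Suggestion] = field(default_factory=list)

    def submit(self, suggestion: Suggestion) -> None:
        # AI output only ever enters the queue; nothing is published here.
        self.items.append(suggestion)

    def pending(self) -> List[Suggestion]:
        return [s for s in self.items if s.status is Status.PENDING]

    def review(self, suggestion: Suggestion, reviewer: str,
               approve: bool, note: str = "") -> None:
        # A decision must be attributed to a human reviewer.
        if not reviewer:
            raise ValueError("a human reviewer is required")
        suggestion.status = Status.APPROVED if approve else Status.REJECTED
        suggestion.reviewer = reviewer
        suggestion.note = note

    def publishable(self) -> List[Suggestion]:
        # Only human-approved suggestions are eligible to go live.
        return [s for s in self.items if s.status is Status.APPROVED]


queue = ReviewQueue()
hoax = Suggestion("Climate change", "Climate change is a hoax",
                  "https://example.org/blog-post")
award = Suggestion("Example biography", "Received an award last week",
                   "https://example.org/news-report")
queue.submit(hoax)
queue.submit(award)

queue.review(hoax, "HumanEditor", approve=False, note="discredited source")
queue.review(award, "HumanEditor", approve=True, note="confirmed by news outlet")
```

After these two reviews, `queue.publishable()` contains only the award update, and the rejected suggestion keeps the reviewer's note for later analysis.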

Why Consensus Matters More Than Algorithms

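Wikipedia doesn’t run on majority vote. It runs on consensus, which means arguments are weighed by how well they rest on policy and sources, not by headcount. If a fact is well-sourced and 10 editors agree it should stay, one editor who simply doesn’t like the source can’t get it removed. Equally, a claim with no reliable source doesn’t stay just because many editors like it. AI doesn’t understand nuance like that. It sees numbers, not context.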

Take the case of the article on the 2023 Turkey-Syria earthquake. An AI bot noticed that a few news outlets had updated the death toll to 55,000 and suggested changing the number on Wikipedia. But other outlets still reported 52,000. The Wikipedia community didn’t just pick the highest number. They waited. They checked official government releases. They reviewed how each source collected its data. Only after three weeks, when multiple authoritative bodies confirmed 55,000, did the number change.

AI can’t do that. It doesn’t know when to wait. It doesn’t understand that in sensitive topics (politics, health, history) speed can hurt accuracy. Humans do. That’s why human-in-the-loop systems are not optional. They’re essential.

The Real Role of Humans in the Loop

Human editors aren’t just gatekeepers. They’re trainers. Every time a human accepts or rejects an AI suggestion, they’re teaching the system. This feedback loop is how AI gets better: not by reading more data, but by seeing what real editors value.

At the Wikimedia Foundation, teams track which AI suggestions are accepted or rejected. They found that suggestions involving medical claims were rejected 78% of the time if they came from non-peer-reviewed sources. So now, the AI is trained to flag those automatically and add a warning: “This claim needs a peer-reviewed source.”
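A rough sketch of how that kind of tracking could work: group review outcomes by topic and source type, compute a rejection rate for each group, and flag the groups above a threshold. The record layout, the function names, the sample data, and the 0.75 threshold are all assumptions made for illustration, not the Foundation's actual pipeline or figures.

```python
from collections import defaultdict


def rejection_rates(reviews):
    """Compute rejection rate per (topic, source_type) group.

    `reviews` is an iterable of (topic, source_type, accepted) tuples,
    a hypothetical log format chosen for this example.
    """
    totals = defaultdict(int)
    rejected = defaultdict(int)
    for topic, source_type, accepted in reviews:
        key = (topic, source_type)
        totals[key] += 1
        if not accepted:
            rejected[key] += 1
    return {key: rejected[key] / totals[key] for key in totals}


def groups_to_flag(rates, threshold=0.75):
    """Return groups whose rejection rate meets the (assumed) threshold."""
    return sorted(key for key, rate in rates.items() if rate >= threshold)


# Made-up sample log: 3 of 4 non-peer-reviewed medical suggestions rejected,
# 1 of 4 peer-reviewed ones rejected.
sample_log = (
    [("medical", "non_peer_reviewed", False)] * 3
    + [("medical", "non_peer_reviewed", True)]
    + [("medical", "peer_reviewed", True)] * 3
    + [("medical", "peer_reviewed", False)]
)

rates = rejection_rates(sample_log)
flagged = groups_to_flag(rates)
```

With this sample log, only the non-peer-reviewed medical group crosses the threshold, so future suggestions in that group would be the ones to carry a warning.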

Another insight: AI is great at updating dates and names. But it fails at detecting tone. It might rewrite a dry paragraph to sound “more engaging,” but accidentally make it sound biased. Humans catch that. One editor told me they once rejected 42 AI edits in a row because the system kept replacing “alleged” with “reported”, a tiny word change that removed critical nuance about unproven claims.
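A simple guard can surface this kind of change for a reviewer. The sketch below compares the text before and after an edit and reports any hedging words that disappeared. The word list is a small hypothetical sample, and a check like this can only prompt a human to look; it cannot judge whether the change was justified.

```python
import re
from collections import Counter

# Hypothetical sample of words that signal an unproven claim.
HEDGES = {"alleged", "allegedly", "purported", "purportedly",
          "unproven", "unconfirmed", "disputed"}


def _hedge_counts(text):
    """Count occurrences of each hedging word in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w in HEDGES)


def dropped_hedges(before, after):
    """Return hedging words present in `before` but missing from `after`."""
    # Counter subtraction keeps only positive differences.
    missing = _hedge_counts(before) - _hedge_counts(after)
    return sorted(missing.elements())


before = "The alleged payments were made in 2019."
after = "The reported payments were made in 2019."
removed = dropped_hedges(before, after)  # ["alleged"]
```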

How Editors Use AI Tools in Practice

Most active Wikipedia editors don’t wait for AI to suggest edits. They use tools to speed up their own work. For example:

- RefCheck highlights references that are broken or missing. AI scans the text and flags citations that link to 404 pages.

- WikiProof suggests better phrasing for sentences that sound like opinion, not fact.

- ConflictDetector warns editors when they’re about to edit an article that’s under dispute or has pending mediation.

These tools don’t make decisions. They just give editors more information. One editor in Berlin told me she uses RefCheck every morning before she starts editing. It cuts her fact-checking time by 40%. But she still reads every source herself. “The AI finds the broken link,” she said. “I decide if the source was worth linking to in the first place.”
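To make the broken-link idea concrete, here is a minimal sketch of a checker in the spirit of the tool described above. It is not RefCheck's implementation: the function names and the URL pattern are assumptions for illustration. It extracts URLs from article text, asks for each one's HTTP status, and lists those that fail. The status lookup is passed in as a parameter so the logic can be tried without network access.

```python
import re
import urllib.error
import urllib.request

# Rough URL pattern for illustration; real wikitext needs a proper parser.
URL_PATTERN = re.compile(r"https?://[^\s<>\]|}]+")


def http_status(url, timeout=10.0):
    """Return the HTTP status code for `url`, or None if unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 404 for a dead page
    except (OSError, ValueError):
        return None  # DNS failure, timeout, malformed URL, etc.


def find_broken_links(text, get_status=http_status):
    """Return URLs in `text` whose status is an error or unknown."""
    urls = [u.rstrip(".,;:)") for u in URL_PATTERN.findall(text)]
    broken = []
    for url in dict.fromkeys(urls):  # de-duplicate, keep first-seen order
        status = get_status(url)
        if status is None or status >= 400:
            broken.append(url)
    return broken


# Offline usage example with a stand-in status lookup.
fake_statuses = {"https://example.org/ok": 200, "https://example.org/gone": 404}
article = ("Claim one.<ref>https://example.org/ok</ref> "
           "Claim two.<ref>https://example.org/gone</ref>")
broken = find_broken_links(article, get_status=fake_statuses.get)
```

As in the editor's workflow, the output is only a list of links to look at. Whether the source deserved a citation in the first place remains a human call.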

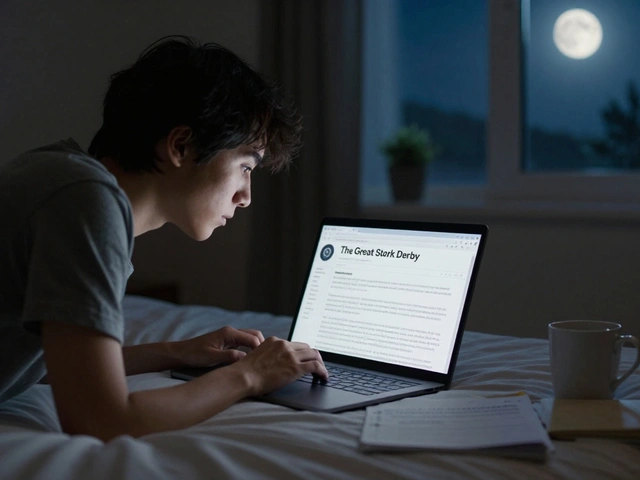

What Happens When Humans Are Left Out

There are cautionary tales. In 2023, a startup launched an AI-powered encyclopedia that auto-published edits based on AI confidence scores. Within months, it was full of inaccuracies: it listed Napoleon as born in 1771 (he was born in 1769), claimed the Eiffel Tower was built in 1920 (it was completed in 1889), and inserted fake quotes from Einstein.

Why? Because the system trusted sources that sounded authoritative but weren’t. It didn’t know the difference between a university press book and a self-published blog. It didn’t care about consensus. It only cared about confidence percentages.

Wikipedia avoided this by keeping humans in the loop. Every edit, even the smallest one, passes through someone who cares about accuracy, not just speed.

Why This Model Works for the Long Term

AI will keep getting smarter. But Wikipedia’s strength isn’t in its technology. It’s in its community. And that community has built a system that doesn’t fear AI. It absorbs it.

Human-in-the-loop workflows are scalable. As more people join Wikipedia, the system gets better. More editors mean more feedback for AI. More feedback means smarter suggestions. And smarter suggestions mean less work for editors. It’s a virtuous cycle.

Compare that to closed systems like corporate knowledge bases or AI chatbots trained on Wikipedia data without human oversight. They get stale. They drift. They hallucinate. Wikipedia doesn’t. Because every claim, every date, every word is checked by someone who has skin in the game.

There’s no magic formula. No algorithm that can replace a human who’s read 20 sources on a topic and still isn’t sure. But there is a powerful hybrid: AI that finds the needle, and humans who know which haystacks are worth searching.

How You Can Help

You don’t need to be a scholar to contribute. You just need to care about truth. If you’ve ever corrected a typo on a Wikipedia page, you’ve already taken part in this system. Here’s how to get involved:

- Go to wikipedia.org and click “Edit” on any article.

- Use the AI suggestion tool (it’s turned on by default for logged-in users).

- Review each suggestion. Ask: Is this sourced? Is it neutral? Does it add value?

- If you’re unsure, leave a note on the article’s talk page. Others will help.

- Over time, you’ll learn what works, and you’ll help train the AI too.

There are over 100,000 active editors on Wikipedia. Most of them aren’t professionals. They’re teachers, nurses, students, retirees. They’re people who believe information should be free and accurate.

AI won’t replace them. It will help them do more. And that’s the future of knowledge.

Can AI edit Wikipedia without human approval?

No. AI suggestions cannot go live on Wikipedia without human review. They appear as proposals in the edit interface, but only humans can click “Publish.” Bots that fix typos or add dates are a separate case: they must be approved by the community in advance and operate under strict rules.

Are AI-generated edits more accurate than human edits?

Not consistently. AI excels at surface-level fixes such as grammar, formatting, and broken links. But humans are far better at judging context, bias, and reliability. Studies from the Wikimedia Foundation show that human-approved edits have a 92% accuracy rate over six months, while AI-only edits drop to 67% after three months due to undetected errors.

How does Wikipedia prevent AI from spreading misinformation?

Wikipedia uses multiple layers: AI suggestions are flagged if they come from low-reliability sources, editors are alerted when an edit contradicts recent consensus, and high-risk articles (like those on medicine or politics) are protected from automated edits. The community also manually reviews all AI-generated content in the “Recent Changes” feed.

Do I need to be a tech expert to use AI tools on Wikipedia?

No. The AI tools are built into the standard edit interface. You’ll see suggestions highlighted in light blue. You can accept, reject, or ignore them with one click. No coding or special skills are needed, just critical thinking.

What’s the difference between AI suggestions and bots on Wikipedia?

Bots are automated programs that make direct edits under strict rules, like fixing spacing or updating templates. AI suggestions are recommendations shown to humans for review. Bots act. AI suggests. Humans decide.