Wikipedia’s promise of neutrality isn’t just a guideline; it’s the foundation of its credibility. But when editors from different backgrounds, cultures, or ideologies clash over how to describe a sensitive topic, neutrality can feel impossible. In recent years, high-profile disputes over articles on climate change, political figures, and historical events have sparked heated debates across talk pages, arbitration committees, and even mainstream media. These aren’t just technical disagreements. They’re fights over truth, representation, and who gets to decide what counts as fact.

What NPOV Really Means on Wikipedia

NPOV stands for Neutral Point of View. It doesn’t mean avoiding opinion altogether. It means presenting all significant viewpoints fairly and in proportion to their prominence in reliable sources, without endorsing any. If a scientist says climate change is human-caused, and a minority group of researchers disagrees, Wikipedia must include both, but only if they’re backed by reliable sources and have enough support to be considered significant. The rule sounds simple. Applying it? Not so much.

Take the article on climate change. In 2024, a group of editors pushed to add language suggesting the scientific consensus was "uncertain," citing a single non-peer-reviewed paper. Opponents pointed to the Intergovernmental Panel on Climate Change’s 2023 report, which stated that human activities have unequivocally caused global warming. After weeks of edits, reversions, and mediation, the Arbitration Committee stepped in. They ruled: only consensus positions from major scientific bodies could be presented as facts. Minor dissenting views could be mentioned, but only with clear context about their lack of acceptance. The article was locked for a month while edits were reviewed. When it reopened, the language was clearer, better sourced, and firmly aligned with NPOV.

The Ukraine-Russia War Article: A Battle of Narratives

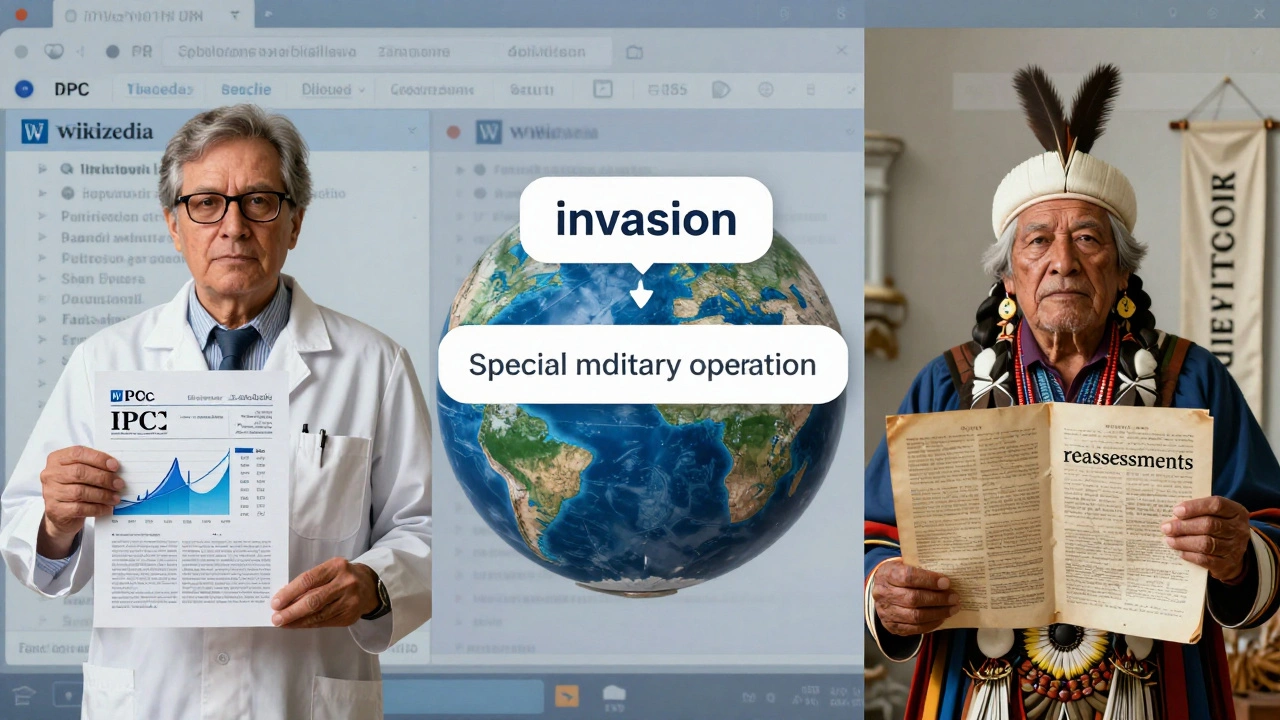

One of the most intense NPOV battles in 2025 centered on the Wikipedia article about the Ukraine-Russia war. Editors from Russian-speaking backgrounds, Western media-trained editors, and Ukrainian diaspora contributors each pushed different narratives. One side used terms like "special military operation," while others called it an "invasion." Some added details about Ukrainian military actions that others saw as justifying aggression. The article became a battleground.

What saved it wasn’t a vote or a majority rule. It was two policies working together: "No Original Research" and "Reliable Sources." Administrators locked the article and asked editors to cite only major international outlets (BBC, Reuters, AP, Al Jazeera). Ukrainian and Russian state media could be cited too, but only to represent a viewpoint, not to state facts. For example, if the Kremlin claimed a city was "liberated," that claim could be noted, but only alongside reports from independent journalists on the ground showing civilian displacement. The solution? Transparency. Every contested claim was tagged with its source and context. The article now reads like a mosaic of verified statements, not a single story.

Historical Figures and Cultural Sensitivity

Another flashpoint emerged in early 2025 around the article on Christopher Columbus. Some editors wanted to remove all positive language, calling him a "colonizer" and "genocidal." Others argued the article should reflect his role in global exploration and the historical context of his time. The dispute escalated when editors from Indigenous communities in North and South America raised concerns about erasure and harm.

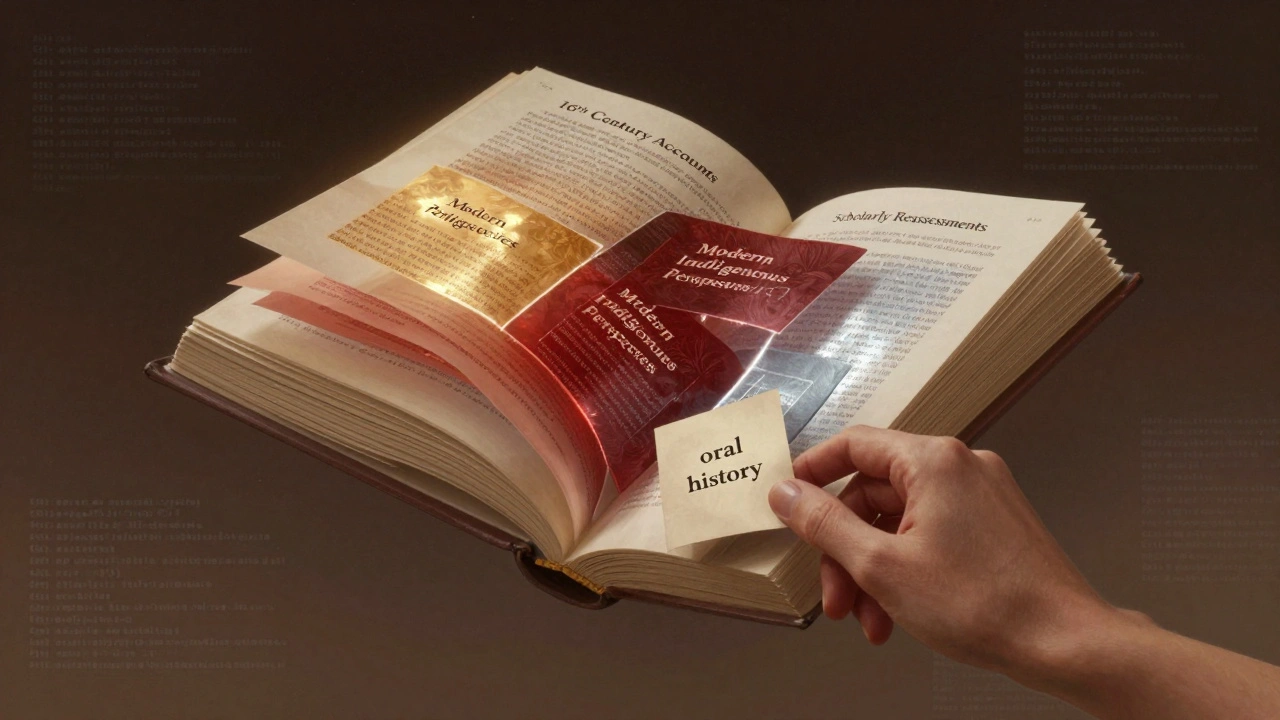

Wikipedia’s solution wasn’t to pick a side. It was to restructure the article entirely. The lead section now opens with a summary of how perceptions of Columbus have changed over time. Then it breaks into subsections: "Historical Accounts from the 16th Century," "Modern Indigenous Perspectives," "Scholarly Reassessments Since the 1990s," and "Public Commemorations." Each section cites primary sources, academic studies, and oral histories. The article doesn’t say Columbus was good or bad. It says: here’s how people have understood him, and here’s why those understandings changed. The result? A 3,000-word article that feels less like a biography and more like a documentary.

How Wikipedia Actually Resolves These Disputes

Most people think Wikipedia disputes are settled by votes. They’re not. The real tools are:

- Mediation - A neutral third-party editor helps conflicting sides find common ground. No one wins. Everyone listens.

- Arbitration - For serious, repeated conflicts, a panel of experienced editors reviews edit histories, sources, and tone. They can lock pages, ban users, or mandate restructuring.

- Source-based consensus - If two editors disagree, the deciding factor isn’t who’s louder. It’s who cites the most credible, widely accepted sources.

- Page protection - Temporary locks prevent edit wars. This isn’t censorship. It’s a cooling-off period.

There’s no perfect system. But the process works because it’s transparent. Every edit, every comment, every arbitration decision is public. You can read the full logs of how the Columbus article was changed, down to the minute. That openness is what makes Wikipedia different from every other encyclopedia.
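For readers who want to look at that public record themselves, here is a minimal sketch of how a page's edit history might be pulled from the MediaWiki Action API that Wikipedia exposes. The helper names (`build_history_url`, `summarize_revisions`, `fetch_history`) and the User-Agent string are made up for illustration, and the choice of fields is an assumption about what you would want to see; treat it as a starting point, not an official client.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Public endpoint of the MediaWiki Action API on English Wikipedia.
API_URL = "https://en.wikipedia.org/w/api.php"


def build_history_url(title, limit=10):
    """Build a query URL for the most recent revisions of one article."""
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "timestamp|user|comment",  # who edited, when, and why
        "rvlimit": limit,
        "format": "json",
        "formatversion": 2,  # returns "pages" as a list
    }
    return f"{API_URL}?{urlencode(params)}"


def summarize_revisions(payload):
    """Turn an API response (already parsed from JSON) into readable lines."""
    lines = []
    for page in payload.get("query", {}).get("pages", []):
        for rev in page.get("revisions", []):
            timestamp = rev.get("timestamp", "?")
            user = rev.get("user", "?")
            comment = rev.get("comment", "")
            lines.append(f"{timestamp}  {user}: {comment}")
    return lines


def fetch_history(title, limit=10):
    """Fetch and summarize recent revisions (requires network access)."""
    # The User-Agent value is a placeholder; use one that identifies you.
    request = Request(
        build_history_url(title, limit),
        headers={"User-Agent": "npov-history-sketch/0.1 (example)"},
    )
    with urlopen(request) as response:
        return summarize_revisions(json.load(response))
```

Calling `fetch_history("Christopher Columbus", limit=5)` should return one line per recent edit with its timestamp, editor, and edit summary. The same history is also available without any code through the "View history" tab on every article.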

Why This Matters Beyond Wikipedia

These disputes aren’t just about Wikipedia. They’re a mirror for how society deals with truth in the digital age. When a TikTok or YouTube video distorts history, people turn to Wikipedia to find the real story. If Wikipedia fails at neutrality, it loses its role as a public reference.

That’s why the recent resolutions matter. They didn’t silence voices. They didn’t favor one side. They forced everyone to ground their claims in evidence. They reminded editors that neutrality isn’t about being boring. It’s about being honest about what’s known, what’s disputed, and where the evidence lies.

What Happens When NPOV Breaks Down

Not all disputes end well. In 2024, an article on a controversial political figure in Brazil was repeatedly vandalized with false claims. Despite multiple protections, the article became unusable. The community voted to archive it temporarily and rebuild from scratch using only verified sources. It took three months. The result? A clean, sourced, neutral article that now serves as a model for similar topics.

That’s the hidden truth: Wikipedia’s strength isn’t in its editors. It’s in its process. Anyone can edit. But only evidence, not emotion, can win.

What happens if an editor keeps pushing a biased viewpoint on Wikipedia?

Repeatedly pushing a biased viewpoint, even with sources, can lead to warnings, temporary blocks, or even permanent bans. Wikipedia doesn’t ban opinions; it bans disruption. If an editor ignores mediation, refuses to cite reliable sources, or engages in edit wars, the community can restrict their editing rights. The goal isn’t to silence them, but to protect the integrity of the article.

Can a minority viewpoint be included in a Wikipedia article?

Yes, but only if it’s supported by reliable, published sources and represents a significant minority position. For example, a small group of scientists who reject human-caused climate change can be mentioned, but only if their views appear in peer-reviewed journals or major publications. You can’t include fringe theories just because someone believes them. The bar is high: significance, not popularity.

How does Wikipedia handle conflicting sources?

Wikipedia doesn’t choose between sources. It presents them. If one source says a law passed in 2020 and another says 2021, the article will note both claims and cite each source. Editors then work to find which source is more credible, usually by checking the original document, official records, or multiple independent outlets. The final version reflects the best available evidence, not guesswork.

Are Wikipedia editors paid to be neutral?

No. Wikipedia editors are volunteers. Their neutrality comes from policy, not payment. The site has strict rules against paid advocacy, and editors who are found to be editing for financial gain can be banned. Most contributors are passionate about accuracy, not ideology. That’s why the system works: it’s built on trust, not money.

Can I trust Wikipedia if it’s edited by anyone?

You can trust it more than you think. While anyone can edit, the most viewed articles are monitored by hundreds of experienced editors. Changes are flagged, reviewed, and often reverted within minutes. Some studies have found Wikipedia’s accuracy on factual topics comparable to that of traditional encyclopedias. The key is to check the references. If an article has solid citations from reputable sources, it’s trustworthy, even if a random user made the last edit.

What You Can Do If You Want to Help

If you’ve ever thought Wikipedia is biased, try this: edit with sources. Find an article you care about, whether climate, history, or politics, and look at the talk page. See what’s disputed. Then go find reliable sources that back up your view. Don’t just change the text. Explain why on the talk page. Be patient. Listen. The system works when you engage with it, not against it.

Wikipedia’s neutrality isn’t perfect. But it’s the most transparent, evidence-based system we have. And right now, that’s worth protecting.