Wikipedia isn’t just a collection of articles. It’s a living system run by volunteers who spend hours checking facts, fixing errors, and shutting down bad actors. In the past year, administrators have taken stronger steps than ever to clean up the site. The goal isn’t to silence dissent. It’s to stop people who treat Wikipedia like a personal soapbox, a playground for harassment, or a tool for propaganda.

What counts as abusive behavior on Wikipedia?

Not every disagreement is abuse. But when someone repeatedly adds false information, deletes well-sourced content, or targets other editors with personal attacks, that’s when admins step in. Common patterns include:

- Inserting biased or misleading claims about living people, especially politicians or public figures

- Creating sockpuppet accounts to fake consensus or evade blocks

- Editing articles about sensitive topics (like wars, religions, or health) to push agendas

- Engaging in edit wars: repeatedly reverting others’ changes without discussion

- Using Wikipedia to harass individuals through article talk pages or user pages

These aren’t minor mistakes. They’re systematic attempts to manipulate a public resource. And they’re becoming more organized.

How admins are responding in 2025

This year, Wikipedia’s volunteer admins have moved from reactive fixes to proactive prevention. One major shift: automatic detection tools now flag suspicious edits before they go live. AI models trained on decades of vandalism patterns can now spot fake citations, sudden bursts of edits from new accounts, and coordinated campaigns targeting specific articles.

When these tools trigger, admins review the flagged edits within hours, not days. In some cases, edits are blocked before they even appear on the live site. For example, in March 2025, a coordinated group tried to rewrite the article on the 2024 U.S. presidential election results. The system flagged 87 edits from 12 different accounts within 48 hours. All were reverted, and the accounts were permanently blocked.
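The kind of burst described above (many edits to one article, from several new accounts, inside a short window) can be sketched with a simple sliding-window check. This is only an illustration, not Wikipedia's actual detection system: the `Edit` record, the `account_age_days` field, and every threshold are assumptions chosen for the example.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import NamedTuple


class Edit(NamedTuple):
    article: str
    account: str
    timestamp: datetime
    account_age_days: int  # hypothetical field: account age when the edit was made


def find_bursts(edits, window=timedelta(hours=48), min_edits=5,
                min_accounts=3, max_account_age_days=30):
    """Return {article: (edit_count, account_count)} for the largest burst found."""
    # Only consider edits from recently created accounts (assumed threshold).
    by_article = defaultdict(list)
    for e in edits:
        if e.account_age_days <= max_account_age_days:
            by_article[e.article].append(e)

    flagged = {}
    for article, items in by_article.items():
        items.sort(key=lambda e: e.timestamp)
        start = 0
        for end in range(len(items)):
            # Advance the left edge until the span fits inside the window.
            while items[end].timestamp - items[start].timestamp > window:
                start += 1
            in_window = items[start:end + 1]
            accounts = {e.account for e in in_window}
            if len(in_window) >= min_edits and len(accounts) >= min_accounts:
                # Keep the burst with the most edits for this article.
                if len(in_window) > flagged.get(article, (0, 0))[0]:
                    flagged[article] = (len(in_window), len(accounts))
    return flagged


# Made-up sample: 8 edits in 8 hours from 4 two-day-old accounts,
# plus one edit from a long-standing account on another article.
base = datetime(2025, 3, 1)
sample = [Edit("Example article", f"user{i % 4}", base + timedelta(hours=i), 2)
          for i in range(8)]
sample.append(Edit("Quiet article", "longtime_editor", base, 900))

print(find_bursts(sample))  # {'Example article': (8, 4)}
```

A flag from a check like this would only be a prompt for human review. The thresholds trade missed campaigns against false alarms on articles that are legitimately busy during breaking news.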

Another change: longer blocks. In the past, a typical block lasted 30 days. Now, repeat offenders, especially those who return under new usernames, face indefinite blocks. In October 2025, an editor known for inserting conspiracy theories into medical articles was banned after 14 separate attempts to circumvent previous blocks. His last account was tied to a known troll network based in Eastern Europe.

Accountability through transparency

Wikipedia admins don’t act in secret. Every block is recorded in a public block log, warnings are posted on user talk pages, and contested cases are discussed openly on pages such as the Administrators’ Noticeboard. Anyone can see why someone was blocked. There’s no mystery. If you’re blocked, you’ll find:

- The exact edits that triggered the action

- Links to Wikipedia policies that were violated

- Previous warnings you received

- Whether the block is temporary or permanent

This transparency isn’t just fair; it’s necessary. It lets the community hold admins accountable. If a block looks unjust, other editors can challenge it. In 2025, over 12% of proposed bans were overturned after community review.

Who’s being targeted?

It’s not just random trolls. The biggest targets are organized groups:

- Political operatives trying to shape narratives around elections

- Corporate PR teams altering product pages or executive biographies

- Extremist networks pushing hate speech under the guise of "historical revisionism"

- Spam farms inserting fake citations and links to low-quality websites

One case from November 2025 involved a network of 43 accounts linked to a single IP range in Southeast Asia. They were systematically editing articles on vaccines, climate change, and LGBTQ+ rights, all to insert misinformation. The admins traced the pattern through editing styles, timing, and language quirks. All accounts were blocked. The IP was added to a global block list.
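To make "linked to a single IP range" concrete, here is a minimal sketch of grouping accounts by the network their addresses fall into, using Python's standard `ipaddress` module. It is not how Wikipedia's tooling works. The function names, the /24 prefix, and the three-account threshold are assumptions, and the addresses come from ranges reserved for documentation.

```python
import ipaddress
from collections import defaultdict


def group_by_network(account_ips, prefix_len=24):
    """Map each IPv4 network of the given prefix length to the accounts seen in it."""
    groups = defaultdict(set)
    for account, ip in account_ips:
        # strict=False masks off the host bits, e.g. 203.0.113.5/24 -> 203.0.113.0/24
        network = ipaddress.ip_network(f"{ip}/{prefix_len}", strict=False)
        groups[network].add(account)
    return groups


def shared_ranges(account_ips, min_accounts=3, prefix_len=24):
    """Return only the networks used by at least `min_accounts` distinct accounts."""
    groups = group_by_network(account_ips, prefix_len)
    return {str(net): sorted(accounts)
            for net, accounts in groups.items()
            if len(accounts) >= min_accounts}


# Made-up observations using documentation-only address ranges.
observations = [
    ("acct_a", "203.0.113.5"),
    ("acct_b", "203.0.113.77"),
    ("acct_c", "203.0.113.200"),
    ("acct_d", "198.51.100.9"),
]

print(shared_ranges(observations))
# {'203.0.113.0/24': ['acct_a', 'acct_b', 'acct_c']}
```

A shared range is weak evidence on its own, since schools, offices, and mobile carriers put many unrelated people behind the same addresses. That is why the case above also relied on editing style, timing, and language.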

Even high-profile users aren’t safe. In July 2025, a well-known academic with over 10,000 edits was permanently blocked after repeatedly inserting unverified claims into his own biography. He claimed he was "correcting errors," but his changes ignored multiple reliable sources. The community reviewed his edits. They agreed: this wasn’t editing. It was self-promotion disguised as scholarship.

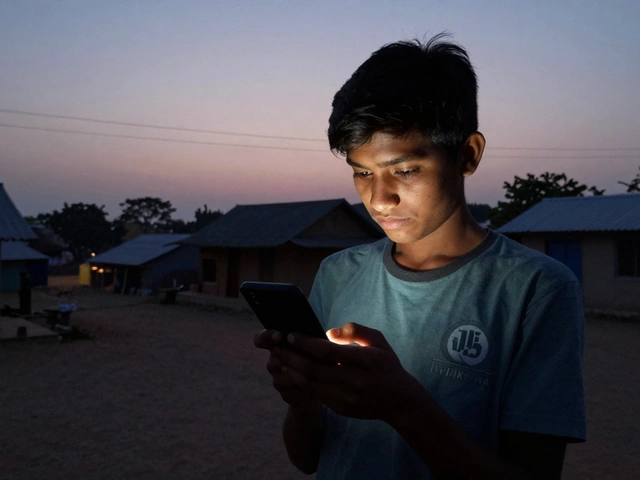

Why this matters beyond Wikipedia

Wikipedia is the most visited reference site in the world. Over 2 billion people visit it every month. When misinformation spreads there, it doesn’t stay there. It gets copied into school textbooks, news articles, and social media posts. That’s why admins treat vandalism like a public health crisis.

Studies from the University of Oxford and MIT show that Wikipedia edits influence real-world beliefs. A 2024 paper found that users who read a manipulated Wikipedia article on climate change were 37% more likely to reject scientific consensus-even after reading peer-reviewed sources elsewhere.

That’s why the recent crackdown isn’t just about rules. It’s about protecting the integrity of knowledge itself.

What happens if you’re wrongly blocked?

Wikipedia isn’t perfect. Mistakes happen. If you believe you were blocked unfairly, here’s what to do:

- Read the block notice carefully. It will tell you exactly what you did wrong.

- Go to the Appeals page. Don’t just complain; explain what you misunderstood.

- Don’t create a new account. That’s an automatic violation.

- Wait for a response. Appeals are reviewed by a different group of admins, not the ones who blocked you.

- If you’re still blocked after 30 days, you can request a formal review by the Arbitration Committee.

Most blocks are upheld. But some are lifted. In 2025, 19% of appeals resulted in partial or full reinstatement, usually when the editor showed genuine willingness to follow rules.

How regular users can help

You don’t need to be an admin to help. Here’s how anyone can contribute:

- Report persistent vandalism at the "Administrator intervention against vandalism" (AIV) noticeboard

- Use the "Recent Changes" page to spot edits that look rushed or biased

- Leave polite, sourced comments on talk pages instead of reverting edits

- Don’t engage with trolls. Blocking them is the admin’s job, not yours

- Help new editors learn the rules. A friendly tip can stop a problem before it starts

Wikipedia survives because of its community. The admins are just the last line of defense. The real power lies in millions of quiet editors who check facts, cite sources, and fix typos every day.

What’s next for Wikipedia moderation?

The next big step? Machine learning will soon predict which users are likely to become abusive before they make their first bad edit. Early tests use factors like:

- Editing history from other platforms

- Language patterns linked to hate speech

- Geolocation data and connection patterns

It’s controversial. Some worry it’s too invasive. But admins argue: if we can stop abuse before it happens, we save hours of cleanup and protect the trust of readers.

For now, the message is clear: Wikipedia is not a free-for-all. It’s a shared space built on trust, evidence, and respect. And those who break those rules are being removed faster, harder, and more permanently than ever before.

How do I know if a Wikipedia edit is vandalism?

Vandalism includes adding false information, deleting content without reason, inserting spam links, or making nonsensical changes. If an edit removes well-sourced facts, adds conspiracy theories, or looks like it was made in a hurry with no explanation, it’s likely vandalism. Check the edit history: repeated reversals or edits from new accounts are red flags.

Can I get banned for making a mistake?

No. Wikipedia encourages new editors. Mistakes are expected. Warnings are given first. You’ll only be blocked if you ignore multiple warnings, repeat the same violation, or intentionally break rules. First-time errors are usually corrected with a friendly note, not a ban.

Why do some users get banned for years while others get light warnings?

It depends on history and intent. Someone who accidentally adds a wrong date once gets a reminder. Someone who creates 50 fake accounts to push a political agenda gets a permanent ban. Admins look at patterns, not single actions. Repeat offenders, especially those who evade blocks, face much harsher consequences.

Are Wikipedia admins paid for this work?

No. Wikipedia admins are volunteers. They’re regular users who’ve earned trust through consistent, helpful editing. They don’t get paid, promoted, or given special privileges beyond technical tools such as blocking users, protecting pages, and deleting pages. Their power comes from community respect, not authority.

Can I appeal a block if I think it’s unfair?

Yes. Every block notice includes instructions to appeal. Go to the Wikipedia Appeals page and explain your side calmly and factually. Don’t argue. Don’t insult. Just state what happened and what you’ve learned. Many appeals are reviewed and overturned if the block was based on misunderstanding.

What happens to banned users’ edits?

All edits made by banned users are reviewed. Harmful or false edits are reverted. Neutral or helpful edits may be kept, even if they came from a banned user. Wikipedia values content over the person who created it. If a sentence is accurate and sourced, it stays, even if the editor is gone.

If you want to contribute to Wikipedia, start by reading the rules. Edit with care. Cite your sources. Be patient. The system works best when everyone plays fair.