Every year, thousands of academic papers cite Wikipedia as a source. But how many of them could actually be repeated by another researcher using the same steps? Most can’t. That’s not because Wikipedia is unreliable; it’s because researchers aren’t tracking what they did.

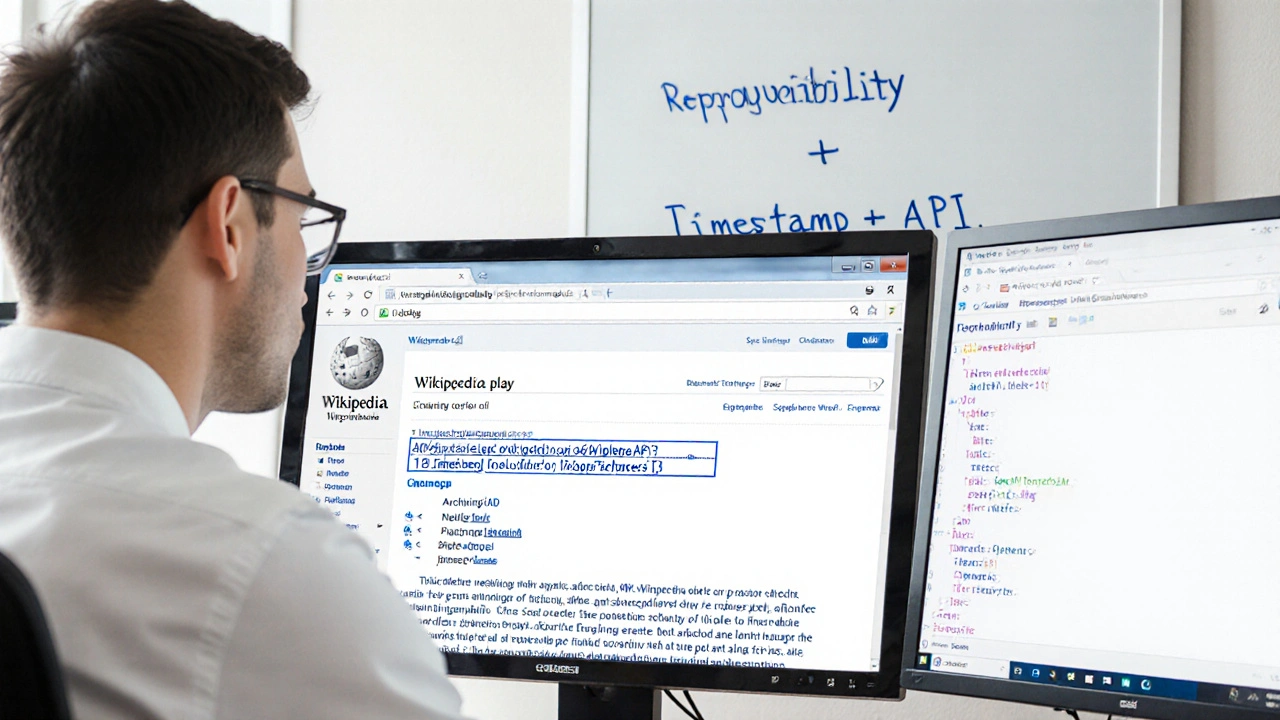

Why Reproducibility Matters in Wikipedia Research

Wikipedia isn’t a static database. Articles change every minute. A fact you pulled on March 15 might be gone by March 16. If another researcher tries to verify your findings using the same search terms or page title, they’ll get different results. That’s not a flaw in Wikipedia; it’s a feature. But it breaks reproducibility.

When a study claims that "72% of biographies on Wikipedia are incomplete," and no one else can check that number because the exact articles, timestamps, and search filters aren’t recorded, the claim becomes meaningless. Reproducibility isn’t about proving Wikipedia is perfect. It’s about proving your research is honest and transparent.

Studies from the University of Oxford and MIT have shown that over 60% of Wikipedia-based research published between 2018 and 2023 failed basic reproducibility checks. The reason? Researchers didn’t save snapshots of pages, didn’t log edit histories, and didn’t specify which version of an article they used.

What Counts as a Reproducible Wikipedia Study?

A reproducible Wikipedia study has three non-negotiable elements:

- A saved snapshot of every Wikipedia page used, with the exact URL including the revision ID.

- A timestamp for when the data was collected, down to the minute.

- A documented method for how data was extracted: which tools, scripts, or manual steps were used.

For example, if you analyzed the length of political candidate biographies, you didn’t just visit "Barack Obama" and copy-paste. You visited https://en.wikipedia.org/w/index.php?title=Barack_Obama&oldid=120456789 on April 3, 2025, at 14:22 UTC, and used Python’s Wikipedia-API to extract the word count. You saved the script and the raw HTML dump.
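As a minimal sketch of that record-keeping, the snippet below builds the permanent link from a title and revision ID and bundles it with a UTC timestamp. The function names `permalink` and `provenance_record` are hypothetical, chosen for illustration, and the revision ID is the illustrative one from the example above.

```python
from datetime import datetime, timezone
from urllib.parse import urlencode


def permalink(title: str, revision_id: int, lang: str = "en") -> str:
    """Build a permanent link to one specific revision of an article."""
    # MediaWiki titles use underscores in place of spaces.
    query = urlencode({"title": title.replace(" ", "_"), "oldid": revision_id})
    return f"https://{lang}.wikipedia.org/w/index.php?{query}"


def provenance_record(title: str, revision_id: int, accessed_at: datetime) -> dict:
    """Bundle what a reader needs to find the same version again."""
    return {
        "title": title,
        "revision_id": revision_id,
        "url": permalink(title, revision_id),
        # Store the access time in UTC, to the minute, in ISO 8601 form.
        "accessed_at_utc": accessed_at.astimezone(timezone.utc).strftime(
            "%Y-%m-%dT%H:%MZ"
        ),
    }


record = provenance_record(
    "Barack Obama",
    120456789,  # illustrative revision ID taken from the example above
    datetime(2025, 4, 3, 14, 22, tzinfo=timezone.utc),
)
print(record["url"])
print(record["accessed_at_utc"])
```

Keeping the timestamp in UTC avoids ambiguity when collaborators in other time zones check the record.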

Without that revision ID, you’re not citing Wikipedia; you’re citing a ghost.

The Most Common Pitfalls Researchers Make

Here are the top five mistakes that break reproducibility in Wikipedia research:

- Using the live site without archiving: You think you’re citing "the article," but you’re really citing whatever version is live when the reviewer checks it.

- Ignoring edit histories: A sentence you quote might have been added 12 hours before your crawl and removed 3 days later. Without tracking edits, you can’t tell if it was a typo, a vandalism fix, or a contested claim.

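- Assuming article titles are stable: Articles can be moved to a new title after a community discussion, and the old title may become a redirect or point somewhere else entirely. If you recorded only the title, your page list may no longer resolve to the pages you analyzed.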

- Not documenting tools or code: If you used a custom scraper, you must share the code. If you used a tool like WikiTaxonomy or WikiExtractor, name the version. Tools update. Code breaks.

- Sampling without transparency: Did you analyze all 50,000 biographies? Or just the first 100 that loaded? If you don’t say, your sample is unverifiable.

One 2024 study in Scientific Data found that 83% of papers using Wikipedia for quantitative analysis didn’t include a single revision ID. That’s not negligence; it’s a systemic failure.
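The sampling pitfall is the easiest of the five to close off in code. The sketch below draws a sample with a fixed, reported seed so that anyone holding the same population list can redraw the same sample. The function name `draw_sample`, the seed value, and the page IDs are all hypothetical.

```python
import random


def draw_sample(page_ids: list[int], k: int, seed: int) -> list[int]:
    """Draw k page IDs reproducibly from a population."""
    # Deduplicate and sort so the result does not depend on input order.
    population = sorted(set(page_ids))
    # A dedicated Random instance keeps the draw independent of global state.
    rng = random.Random(seed)
    return sorted(rng.sample(population, k))


# Hypothetical population of 500 page IDs; report the seed in the paper.
population = list(range(1000, 1500))
sample = draw_sample(population, k=100, seed=20250403)
print(len(sample), sample[:5])
```

The seed alone is not enough: the population list and the Python version used should be deposited too, since the sample depends on both.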

Standards That Actually Work

There are three trusted standards for making Wikipedia research reproducible:

- Use the Wayback Machine: Save every page you use at web.archive.org. Copy the archived URL and paste it into your paper. This is free, simple, and widely accepted.

- Record revision IDs: Every saved version of a Wikipedia article has a unique revision ID. In a permanent link it looks like ?oldid=123456789. Always include it. You can find it by clicking "View history" on any article, then copying the link to the exact version you used.

- Deposit your data: Upload your raw data (HTML dumps, CSV files, scripts) to a public repository like Zenodo, Figshare, or OSF. Link it in your paper. This is now required by over 200 academic journals.

These aren’t suggestions. They’re minimum requirements. The Wikipedia Research Community has published guidelines endorsed by the Wikimedia Foundation, and major universities like Stanford and Harvard now require them for thesis submissions.
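For the archiving step, a small helper can generate the Wayback Machine URLs to visit or cite. This is a sketch that assumes the commonly used Wayback URL layouts (a /save/ prefix to request a capture, /web/<timestamp>/ to address one, and the availability endpoint on archive.org); the function names are hypothetical, and the code makes no network requests itself.

```python
from datetime import datetime, timezone
from urllib.parse import urlencode


def save_page_now_url(page_url: str) -> str:
    """URL that asks the Wayback Machine to capture page_url when visited."""
    return "https://web.archive.org/save/" + page_url


def snapshot_url(page_url: str, captured_at: datetime) -> str:
    """URL addressing the capture closest to captured_at."""
    # Wayback timestamps are UTC in YYYYMMDDhhmmss form.
    stamp = captured_at.astimezone(timezone.utc).strftime("%Y%m%d%H%M%S")
    return f"https://web.archive.org/web/{stamp}/{page_url}"


def availability_query(page_url: str, near: datetime) -> str:
    """URL of the availability lookup for a capture near a given time."""
    stamp = near.astimezone(timezone.utc).strftime("%Y%m%d%H%M%S")
    query = urlencode({"url": page_url, "timestamp": stamp})
    return "https://archive.org/wayback/available?" + query


page = "https://en.wikipedia.org/w/index.php?title=Barack_Obama&oldid=120456789"
when = datetime(2025, 4, 3, 14, 22, tzinfo=timezone.utc)
print(save_page_now_url(page))
print(snapshot_url(page, when))
```

Archiving the permanent link rather than the plain article URL means the snapshot and the revision ID point at the same version.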

Tools That Make Reproducibility Easy

You don’t need to be a programmer to do this right. Here are free tools that handle the heavy lifting:

- WikiWho: Tracks who edited what and when. Great for analyzing content changes.

- Wikipedia-API (Python): Lets you pull exact revisions programmatically. Install with pip install wikipedia-api.

- Archive.today: One-click snapshotting. Works better than Wayback for dynamic pages.

- Wikidata: For structured data, use Wikidata queries instead of scraping Wikipedia. It’s stable, versioned, and designed for research.

For non-coders: Use the "Download as PDF" link on Wikipedia, then save the file with a clear filename like Obama_bio_2025-04-03.pdf. It’s not ideal, but it’s better than nothing.
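For readers who do script their collection, one option that needs no third-party package is the MediaWiki Action API, which can return a revision by its ID. The sketch below uses only the standard library. The helper names and the User-Agent string are hypothetical, and `sample_payload` is a hand-written placeholder shaped like a formatversion=2 response, not real API output.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_URL = "https://en.wikipedia.org/w/api.php"


def revision_query_url(revision_id: int) -> str:
    """Build an Action API URL requesting one revision by its ID."""
    params = {
        "action": "query",
        "prop": "revisions",
        "revids": revision_id,
        "rvprop": "ids|timestamp|content",
        "rvslots": "main",
        "format": "json",
        "formatversion": 2,
    }
    return f"{API_URL}?{urlencode(params)}"


def parse_revision(payload: dict) -> dict:
    """Pull title, revision ID, timestamp and wikitext out of a response."""
    page = payload["query"]["pages"][0]
    revision = page["revisions"][0]
    return {
        "title": page["title"],
        "revision_id": revision["revid"],
        "timestamp": revision["timestamp"],
        "wikitext": revision["slots"]["main"]["content"],
    }


def fetch_revision(revision_id: int, user_agent: str) -> dict:
    """Fetch and parse one revision (performs a network request)."""
    request = Request(
        revision_query_url(revision_id), headers={"User-Agent": user_agent}
    )
    with urlopen(request, timeout=30) as response:
        return parse_revision(json.load(response))


# Hand-written placeholder in the expected response shape (not real data).
sample_payload = {
    "query": {"pages": [{
        "pageid": 1,
        "title": "Barack Obama",
        "revisions": [{
            "revid": 120456789,
            "timestamp": "2025-04-03T14:20:00Z",
            "slots": {"main": {"content": "placeholder wikitext"}},
        }],
    }]}
}
print(parse_revision(sample_payload)["revision_id"])
```

Saving the raw JSON response alongside the parsed fields keeps the deposit independent of any later change to the parsing code.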

What Happens When You Don’t Follow These Rules?

Real consequences happen. In 2023, a paper in a peer-reviewed journal claimed that "Wikipedia articles on mental health contain more misinformation than medical websites." The journal retracted it after another researcher tried to replicate the study and found the author had used a different set of articles, with no documentation. The author admitted they hadn’t saved any snapshots.

That paper was cited 147 times before retraction. Ten other studies built on it. All of them are now suspect.

Wikipedia isn’t the problem. Bad research practices are.

How to Fix Your Own Research

If you’re working on a Wikipedia-based project right now, here’s what to do today:

- Go to every Wikipedia page you used.

- Click "View history."

- Find the version you used and copy the revision ID.

- Save a snapshot using Archive.today or Wayback Machine.

- Write down the date and time you accessed it.

- Save your code or scripts in a public folder.

It takes five minutes per page. But it turns your research from guesswork into something others can trust.
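The checklist above maps naturally onto one row per page in a log file. The following sketch writes such a log as CSV and adds a SHA-256 checksum of the saved snapshot so later readers can confirm the file is unchanged. The column names, helper names, and the example row are all hypothetical.

```python
import csv
import hashlib
import io

FIELDS = ["title", "revision_id", "snapshot_url", "accessed_at_utc", "sha256"]


def log_row(title, revision_id, snapshot_url, accessed_at_utc, raw_bytes):
    """Describe one archived page, including a checksum of the saved file."""
    return {
        "title": title,
        "revision_id": revision_id,
        "snapshot_url": snapshot_url,
        "accessed_at_utc": accessed_at_utc,
        "sha256": hashlib.sha256(raw_bytes).hexdigest(),
    }


def write_log(rows, out) -> None:
    """Write the rows as CSV with a header line."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


# Illustrative row; in practice raw_bytes would be the saved HTML file.
snapshot_bytes = b"<html>placeholder snapshot</html>"
row = log_row(
    "Barack Obama",
    120456789,
    "https://web.archive.org/web/20250403142200/"
    "https://en.wikipedia.org/w/index.php?title=Barack_Obama&oldid=120456789",
    "2025-04-03T14:22Z",
    snapshot_bytes,
)
buffer = io.StringIO(newline="")
write_log([row], buffer)
print(buffer.getvalue())
```

Writing to an open file instead of the in-memory buffer gives a log that can be deposited with the scripts.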

Reproducibility isn’t about being perfect. It’s about being honest about what you did and giving others the chance to check it.

Why can’t I just cite the regular Wikipedia URL without the revision ID?

Because Wikipedia pages change constantly. A URL like https://en.wikipedia.org/wiki/Climate_change points to the current version, not the one you saw. Without a revision ID, you’re not citing a specific version; you’re citing a moving target. Reviewers and other researchers can’t verify your claims if they see different content. The revision ID locks your source in time.
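A quick way to audit a reference list is to check each Wikipedia URL for a revision ID. The sketch below is one hedged way to do that with the standard library. The function name `revision_id_of` is hypothetical, and it only recognizes the oldid query parameter, not other permanent-link forms.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse


def revision_id_of(url: str) -> Optional[int]:
    """Return the revision ID pinned by a URL, or None if it is unpinned."""
    values = parse_qs(urlparse(url).query).get("oldid", [])
    if values and values[0].isdigit():
        return int(values[0])
    return None


citations = [
    "https://en.wikipedia.org/wiki/Climate_change",
    "https://en.wikipedia.org/w/index.php?title=Barack_Obama&oldid=120456789",
]
for url in citations:
    status = "pinned" if revision_id_of(url) is not None else "MOVING TARGET"
    print(f"{status}: {url}")
```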

Is it okay to use Wikipedia as a primary source in academic research?

Wikipedia is not a primary source; it’s a tertiary source that summarizes other published work. But that doesn’t mean it can’t be the subject of research. You can study how Wikipedia covers topics, how content evolves, or what biases appear in its articles. Just don’t cite Wikipedia as evidence for a scientific fact. Cite the original sources Wikipedia links to. Your research question should be about Wikipedia itself, not the topic it describes.

What if I’m using a lot of pages-do I really need to archive every single one?

Yes, if you’re doing quantitative analysis. If you sampled 500 articles, you need 500 snapshots. If you’re doing qualitative research on 10 articles, archive all 10. There’s no shortcut. If you skip this, your findings aren’t reproducible. Tools like WikiExtractor can automate bulk exports. The work is upfront, but it saves you from having your paper retracted later.
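Bulk work is less painful when revision IDs are requested in batches. The sketch below groups IDs and builds one MediaWiki Action API URL per group. It assumes a limit of 50 IDs per request for ordinary clients, which should be checked against the current API documentation. The helper names and the revision IDs are hypothetical.

```python
from urllib.parse import urlencode

API_URL = "https://en.wikipedia.org/w/api.php"


def chunk(ids: list[int], size: int = 50) -> list[list[int]]:
    """Split a list of IDs into consecutive groups of at most `size`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [ids[i:i + size] for i in range(0, len(ids), size)]


def batch_query_urls(revision_ids: list[int], size: int = 50) -> list[str]:
    """Build one metadata request URL per group of revision IDs."""
    urls = []
    for group in chunk(revision_ids, size):
        params = {
            "action": "query",
            "prop": "revisions",
            # Multiple values are separated with a pipe character.
            "revids": "|".join(str(rev) for rev in group),
            "rvprop": "ids|timestamp",
            "format": "json",
            "formatversion": 2,
        }
        urls.append(f"{API_URL}?{urlencode(params)}")
    return urls


# Hypothetical revision IDs for a 500-article sample.
urls = batch_query_urls(list(range(1, 501)))
print(len(urls))
```

With 500 articles this yields ten request URLs rather than five hundred, and the list of URLs itself can be deposited as part of the method.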

Can I use Wikipedia data without violating copyright?

Yes. Wikipedia content is licensed under CC BY-SA 4.0, which allows reuse as long as you give credit and share any derivative work under the same license. You must cite Wikipedia and link to the license. If you modify the text or extract data for analysis, you still need to credit it. Most academic publishers accept this. Just include a footnote: "Data extracted from Wikipedia, licensed under CC BY-SA 4.0."

What’s the difference between Wikidata and Wikipedia for research?

Wikipedia is for human-readable articles. Wikidata is a structured database behind it. If you need to analyze things like birth dates, locations, or relationships between people, Wikidata is better. It’s versioned, machine-readable, and has APIs designed for research. Wikipedia is great for text analysis. Wikidata is better for numbers. Use both when needed.
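To make the Wikidata route concrete, here is a sketch of a query for people and their birth dates, wrapped in Python so it stays in one language. It assumes the public Wikidata Query Service endpoint and the standard SPARQL JSON results layout. The helper names are hypothetical, and `sample_payload` is a hand-written placeholder in that layout rather than a real response.

```python
from urllib.parse import urlencode

SPARQL_ENDPOINT = "https://query.wikidata.org/sparql"

# P31 = instance of, Q5 = human, P569 = date of birth.
QUERY = """
SELECT ?person ?birth WHERE {
  ?person wdt:P31 wd:Q5 ;
          wdt:P569 ?birth .
}
LIMIT 10
"""


def query_url(sparql: str) -> str:
    """Build a GET URL that asks the endpoint for JSON results."""
    return f"{SPARQL_ENDPOINT}?{urlencode({'query': sparql, 'format': 'json'})}"


def flatten_bindings(payload: dict) -> list[dict]:
    """Reduce SPARQL JSON results to plain {variable: value} rows."""
    return [
        {name: cell["value"] for name, cell in row.items()}
        for row in payload["results"]["bindings"]
    ]


# Hand-written placeholder in the SPARQL JSON results shape.
sample_payload = {
    "head": {"vars": ["person", "birth"]},
    "results": {"bindings": [{
        "person": {"type": "uri",
                   "value": "http://www.wikidata.org/entity/Q76"},
        "birth": {"type": "literal", "value": "1961-08-04T00:00:00Z"},
    }]},
}
print(flatten_bindings(sample_payload))
```

A LIMIT without an ORDER BY does not guarantee the same rows on every run, and Wikidata itself keeps changing, so the query text, the run time, and the raw response all belong in the deposit.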

Next Steps for Researchers

Start small. Pick one article you’re citing. Archive it now. Save the revision ID. Add it to your reference list. Do that for every source. In a week, you’ll have a habit that makes your work stronger.

Ask your advisor or journal editor: "Do you require revision IDs for Wikipedia citations?" If they say no, show them the 2023 retraction case. If they say yes, you’re already ahead.

Reproducibility isn’t about perfection. It’s about integrity. And in a world where misinformation spreads fast, being able to prove what you did is the only defense you have.