Research on Wikipedia: What Scholars Are Learning About the World's Largest Encyclopedia

Research on Wikipedia is the systematic study of how knowledge is created, contested, and maintained on the world’s largest online encyclopedia. Also known as Wikipedia scholarship, it’s not just about counting edits; it’s about understanding how trust is built when editors aren’t paid, how bias slips in despite good intentions, and why millions of people still rely on it more than academic journals. This isn’t theoretical. Real people, including students, librarians, journalists, and activists, are shaping what shows up on the screen, and researchers are watching closely to figure out how it all works.

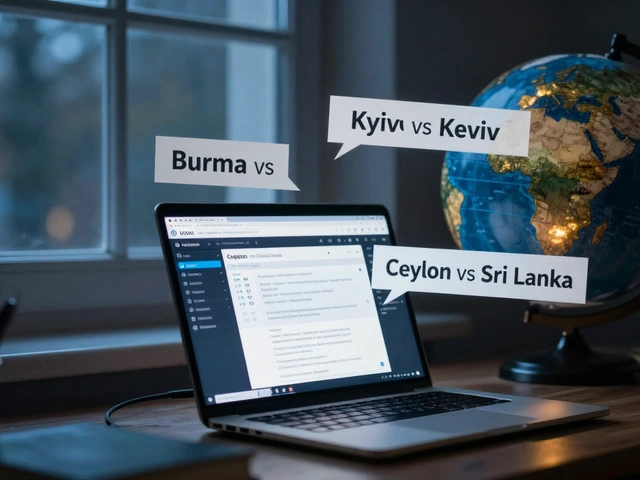

At the center of this work are Wikipedia scholars, academics who study the platform’s structure, community, and impact on public knowledge. Also known as Wikipedia researchers, they’ve uncovered that the top 1% of editors produce nearly half of all content. That’s not because they’re experts; it’s because they’re persistent. Then there is the Wikipedia community, the network of volunteer editors, admins, and bots who enforce rules, resolve disputes, and maintain quality. Also known as Wikipedia editors, they’re the ones who catch vandalism, debate neutrality, and fight for reliable sourcing. Without them, Wikipedia wouldn’t just be flawed; it would be useless. Finally, there’s Wikipedia editing, the act of adding, fixing, or removing content, which seems simple but involves complex social and technical systems. Also known as contributing to Wikipedia, it’s where policy meets practice: a student rewriting a section for class credit, a librarian adding citations from local archives, or a bot fixing broken links every minute.

These aren’t isolated pieces. Research shows that Wikipedia editing is shaped by the community’s rules, which are in turn shaped by the scholars who study them. For example, studies on conflict of interest revealed that paid editors often hide their ties—so the policy got tighter. Research on mobile editing showed that most new contributors use phones, so the interface changed. Even AI misinformation is being fought using Wikipedia’s sourcing model, because it’s the only large-scale system that forces verifiable evidence over confidence. This is why research on Wikipedia matters: it doesn’t just describe the platform—it fixes it.

What you’ll find below isn’t a list of academic papers. It’s a collection of real stories from inside the system: how bots keep spam out, how librarians quietly improve articles, how A/B tests change what you click on without you noticing, and how editors in Ukraine or Nigeria fight to keep their history visible. These aren’t abstract ideas—they’re daily battles over truth, access, and control. And they’re happening right now, in real time, on a site you probably use every week.

How Signposts Guide Academic Research on Wikipedia

Wikipedia signposts guide researchers to reliable information by flagging gaps in citations, bias, or quality. Learn how these community tools help academic work and how to use them effectively.