Vandalism Reversion: How Wikipedia Stops Edit Sabotage

When someone tries to delete a page, add nonsense, or insert false information on Wikipedia, vandalism reversion is the response: the process of undoing harmful edits to protect content integrity. Also known as reverting vandalism, it’s the frontline defense that keeps Wikipedia reliable, even with millions of anonymous edits every day. This isn’t just about deleting bad content. It’s about speed, consistency, and community trust. Every minute a false edit stays up, it can mislead readers, spread misinformation, or damage reputations. That’s why reversion isn’t optional; it’s built into the system.

Wikipedia doesn’t rely on paid staff to catch this stuff. It relies on volunteers: editors who monitor recent changes, patrol new edits, and run bots that scan for obvious spam or profanity. Anti-vandalism tools, automated systems that flag or block suspicious edits in real time, work alongside human eyes. Some bots can undo a vandalized edit within seconds. Others learn from past patterns, getting smarter over time. Meanwhile, experienced editors track repeat offenders, issue warnings, and, when things get out of hand, escalate to Wikipedia administrators, trusted volunteers with extra powers to block users and protect pages. These aren’t secret operations. The whole process is public. You can see every reversion in the edit history, and anyone can review why an edit was removed.
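
To make the idea of a bot scanning for "obvious spam or profanity" concrete, here is a minimal Python sketch of rule-based flagging. It is not how any real Wikipedia bot works. The function name `vandalism_signals`, the word list, and both thresholds are hypothetical, chosen only for illustration, and real tools weigh many more signals.

```python
import re

# Hypothetical word list and thresholds, chosen only for illustration.
BAD_WORDS = {"stupid", "idiot", "poop"}
BLANKING_RATIO = 0.1                      # new text under 10% of the old length
REPEATED_CHARS = re.compile(r"(.)\1{9,}")  # one character repeated 10+ times
WORD = re.compile(r"[a-z']+")


def vandalism_signals(old_text: str, new_text: str) -> list[str]:
    """Return the names of the heuristic signals that an edit triggers."""
    signals = []

    # Signal 1: most of the page was removed.
    if old_text and len(new_text) < BLANKING_RATIO * len(old_text):
        signals.append("blanking")

    # Signal 2: a listed word appears in the new text but not in the old text.
    old_words = set(WORD.findall(old_text.lower()))
    new_words = set(WORD.findall(new_text.lower()))
    if (new_words - old_words) & BAD_WORDS:
        signals.append("profanity")

    # Signal 3: keyboard mashing that was not already on the page.
    if REPEATED_CHARS.search(new_text) and not REPEATED_CHARS.search(old_text):
        signals.append("repeated_chars")

    return signals


if __name__ == "__main__":
    page = "The Eiffel Tower is a wrought-iron lattice tower in Paris, France. " * 5
    print(vandalism_signals(page, "lol"))
    print(vandalism_signals(page, page + " Paris is stupid"))
    print(vandalism_signals(page, page + " It opened in 1889."))
```

An empty result does not mean an edit is fine, and a non-empty one does not prove vandalism. In a setup like this, flagged edits would go to a human reviewer, which matches the "alongside human eyes" arrangement described above.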

It’s not always simple. Sometimes, what looks like vandalism is actually a well-intentioned but misguided edit. Other times, editors disagree over content and turn it into an edit war, a cycle of back-and-forth reversions between opposing editors. That’s when policies kick in: discussion pages, mediation, and protection rules. The goal isn’t to win arguments—it’s to protect the article from being turned into a battleground. The system works because it’s transparent. You don’t need to be an expert to see what happened. You just need to check the history.
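
Because the full history is public, back-and-forth reversions can be spotted mechanically. The sketch below is one simple approach, assuming a revert can be recognized as a revision whose text exactly matches an earlier state of the page. The `Revision` class, the function names, and the `min_reverts_each` threshold are hypothetical and do not reflect Wikipedia's actual policy or tooling.

```python
import hashlib
from collections import Counter
from dataclasses import dataclass


@dataclass
class Revision:
    user: str
    text: str


def find_reverts(history: list[Revision]) -> list[tuple[int, str]]:
    """Return (index, user) for each revision that restores an earlier page state."""
    seen = set()       # content hashes of every page state seen so far
    reverts = []
    prev_digest = None
    for i, rev in enumerate(history):
        digest = hashlib.sha1(rev.text.encode("utf-8")).hexdigest()
        # A revert brings back content that existed before and was changed since.
        if digest in seen and digest != prev_digest:
            reverts.append((i, rev.user))
        seen.add(digest)
        prev_digest = digest
    return reverts


def looks_like_edit_war(history: list[Revision], min_reverts_each: int = 2) -> bool:
    """Flag a history where at least two users have each reverted repeatedly."""
    counts = Counter(user for _, user in find_reverts(history))
    repeat_reverters = [u for u, n in counts.items() if n >= min_reverts_each]
    return len(repeat_reverters) >= 2


if __name__ == "__main__":
    war = [Revision(u, t) for u, t in
           [("A", "v1"), ("B", "v2"), ("A", "v1"), ("B", "v2"), ("A", "v1"), ("B", "v2")]]
    print(find_reverts(war))
    print(looks_like_edit_war(war))
```

A single cleanup, such as a good version, a bad edit, then the good version again, counts as one revert and is not flagged. Only repeated reverts by two or more users trip the check, which is the pattern the edit war definition above describes.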

Behind every clean Wikipedia page is a quiet army of reversion actions. Thousands happen daily. Most go unnoticed. But when they fail—when a hoax slips through, or a false claim goes viral—it’s because the system was overwhelmed, not broken. That’s why tools keep improving, why training for new editors focuses on spotting bad edits early, and why the community keeps refining its rules. This isn’t about censorship. It’s about keeping knowledge honest.

Below, you’ll find real examples of how Wikipedia handles sabotage, what tools make reversion faster, and how volunteers stay one step ahead of those trying to break it.

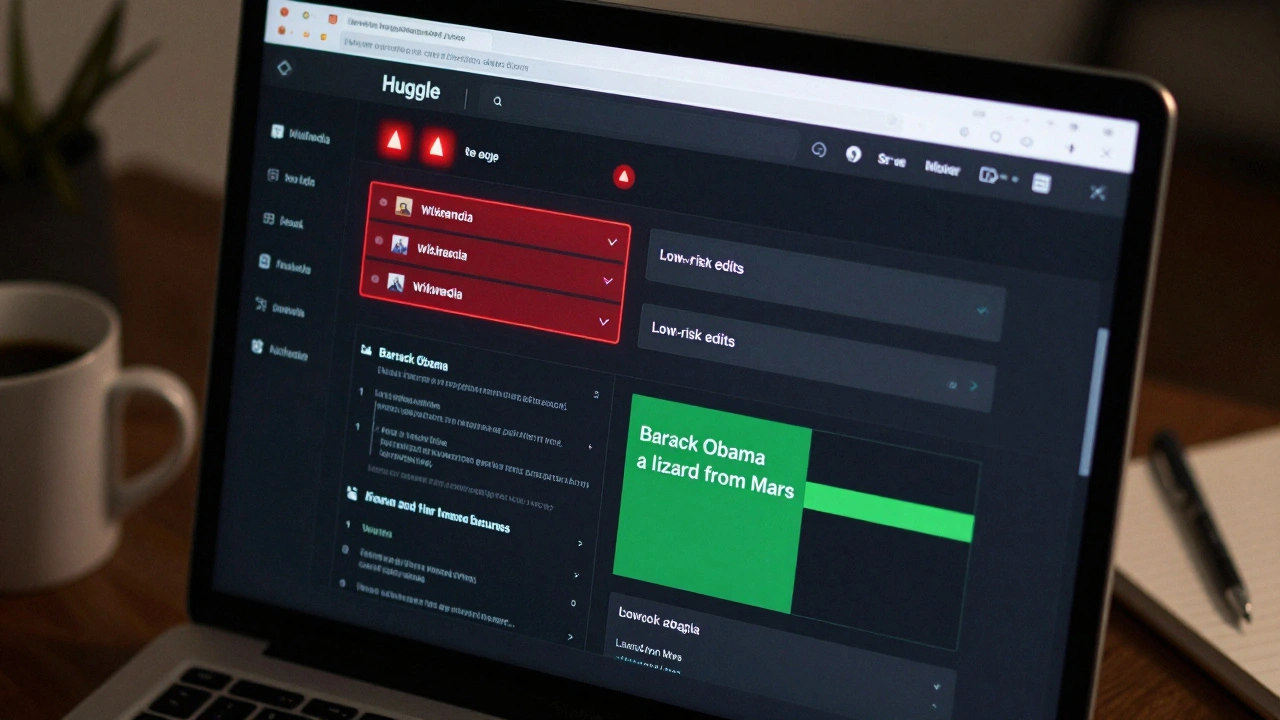

Huggle for Wikipedia: Fast Vandalism Reversion Workflow

Huggle is a fast desktop application, a diff browser, used by Wikipedia volunteers to quickly identify and revert vandalism. It filters out noise and highlights suspicious edits in real time, letting users revert spam and malicious changes in seconds.