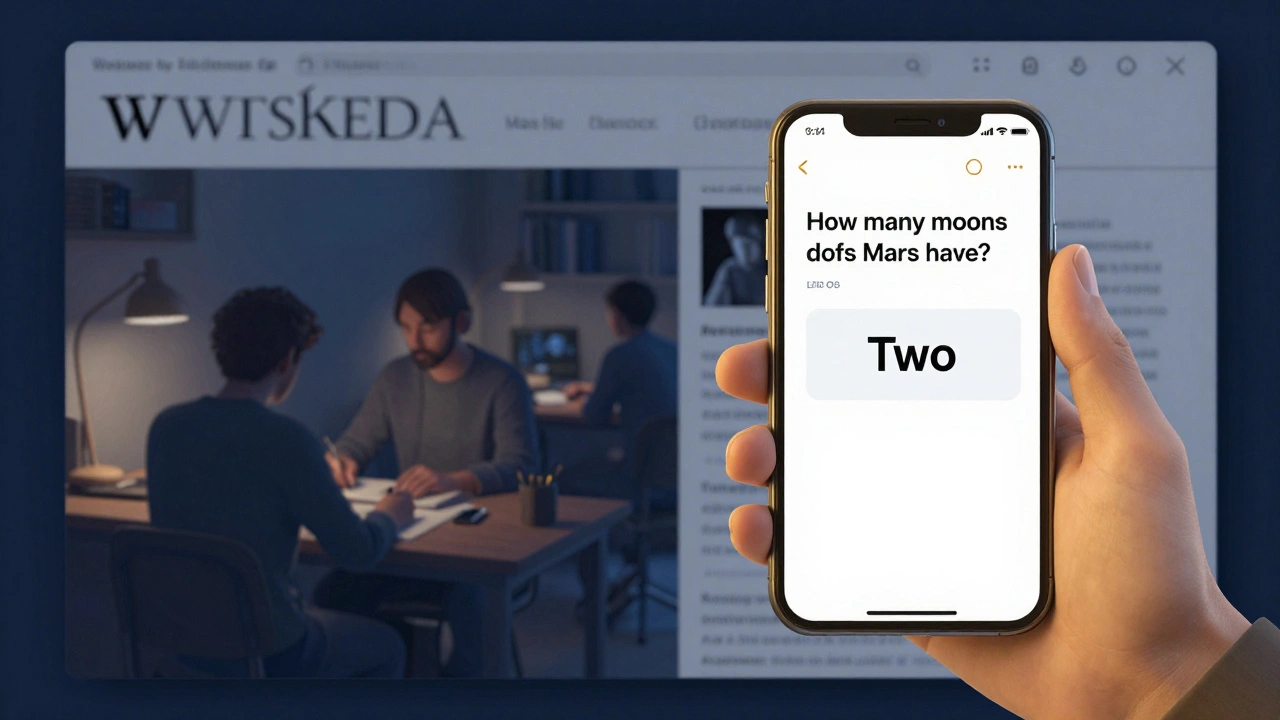

When you ask Google a question like "How many moons does Mars have?", you don’t click through to Wikipedia anymore. You get the answer right on the search page: two. No scrolling, no ads, no navigation. It’s fast. It’s convenient. But what happens to the websites that used to get all that traffic?

The Quiet Decline of Wikipedia Clicks

Wikipedia saw its highest traffic in 2021, with over 1.7 billion unique visitors per month. By late 2024, that number had dropped by 18%, not because people stopped using it, but because they stopped clicking into it. The same questions that once led millions to Wikipedia pages now get answered by AI-powered snippets from Google, Bing, and Perplexity. These answers come from training data that includes Wikipedia, but they don’t send users back to the source.

It’s not just minor questions. Even complex ones, such as "What is the cause of climate change?" or "How does the human immune system work?", are now answered in a single paragraph. Wikipedia’s article on climate change still gets 2.3 million views a month. But in 2020, that same article got 3.9 million. The drop isn’t random. It lines up with when AI answers became mainstream on major search engines.
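To put the climate change figures in perspective, here is a minimal sketch of the arithmetic. It uses only the two view counts quoted above; the function name `percent_drop` is just an illustrative helper.

```python
def percent_drop(before: float, after: float) -> float:
    """Return the relative decline from `before` to `after`, as a percentage."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Monthly views of the climate change article, as quoted in the text.
views_2020 = 3_900_000
views_now = 2_300_000

drop = percent_drop(views_2020, views_now)
print(f"Decline: {drop:.1f}%")  # prints: Decline: 41.0%
```

In other words, that one article lost roughly two out of every five monthly views, a much steeper fall than the 18% site-wide figure.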

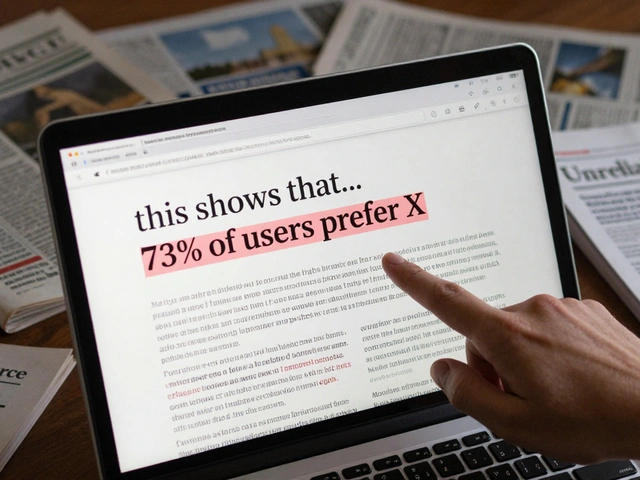

How AI Answers Work, and Why They Steal Traffic

AI answers aren’t just summaries. They’re synthesized responses pulled from dozens of sources, then rewritten in plain language. Google’s AI Overviews, for example, use models trained on public data, including Wikipedia, Reddit, and academic papers. The system picks the most confident, concise answer and displays it at the top of the page. No link. No attribution. Just the answer.

This is called traffic displacement. It’s not theft, technically. The AI didn’t copy the whole article. But it took the value (the knowledge) and gave it away without sending users to the original creator. For Wikipedia, which relies on donations and doesn’t run ads, this is a quiet crisis. Every click counts. Every visit helps fund servers, editors, and translation tools. Fewer clicks mean fewer donations.

Wikipedia’s own data shows that 70% of its traffic comes from search engines. And of that, over half used to come from queries that are now answered directly by AI. That’s not a small fraction. That’s millions of visits disappearing each month.

Who Benefits? Who Loses?

The winners are obvious: Google, Microsoft, and other tech giants. They keep users on their platforms longer. They collect more data. They show more ads. They control the flow of information.

The losers? The creators. Wikipedia volunteers. Independent journalists. Small educational sites. These are the people who spend hours verifying facts, citing sources, and writing clear explanations. They don’t get paid. They don’t get traffic. And now, they don’t even get credit.

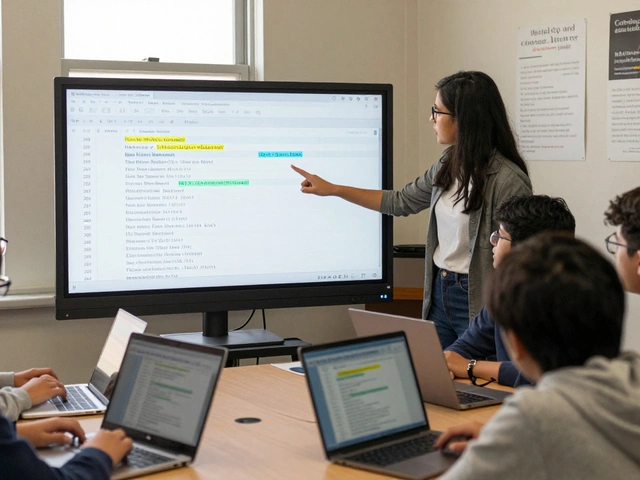

Here’s a real example: In 2023, a Wikipedia editor named Maria from Toronto spent six months expanding the article on "Neuroplasticity in children". She added peer-reviewed studies, translated foreign research, and cleaned up outdated claims. The article became one of the most cited on the topic. In 2022, it got 1.1 million views. In 2025, it got 380,000. Why? Because Google’s AI now pulls the key points and displays them as a standalone answer. No link. No attribution. No thanks.

Wikipedia’s Response: Silence, Then Strategy

Wikipedia has been quiet about this. No press releases. No public outcry. That’s because they can’t afford to fight Google. They depend on Google for traffic. They don’t have legal standing to demand attribution. And they can’t compete with AI speed.

But behind the scenes, they’ve started testing small changes. In late 2024, Wikipedia began adding structured data tags to high-traffic articles, using Schema.org markup to help AI systems recognize them as authoritative sources. They’re also working with academic libraries to push for citation standards in AI responses. If an AI says "According to Wikipedia," at least users might know where to look.
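If you’re wondering what Schema.org markup looks like, here is a rough sketch that builds a JSON-LD snippet in Python. The article doesn’t say which Schema.org types or properties Wikipedia chose, so the generic `Article` type and the fields below are assumptions for illustration, and `article_jsonld` is a hypothetical helper, not anything Wikipedia ships.

```python
import json


def article_jsonld(headline: str, url: str) -> str:
    """Build a Schema.org Article description as a JSON-LD string.

    The choice of type and properties is an assumption for illustration.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "url": url,
        "publisher": {"@type": "Organization", "name": "Wikipedia"},
        "license": "https://creativecommons.org/licenses/by-sa/4.0/",
        "isAccessibleForFree": True,
    }
    return json.dumps(data, indent=2)


markup = article_jsonld(
    "Climate change", "https://en.wikipedia.org/wiki/Climate_change"
)
# JSON-LD is usually embedded in a page inside a script tag like this one.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Markup like this tells a crawler, in machine-readable form, who published a page and under what license. Whether an AI system actually uses it to credit the source is up to that system.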

They’ve also quietly launched a campaign called "Click to Learn More" on their mobile app. When an AI answer pulls from a Wikipedia article, the app now shows a small banner: "This answer is based on Wikipedia. Read the full article." It’s not perfect. But it’s a start.

What This Means for the Future of Knowledge

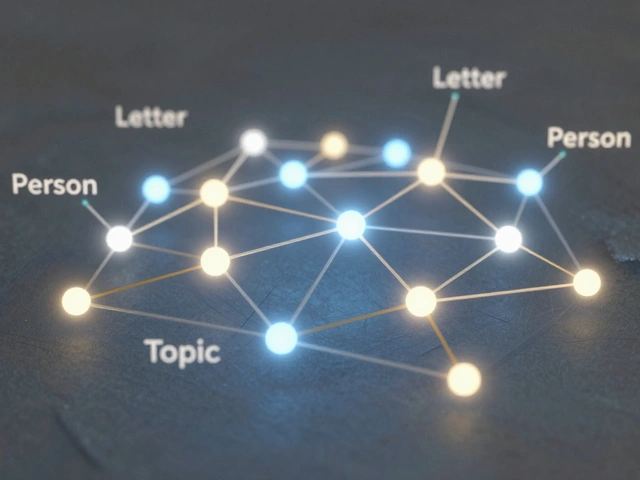

The real danger isn’t that AI answers are wrong. Most of them are accurate, sometimes more accurate than Wikipedia’s older edits. The danger is that we stop seeing knowledge as something built by people. We stop valuing depth. We stop clicking through. We stop learning how to verify.

When you get a one-sentence answer, you don’t learn about the debate around it. You don’t see the citations. You don’t find the counterarguments. You don’t discover related topics. That’s how misinformation spreads: not because AI lies, but because it simplifies too much.

Think about it: If you ask "Why is the sky blue?", an AI might say: "Rayleigh scattering causes shorter blue wavelengths to scatter more." That’s correct. But if you’d clicked through to Wikipedia, you’d have read about Lord Rayleigh, the history of the theory, how it applies to sunsets, and why Mars has a reddish sky. That’s context. That’s understanding.

What You Can Do

You don’t have to accept this as inevitable. Here’s what you can do right now:

- When you get an AI answer, ask: "Where did this come from?" Then search for the source.

- Use Wikipedia as your second step, not your last resort.

- Support Wikipedia directly. Even $5 helps keep the servers running.

- Share articles you find on Wikipedia. Link to them. Talk about them.

- Teach kids to click through. Show them how to read beyond the first line.

Knowledge isn’t just data. It’s a community effort. And if we stop visiting the places that build it, we won’t have anything left to learn from.

Frequently Asked Questions

Do AI answers make Wikipedia obsolete?

No. AI answers are summaries, not replacements. Wikipedia still holds some of the most comprehensive, cited, and community-reviewed information online. AI pulls from it but doesn’t improve on it. If Wikipedia disappeared, AI answers would lose one of their most reliable sources. They need Wikipedia more than Wikipedia needs them.

Why doesn’t Wikipedia block AI from scraping its content?

Because Wikipedia is built on openness. Blocking AI would go against its core mission. Also, most AI tools follow robots.txt rules. Even if they didn’t, Wikipedia can’t afford legal battles with Google. Instead, they focus on encouraging attribution and improving structured data so AI systems know where to credit them.
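For readers curious what "following robots.txt" means in practice, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The rules and the crawler name `ExampleAIBot` are hypothetical, made up for illustration; they are not Wikipedia’s actual robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration, NOT Wikipedia's real robots.txt.
RULES = [
    "User-agent: ExampleAIBot",
    "Disallow: /",
    "",
    "User-agent: *",
    "Allow: /",
]


def allowed(user_agent: str, url: str) -> bool:
    """Check whether `user_agent` may fetch `url` under RULES."""
    parser = RobotFileParser()
    parser.parse(RULES)
    return parser.can_fetch(user_agent, url)


page = "https://example.org/wiki/Mars"
print(allowed("ExampleAIBot", page))     # prints: False
print(allowed("OrdinaryBrowser", page))  # prints: True
```

A well-behaved crawler runs a check like this before each request. Nothing in the protocol enforces the answer, though: robots.txt is a request, not a lock.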

Are AI answers more accurate than Wikipedia?

For simple facts, sometimes yes. But for complex topics, Wikipedia is far more reliable. AI answers can hallucinate, blend sources incorrectly, or omit key context. A 2024 Stanford study found that AI answers were factually correct 89% of the time on basic questions, but only 67% on nuanced topics. Wikipedia’s accuracy rate, after edits and moderation, is above 95%.

Can I tell if an AI answer came from Wikipedia?

Not always. But if the answer sounds like a Wikipedia summary (neutral tone, clear structure, studies cited without hype), it likely came from there. Look for phrases like "according to research," "as of 2023," or "the consensus is." These are signs of Wikipedia-style writing. Try pasting a sentence into Google in quotes. If it leads to a Wikipedia page, that’s your source.

What happens if Wikipedia traffic keeps falling?

If donations drop, Wikipedia may have to reduce its language support, cut volunteer tools, or slow down updates. Some language versions might disappear. The encyclopedia could become less diverse, less up to date, and less reliable. It’s not a hypothetical. The Italian and Polish versions already reduced translation projects in 2024 due to funding gaps. This isn’t just about one site. It’s about the future of free, open knowledge.