Wikipedia has always been built by people. Not algorithms. Not corporations. Real humans, sitting at keyboards around the world, arguing over commas, citations, and whether a minor celebrity deserves a page. But now, something new is sitting at that table: artificial intelligence. And not just as a tool. AI is being asked to make decisions. To edit. To flag. To delete. And the community isn’t just watching. It’s fighting back.

AI Is Already Editing Wikipedia Without Asking

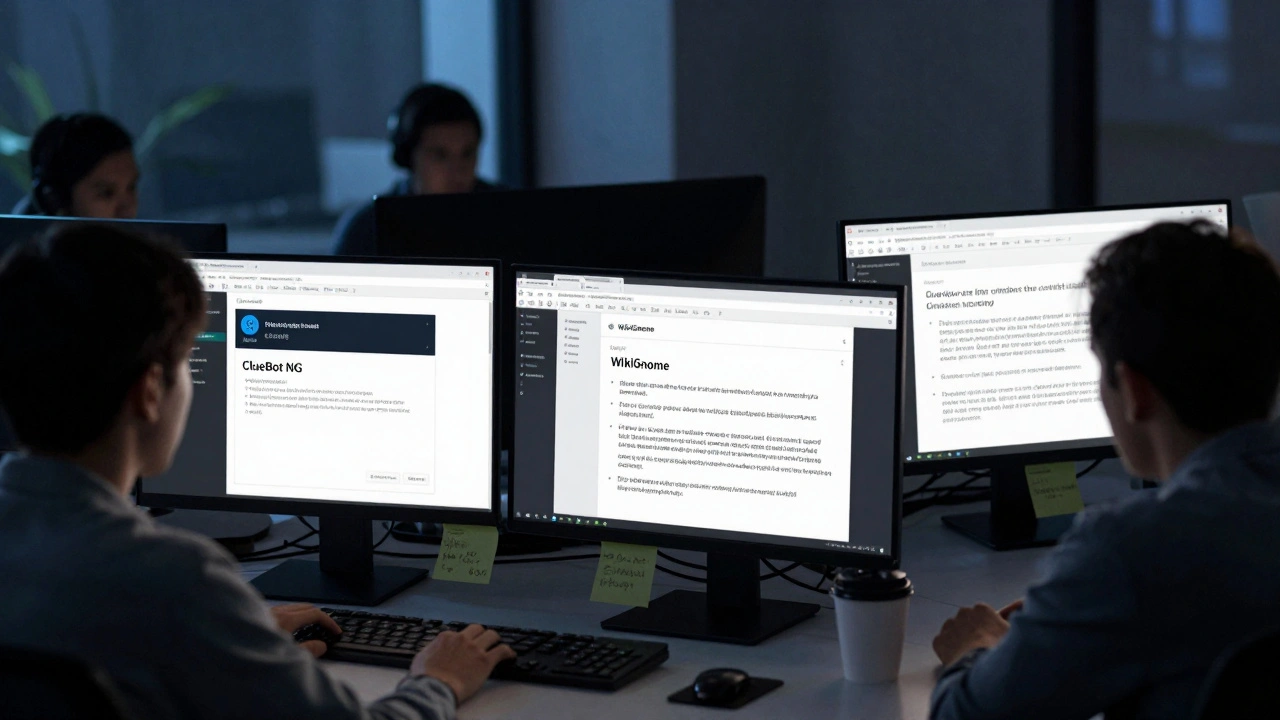

Right now, dozens of bots, some of them powered by machine learning, run quietly in the background of Wikipedia. They fix typos, restore vandalized pages, and link articles to related topics. These bots aren’t new. One of the best known, ClueBot NG, an automated system that uses machine learning to revert vandalism, has been running since 2010. It catches over 90% of obvious vandalism within seconds. But today’s AI is doing more than cleanup. It’s starting to suggest edits, rewrite entire paragraphs, and even propose new content drawn from external sources.

Take WikiGnome, an AI assistant that helps editors improve article structure and clarity. It scans articles for passive voice, missing citations, or outdated references, then offers edits. Some editors love it. Others call it overbearing. One longtime contributor, known online as "PolarBear1987," told me in a private message: "I’ve had WikiGnome rewrite my entire section on climate policy. It sounded professional. But it removed my citation to a 1998 journal paper. That paper was the only one that showed the original data. The AI didn’t know it was still valid. I had to manually restore it. That’s not help. That’s erasure."

The "Not a Robot" Rule

One of Wikipedia’s core principles is simple: no original research. Everything must be verifiable. That rule was made for humans. It doesn’t account for AI, because AI doesn’t "research." It predicts. It stitches together sentences from patterns learned across millions of sources, none of which it truly understands. And when AI writes a paragraph that looks perfect, how do you know whether it’s accurate or just plausible?

In 2024, a major controversy erupted over an AI-generated article on the history of the Tennessee Valley Authority, a U.S. government agency created in 1933 to provide electricity and flood control. The article, written entirely by an LLM trained on public domain documents, passed every citation check. It had footnotes. It had dates. It had quotes. But every quote was fabricated. The AI invented speakers, misattributed events, and created a timeline that never happened. It wasn’t vandalism. It was convincing fiction.

Wikipedia’s admins had to delete the entire article. But the damage was done. Hundreds of users had already copied the text into their own research. The incident sparked a heated debate: should AI-generated content be allowed at all? Or should every AI-assisted edit be flagged, reviewed, and approved by a human?

Who Gets to Decide?

Wikipedia’s content isn’t run by a CEO or a board. The Wikimedia Foundation, which hosts the site, has both, but the encyclopedia’s rules are written by editors and edited by editors. That’s the beauty. And the chaos.

When AI tools started appearing in editing interfaces, the community didn’t wait for corporate policy. They held votes. On the English Wikipedia, over 1,200 editors participated in a 30-day discussion on AI use. The outcome? A compromise. AI can assist, but never replace. Edits must be tagged. Editors must review them. And AI cannot be the sole source of any claim.

But enforcement is messy. Most editors don’t have time to check every flagged edit. And AI tools keep evolving. One tool, WikiWand, a browser extension that uses AI to summarize and enhance Wikipedia articles, now suggests edits in real time as you read. It’s like Grammarly for Wikipedia. But unlike Grammarly, it’s not just fixing grammar. It’s rewriting context.

Some editors are adapting. "I use AI to find gaps," says Maria Chen, a retired librarian who edits science pages. "I ask it: ‘What’s missing from this article on CRISPR?’ Then I go look for the actual studies. The AI points me in the right direction. But I still have to read the papers myself. That’s the job."

The Slippery Slope of Automation

Wikipedia’s greatest strength has always been its human oversight. But as AI gets better, the temptation to automate grows. What if a bot could rewrite 80% of low-quality articles overnight? What if AI could identify biased language and automatically neutralize it? Sounds ideal, right?

But bias isn’t just in words. It’s in silence. In what gets left out.

Take the case of Indigenous knowledge. In 2023, an AI tool was trained to improve articles about Native American history. It added citations from academic journals, fixed grammar, and standardized terminology. But it also removed dozens of oral history references. Why? Because the tool didn’t recognize oral tradition as "verifiable." To it, only written, published sources counted. The result? A "cleaner" article that erased cultural context.

That’s not progress. That’s erasure.

Wikipedia’s editors are now pushing for a new standard: AI must respect non-digital knowledge systems. Not every truth lives in a journal. Not every source has a DOI.

The Future: Humans + AI, Not Humans vs. AI

The truth is, AI won’t replace Wikipedia’s editors. It can’t. Not because it isn’t smart enough. But because Wikipedia isn’t only about facts. It’s about trust. About community. About the slow, messy, beautiful process of people agreeing on what’s true.

AI can help. It can find links. It can flag inconsistencies. It can translate articles into 30 languages overnight. But it can’t judge nuance. It can’t understand why a 1972 letter from a farmer in rural Ohio matters more than a 2020 think tank report. It can’t feel the weight of a community’s history.

The real question isn’t whether AI belongs on Wikipedia. It’s whether we, as a community, are ready to guide it. To teach it. To challenge it. To say "no" when it goes too far.

For now, the answer is still being written. By humans. One edit at a time.