This month, Wikipedia’s editing backlog hit a new high. Over 300,000 unreviewed edits are sitting in the queue across language versions, with English Wikipedia alone holding more than 150,000 pending changes. That’s not just a number; it’s a sign that the system is straining under the weight of its own success. Volunteers who spend hours checking edits, fixing vandalism, and verifying sources are stretched thinner than ever. And it’s not because people are editing less. It’s because they’re editing more, and faster, than the community can keep up with.

Why the Backlog Is Growing

Wikipedia’s open-editing model is its greatest strength and its biggest weakness. Anyone can edit. That means good-faith contributors add new facts, update statistics, and fix typos every minute. But it also means bots, spammers, and trolls throw in garbage just as fast. The system relies on human reviewers to sort through it all. And there aren’t enough of them.

Since 2020, the number of active editors on English Wikipedia has dropped by nearly 25%. That’s not because people lost interest. It’s because the process got harder. New editors face strict guidelines, confusing tools, and hostile feedback. One study from the University of Minnesota found that 70% of new contributors make their first edit and never return. Many get discouraged by automated warnings, reverted edits, or curt comments like “Read the manual” with no further guidance.

Meanwhile, the volume of edits keeps rising. Wikipedia now gets over 1.5 million edits per day. That’s more than 1,000 edits every minute. The tools haven’t kept pace. The pending changes queue still works the same way it did in 2012. Reviewers have to click through page after page, comparing old and new versions manually. No AI assistant helps flag likely good edits. No priority system surfaces high-traffic articles first.

What Gets Left Behind

Not all edits are equal. A typo in a biography of a living person gets fixed quickly. But a factual update to a niche topic, say the latest research on Antarctic krill populations, can sit for weeks. That’s because reviewers tend to focus on high-profile pages. Pop culture, celebrities, politics, and current events get attention. Science, history, and local topics? Not so much.

Take the article on “Polar Ice Core Dating Methods.” It had a major update in November 2025 with new data from the European Project for Ice Coring in Antarctica. That update was accurate, sourced, and well-written. But it stayed in the pending queue for 47 days. Why? Because no one was watching it. The article gets maybe 15 views a day. The reviewers are busy checking edits on Taylor Swift’s discography or the latest U.S. election results.

This creates a dangerous imbalance. Wikipedia becomes a mirror of what’s trending, not what’s important. Knowledge gaps grow in areas that don’t attract clicks. Students using Wikipedia for research end up with outdated or incomplete information on subjects that aren’t “sexy.”

The Human Cost

Behind every queued edit is a volunteer. These aren’t paid staff. They’re teachers, retirees, students, and engineers: people who care enough to spend their free time making sure Wikipedia stays reliable. But burnout is real.

A survey of 2,100 active Wikipedia editors in late 2025 found that 62% felt overwhelmed by the volume of edits. Over half said they’d reduced their editing time in the past year. One editor in Germany, who’s been reviewing for 14 years, told a researcher: “I used to check 50 edits a day. Now I do 10. I just can’t keep up. I feel like I’m letting people down.”

And it’s not just about time. The emotional toll matters too. Many reviewers face harassment. A simple edit reversion can trigger a flood of angry messages. Some editors report being doxxed, threatened, or accused of bias just for rejecting a bad edit. The same “no mercy” culture that drives newcomers away has become toxic for longtime contributors, too.

What’s Being Done

The Wikimedia Foundation is aware of the problem. In November 2025, it launched a pilot program called “FastTrack Review.” It uses simple machine learning to flag edits that are likely safe, like grammar fixes, date updates, or citations added to low-risk articles. These edits get auto-approved if they pass a checklist: no new claims, no removed sources, no controversial language.

Early results show a 38% reduction in review time for those flagged edits. But the system only works on a small subset. It can’t handle new facts, complex rewrites, or disputed topics. That still needs human eyes.
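The article doesn’t say how FastTrack Review implements its checklist, so what follows is only a hypothetical Python sketch of the gating logic as described. A model score decides whether an edit is “likely safe,” and only then do the three checks apply. Everything here is an assumption made for illustration: the `PendingEdit` fields, the 0.9 threshold, the tiny `CONTROVERSIAL_TERMS` list, and the use of `<ref>` tag counts as a stand-in for “no removed sources.” The “no new claims” check is left as a precomputed flag, because detecting new claims is exactly the hard part that still needs human eyes.

```python
import re
from dataclasses import dataclass

# Hypothetical sketch: none of these names come from the actual pilot.
# Matches opening <ref> tags (<ref>, <ref name="a">, <ref name="a" />)
# while skipping the <references /> list tag.
REF_TAG = re.compile(r"<ref[\s>/]", re.IGNORECASE)

# Placeholder word list standing in for a real "controversial language" check.
CONTROVERSIAL_TERMS = {"allegedly", "notorious", "so-called"}


@dataclass
class PendingEdit:
    old_text: str           # wikitext before the edit
    new_text: str           # wikitext after the edit
    safe_score: float       # assumed model output in [0, 1]
    adds_claims: bool       # assumed to come from a separate check
    low_risk_article: bool  # assumed page-level flag


def count_refs(text: str) -> int:
    return len(REF_TAG.findall(text))


def introduces_terms(old: str, new: str, terms: set[str]) -> bool:
    """True if the edit adds any listed word that was not already present."""
    old_words = set(re.findall(r"[\w-]+", old.lower()))
    new_words = set(re.findall(r"[\w-]+", new.lower()))
    return bool((new_words - old_words) & terms)


def should_auto_approve(edit: PendingEdit, threshold: float = 0.9) -> bool:
    # Step 1: only edits the model flags as likely safe, on low-risk pages.
    if not edit.low_risk_article or edit.safe_score < threshold:
        return False
    # Step 2: the checklist; every item must pass.
    no_new_claims = not edit.adds_claims
    # Proxy for "no removed sources": the <ref> count must not drop.
    no_removed_sources = count_refs(edit.new_text) >= count_refs(edit.old_text)
    no_loaded_words = not introduces_terms(
        edit.old_text, edit.new_text, CONTROVERSIAL_TERMS
    )
    return no_new_claims and no_removed_sources and no_loaded_words


typo_fix = PendingEdit(
    old_text="Krill are a key speceis.<ref>Smith 2020</ref>",
    new_text="Krill are a key species.<ref>Smith 2020</ref>",
    safe_score=0.97,
    adds_claims=False,
    low_risk_article=True,
)
print(should_auto_approve(typo_fix))  # True
```

A typo fix that keeps its citation passes. The same edit with the `<ref>` deleted fails the source check, even with a high model score. That is the design point: the model only nominates edits, and the checklist has the final say.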

Some communities are experimenting with new roles. In Spanish Wikipedia, “trusted reviewers” now have extended privileges to approve edits on specific topics they specialize in. In French Wikipedia, a “buddy system” pairs new editors with experienced ones for their first five edits. It’s not perfect, but it’s a start.

There’s also talk of introducing a “reviewer incentive” program-small digital badges, recognition on contributor profiles, maybe even limited access to advanced tools. Nothing financial. The culture of Wikipedia is built on volunteerism. But even volunteers need to feel seen.

What You Can Do

If you’ve ever thought about editing Wikipedia, now is the time. The backlog isn’t going away. But every review you do helps. You don’t need to be an expert. You just need to care enough to check a few edits.

Here’s how to start:

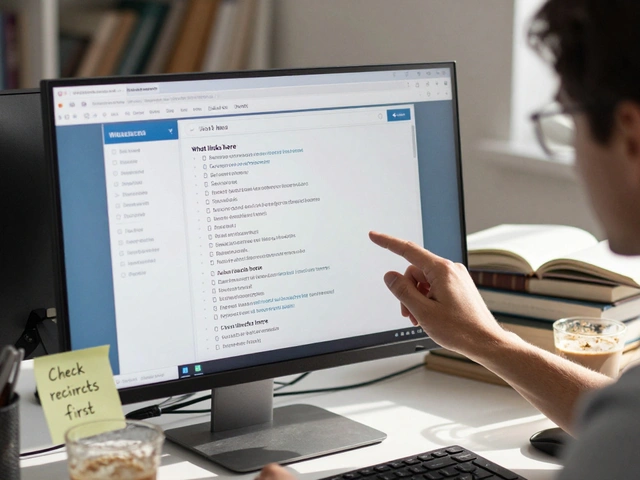

- Go to Special:PendingChanges on English Wikipedia, which lists articles with edits waiting for review.

- Pick an article from the list to open its pending changes.

- Look for edits marked “minor” or “bot” first; they’re usually safe.

- Check the diff: Did they add a source? Remove a claim? Fix a spelling error?

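- If it looks good, accept it. If not, undo it and leave a polite note. (Accepting pending changes on English Wikipedia requires the “pending changes reviewer” permission, which administrators grant to editors with some editing experience.)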
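If you like to script, you can sketch the “safe edits first” order from the steps above in Python. This is a hypothetical helper, not a Wikipedia feature. It assumes the MediaWiki Action API’s `list=recentchanges` query with `formatversion=2`, where each edit carries boolean `minor` and `bot` flags and `oldlen`/`newlen` page sizes. The function names and the User-Agent string are placeholders.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical helper, not an official Wikipedia tool.
API_URL = "https://en.wikipedia.org/w/api.php"


def fetch_recent_edits(limit: int = 50) -> list[dict]:
    """Fetch recent edits via the MediaWiki Action API (needs network access)."""
    params = {
        "action": "query",
        "list": "recentchanges",
        "rctype": "edit",
        "rcprop": "title|ids|sizes|flags|user|timestamp",
        "rclimit": str(limit),
        "format": "json",
        "formatversion": "2",  # flags come back as true/false booleans
    }
    url = API_URL + "?" + urllib.parse.urlencode(params)
    # Wikimedia asks API clients for a descriptive User-Agent; this one is a placeholder.
    request = urllib.request.Request(
        url, headers={"User-Agent": "backlog-triage-sketch/0.1 (your contact here)"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        data = json.load(response)
    return data["query"]["recentchanges"]


def triage_key(rc: dict) -> tuple[int, int]:
    """Sort key: minor or bot edits first, then the smallest size change."""
    likely_safe = bool(rc.get("minor", False) or rc.get("bot", False))
    size_change = abs(rc.get("newlen", 0) - rc.get("oldlen", 0))
    return (0 if likely_safe else 1, size_change)


def triage(changes: list[dict]) -> list[dict]:
    """Return the same edits, reordered for review; nothing is approved here."""
    return sorted(changes, key=triage_key)
```

Calling `triage(fetch_recent_edits())` returns the same records in review order. A small edit flagged as minor lands near the top, while a large unflagged rewrite sinks to the bottom, where it deserves a slower read. The recent-changes feed covers all edits, not just those held in the pending-changes queue, so this gives you a reading list rather than a replacement for the on-wiki review tools.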

You can also join the Wikipedia Teahouse, a friendly space for new editors to ask questions. Or visit a WikiProject for a topic you care about, such as history, science, or local events, and help review edits in that area.

It takes five minutes. But those five minutes could mean the difference between a student getting accurate information and being misled by outdated information.

The Bigger Picture

Wikipedia isn’t just a website. It’s one of the last open, community-run knowledge systems on the internet. Unlike corporate platforms that lock content behind paywalls or algorithms, Wikipedia is free for everyone. But it only works if people show up.

When the backlog grows, it’s not just a technical problem. It’s a cultural one. It says: “We can’t keep up with what we’ve built.” And if we don’t fix it, we risk losing the very thing that makes Wikipedia unique: its trustworthiness.

The next time you use Wikipedia to check a fact, remember: someone spent time making sure that fact was right. Now it’s your turn to help.

Why is Wikipedia’s editing backlog growing so fast?

The backlog is growing because the number of edits per day has increased dramatically, to over 1.5 million, while the number of active editors on English Wikipedia has dropped by nearly 25% since 2020. New editors often leave after facing strict rules and hostile feedback, and the tools for reviewing edits haven’t improved in over a decade. The result is a widening gap between how much is being edited and how much can be checked.

Are bots helping reduce the backlog?

Bots help with simple tasks like fixing formatting, removing spam, and flagging vandalism, but they can’t review new factual claims, complex rewrites, or disputed content. That still requires human judgment. A new pilot called FastTrack Review uses AI to auto-approve low-risk edits, which has cut review time by 38% for those cases, but it only applies to a small fraction of all edits.

Why do some edits stay unreviewed for weeks?

Edits to low-traffic articles-like niche scientific topics or local history-get ignored because reviewers focus on high-profile pages. A change to a celebrity’s biography might be reviewed in hours. An update to a lesser-known species’ habitat data could sit for months. This creates knowledge gaps in areas that don’t attract clicks, even if they’re important.

Can I help review Wikipedia edits even if I’m new?

Yes. You don’t need to be an expert. Start by checking minor edits on low-traffic pages. Look for spelling fixes, added sources, or date updates. If the edit is accurate and follows Wikipedia’s guidelines, accept it (on English Wikipedia, accepting pending changes requires the “pending changes reviewer” permission, which administrators grant to editors with some track record). If it’s unclear or suspicious, revert it and leave a polite note. The Teahouse and WikiProjects offer friendly support for new reviewers.

What’s being done to support volunteer editors?

The Wikimedia Foundation is piloting FastTrack Review, and individual language communities are trying new roles: topic-based privileges for trusted reviewers on Spanish Wikipedia and a buddy system for new editors on French Wikipedia. There’s also discussion of recognition programs, such as badges, profile highlights, and tool access, to reward reviewers without paying them. The goal is to make reviewing less overwhelming and more rewarding.

Wikipedia’s future depends on people who care enough to act: not just to read, but to review, to correct, to help. The backlog won’t clear itself. But with a few hundred more people stepping in, it can start to shrink.