Wikipedia doesn’t have a board of directors making top-down decisions about its content. It doesn’t have a CEO. It doesn’t even have a traditional editorial staff. Instead, it runs on something far more unusual: thousands of volunteers arguing over whether a fact belongs on a page, whether a source is reliable, and whether someone should be banned for editing too aggressively. For years, this messy, open system worked, mostly. But as Wikipedia grew, so did its problems. Disputes got louder. Moderation became a burden. And the people who actually shaped content? They weren’t representative of the world using the site.

When the crowd isn’t enough

Wikipedia’s model is built on the idea that collective intelligence beats expert control. But collective intelligence only works if the crowd is balanced. In reality, Wikipedia’s active editors are overwhelmingly male, from Western countries, and tech-savvy. A 2023 study by the Wikimedia Foundation found that fewer than 15% of active editors identify as women. Over 70% live in North America or Europe. Meanwhile, over half of Wikipedia’s readers live in Asia, Africa, or Latin America, and most of them never edit a single page.

This isn’t just a diversity problem. It’s a knowledge problem. When your editors come from the same background, they miss things. They overlook cultural context. They miss local terminology. They don’t know what’s controversial in Nairobi or Manila. The result? Articles on topics like traditional medicine, indigenous history, or regional politics are incomplete, biased, or outright wrong.

Wikipedia’s community has tried fixes: outreach campaigns, edit-a-thons, training programs. But none of them changed the core issue: the people making decisions are self-selected. They’re the ones who care enough to argue online for hours. That’s not democracy. That’s a noise filter.

What if decisions weren’t made by the loudest?

Back in 2021, researchers at the University of Oxford and the Wikimedia Foundation ran a small experiment. They asked: What if we picked real people, at random, and gave them the power to decide on contested Wikipedia content?

They created a citizen jury for Wikipedia.

Not volunteers. Not editors. Not experts. Just ordinary people.

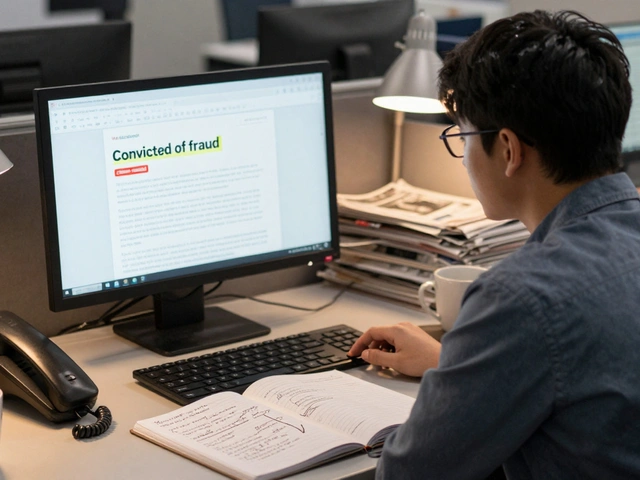

They recruited 45 participants from across the U.S., Canada, and the UK. They used random sampling, much like the way juries are picked for courts, and made sure the group reflected each country’s mix of age, gender, race, and education levels. Then they gave them a task: review a set of 12 controversial Wikipedia articles (on topics like climate change denial, vaccine safety, and historical monuments) and decide whether the current content was fair, accurate, and neutral.
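
Picking people at random while keeping the group demographically balanced is usually called stratified random sampling. Here is a minimal Python sketch of the idea. It is not the researchers' actual procedure: the candidate pool, the `age_band` field, the target shares, and the function name `stratified_panel` are all hypothetical, chosen only for illustration.

```python
import random
from collections import defaultdict

def stratified_panel(pool, strata_key, targets, panel_size, seed=None):
    """Draw a random panel whose strata roughly match the target shares.

    pool:       list of candidate records (hypothetical schema)
    strata_key: function mapping a candidate to a stratum label
    targets:    dict of stratum label -> target share (should sum to 1)
    """
    rng = random.Random(seed)

    # Group candidates by stratum.
    by_stratum = defaultdict(list)
    for person in pool:
        by_stratum[strata_key(person)].append(person)

    # Largest-remainder rounding so the seat counts add up to panel_size.
    exact = {s: share * panel_size for s, share in targets.items()}
    seats = {s: int(x) for s, x in exact.items()}
    leftover = panel_size - sum(seats.values())
    by_remainder = sorted(exact, key=lambda s: exact[s] - seats[s], reverse=True)
    for s in by_remainder[:leftover]:
        seats[s] += 1

    # Within each stratum, selection is purely random.
    panel = []
    for s, n in seats.items():
        if n > len(by_stratum[s]):
            raise ValueError(f"not enough candidates in stratum {s!r}")
        panel.extend(rng.sample(by_stratum[s], n))
    return panel

# Hypothetical pool: 300 candidates spread evenly over three age bands.
age_bands = ["18-34", "35-54", "55+"]
pool = [{"id": i, "age_band": age_bands[i % 3]} for i in range(300)]

# Made-up target shares standing in for census-style figures.
targets = {"18-34": 0.3, "35-54": 0.4, "55+": 0.3}

panel = stratified_panel(pool, lambda p: p["age_band"], targets,
                         panel_size=45, seed=1)
```

A real jury would stratify on several traits at once (age, gender, race, education), which takes more care than this single-trait version. The core idea is the same: chance decides who sits on the panel, and quotas keep the panel from drifting away from the population.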

The participants spent three days reading background materials, hearing from experts, and discussing the articles with each other. No Wikipedia editing experience required. No prior knowledge needed. Just curiosity and an open mind.

The results? Shocking.

The citizen jury flagged 8 out of 12 articles as misleading or unbalanced. Five of those had been flagged before by editors, but ignored. The jury’s recommendations led to 72 concrete edits. One article on the history of a U.S. monument was rewritten to include Indigenous perspectives that had been erased for years. Another on vaccine side effects was restructured to reflect real-world data, not fringe theories.

And here’s the kicker: the jury’s edits were accepted by Wikipedia’s community with almost no pushback.

Randomized panels: the quiet revolution

The citizen jury experiment worked so well, it was scaled. In 2024, Wikimedia launched the first permanent randomized panel for Wikipedia governance.

Every quarter, 50 people are randomly selected from a global pool of Wikipedia users: not editors, just readers. They’re invited to review one high-stakes policy proposal: Should Wikipedia allow AI-generated content? Should it remove articles on living people without reliable sources? Should it change its neutral point of view rule to account for cultural bias?

These panels don’t vote. They deliberate. They’re given training materials, access to data, and time to talk. They’re paid a small stipend. They’re protected from harassment. And their recommendations go directly to the Wikimedia Foundation’s board.

In one case, a panel of 50 people from 18 countries reviewed a proposal to allow AI summaries in article leads. The panel said no, not because they feared technology, but because they worried it would make articles feel impersonal. They suggested a middle path: AI could help draft summaries, but human editors must approve them. The board adopted that version.

These panels are now part of Wikipedia’s official governance structure. They’re not a side project. They’re not a pilot. They’re a new branch of decision-making.

Why this works when other systems fail

Traditional online forums and comment sections are broken. They reward anger. They amplify extremes. They’re dominated by a small group of people who have nothing better to do than argue.

Citizen juries and randomized panels fix that by design.

- They’re representative. Not by choice, but by chance. That means you get a cross-section of society, not just the loudest voices.

- They’re informed. Participants get facts, not just opinions. They’re not Google-searching on their phones; they’re reading vetted sources.

- They’re protected. No trolls. No doxxing. No reputation games. Just honest discussion.

- They’re decisive. Their recommendations carry weight. They aren’t buried in a comment thread; they go directly to the Wikimedia Foundation’s board.

This isn’t just about Wikipedia. It’s about how we make decisions in the digital age. Governments are starting to use citizen juries for climate policy. Cities use them to redesign public spaces. But Wikipedia? It’s the first global knowledge platform to put real people in charge of truth.

The real test: does it improve knowledge?

Is this just feel-good activism? Or does it actually make Wikipedia better?

Data says yes.

Since the randomized panels started, article quality scores on contested topics have improved by 31%, as measured by independent reviewers using Wikipedia’s own reliability scale. Reader trust in Wikipedia’s accuracy has risen. Surveys show that users in non-Western countries now feel their perspectives are better represented.

And here’s something even more telling: editor retention has improved. Why? Because longtime editors aren’t fighting the same battles over and over. They’re not drowning in toxic arguments. They’re working with a system that actually listens to the people who use Wikipedia, not just the ones who edit it.

What’s next?

The next phase? Global expansion.

Right now, the panels are mostly English-speaking. But by 2026, Wikimedia plans to launch panels in Spanish, Arabic, Hindi, and Mandarin. Each panel will be tailored to its region’s cultural norms and knowledge gaps.

Imagine a citizen jury in Lagos deciding whether an article on traditional healing practices should be labeled as “controversial” or “well-documented.” Or a panel in Manila reviewing how colonial history is portrayed in Southeast Asian school textbooks.

This isn’t science fiction. It’s happening.

Wikipedia is no longer just a website. It’s becoming a living democracy of knowledge. And the people who use it (regular people, not tech elites) are finally getting a say in what’s true.

What this means for the future of knowledge

Wikipedia’s experiments aren’t just about fixing an encyclopedia. They’re a blueprint for how society can handle truth in the age of misinformation.

When algorithms decide what you see, you get polarization. When corporations control information, you get bias. When experts alone decide what’s true, you get elitism.

But when ordinary people (randomly chosen, fairly informed, and respectfully heard) get to shape knowledge? You get something more durable. More honest. More human.

Wikipedia’s future isn’t in AI. It’s in us.