When a major news outlet issues a correction, it doesn’t just fix a mistake; it can trigger a chain reaction on Wikipedia. You might think Wikipedia is a static archive, but it’s not. It’s a living document that reacts to real-time events, especially when those events come from trusted sources like The New York Times, BBC, or Reuters. If a news article gets updated with a correction, editors on Wikipedia often follow suit, sometimes within hours. This isn’t just about being accurate. It’s about credibility. Wikipedia’s entire system depends on reliable sourcing, and when the sources change, the encyclopedia has to change too.

Why News Corrections Matter to Wikipedia

Wikipedia doesn’t allow original reporting. Everything must be backed by a published, verifiable source. That means if a news story says a politician said something they didn’t, and later retracts it, Wikipedia’s entry on that politician must be updated. Otherwise, the false claim stays live indefinitely, misleading readers. This isn’t theoretical. In 2023, a BBC correction about a misquoted statistic in a climate report led to over 20 Wikipedia articles being edited within 48 hours. Those weren’t minor tweaks. They were full rewrites of key data points in entries about policy, economics, and public health.

Wikipedia editors don’t just copy-paste corrections. They analyze them. They check if the correction changes the meaning, the scale, or the context. A typo in a name? Probably not worth changing unless it’s a public figure. But a misreported death toll? That’s urgent. The community has guidelines: “Cite the most reliable source available,” and “If a source retracts or corrects a claim, update the article accordingly.” These aren’t suggestions. They’re rules enforced by thousands of volunteer editors.

How Corrections Travel from Newsrooms to Wikipedia

The path from a news correction to a Wikipedia edit is rarely direct. Most of the time, it’s driven by readers. Someone reads the original article, then sees the correction days later. They notice the Wikipedia entry still has the wrong info. They flag it. Sometimes, they edit it themselves. Other times, they post a note on the article’s talk page. From there, an experienced editor picks it up, verifies the correction in the original news source, and makes the change.

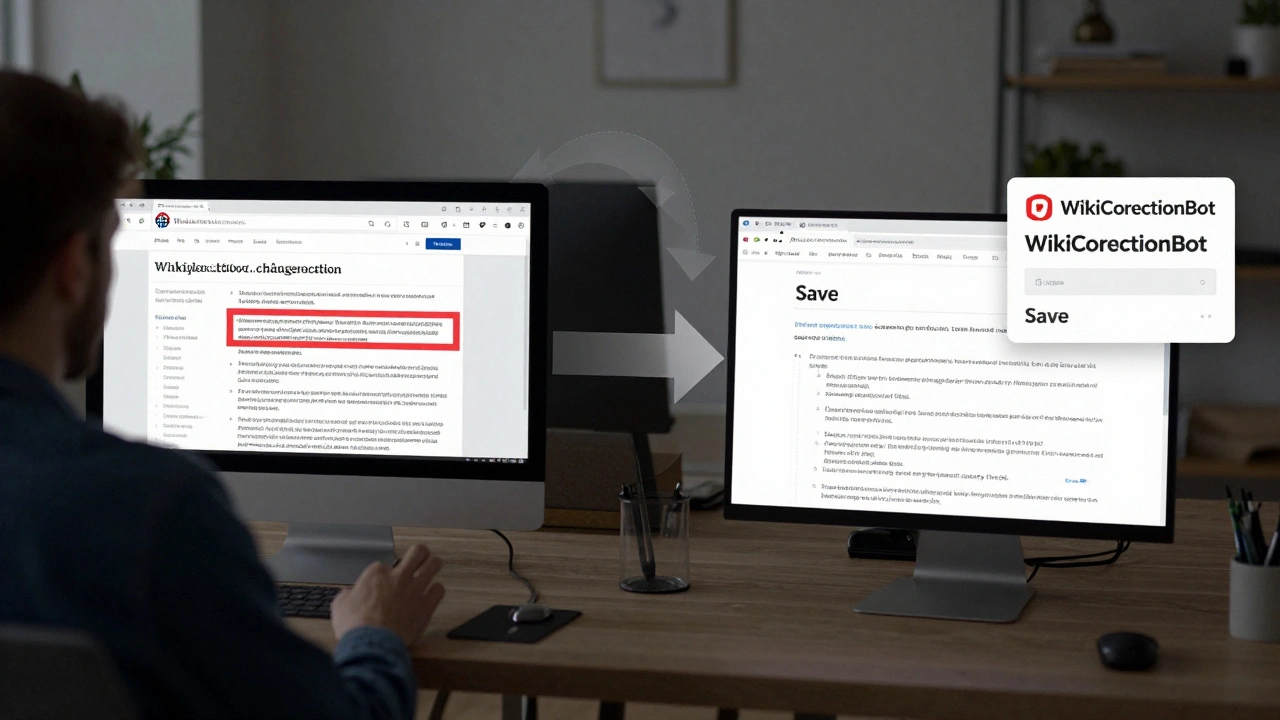

There are also automated tools that help. The Wikipedia bot “WikiCorrectionBot” monitors major news outlets for retractions and corrections. It doesn’t edit directly; instead, it alerts human editors with links to the updated article. In 2024, this bot flagged over 1,200 potential corrections across English Wikipedia. About 78% of them led to actual edits. The rest were rejected because the correction was too minor, or the source wasn’t considered reliable enough by Wikipedia’s standards.

Not all news corrections are treated equally. A correction from a local newspaper might not trigger any action. But a correction from a global outlet like AP or AFP? That’s a red flag. Wikipedia’s reliability scale favors high-visibility, high-standards sources. The more widely read the original article, the more likely it is to prompt a Wikipedia edit.

When News Corrections Go Wrong

It’s not always clean. Sometimes, news outlets issue corrections that are vague, incomplete, or even contradictory. In 2022, a Reuters article corrected its reporting on a corporate merger, saying the deal had been “scaled back” but never clarifying by how much. Wikipedia editors spent weeks debating whether to remove the original figure, keep it with a note, or replace it with “unclear.” The final version included a detailed footnote explaining the ambiguity. That’s rare. Most corrections are straightforward. But when they’re not, Wikipedia becomes a battleground of interpretation.

Another problem: some news outlets correct errors without publicly archiving the original version. That makes it impossible for Wikipedia editors to prove what was wrong. Wikipedia requires traceable evidence. If the original claim can’t be verified, editors often leave the content unchanged, not out of stubbornness but out of policy. Without a public record of the error, they can’t justify the edit.

There’s also the issue of timing. Some corrections come days or weeks after the original story. By then, the Wikipedia entry has been cited in academic papers, blog posts, and even textbooks. Changing it means undoing a ripple effect. Editors have to weigh the risk of disrupting existing references against the need for accuracy. It’s a slow, cautious process.

The Ripple Effect: How One Correction Changes Everything

A single correction in a major news article can trigger edits across dozens of Wikipedia pages. For example, when The Guardian corrected its report on the number of people affected by a food safety scandal in 2023, it didn’t just change one article. It affected entries on public health policy, regulatory agencies, food industry statistics, and even related Wikipedia pages in other languages. Editors in Spanish, French, and German Wikipedias noticed the change and updated their versions too.

This cross-language effect is one of Wikipedia’s most powerful features. It’s not just a single encyclopedia. It’s a global network of interconnected knowledge. A correction in English can spark revisions in 15 other languages within a week. That’s why newsrooms that understand Wikipedia’s influence now include “Wikipedia impact” in their editorial review checklists. Some even assign a staff member to monitor how their stories appear on Wikipedia after publication.

It’s not just about fixing mistakes. It’s about controlling narrative drift. If a false claim spreads on Wikipedia, it can become accepted as fact, especially if it’s cited by other sites. A 2021 study by the University of Oxford found that 63% of claims about public figures that originated in news media were still present in Wikipedia entries even after the original source had been corrected. That’s a huge gap between media accountability and encyclopedia accuracy.

What This Means for Readers

As a reader, you should never assume Wikipedia is perfect. But you should assume it’s one of the most responsive reference tools out there. When you see a citation to a news article in a Wikipedia entry, check if that article has been updated. Many Wikipedia pages now include links to the version of the source used at the time of editing. You can compare it to the current version. If there’s a mismatch, you’re seeing a gap, and you can help fix it.
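If you have the text of both versions on hand, the comparison can be partly automated. The following is a minimal sketch, assuming you have already copied the archived and current article text into two strings; the function name `changed_sentences` and the sample sentences are hypothetical, and splitting on periods is a deliberately crude stand-in for real sentence detection.

```python
import difflib


def changed_sentences(archived_text: str, current_text: str) -> list[str]:
    """List sentences removed ('- ') or added ('+ ') between two versions.

    Sentences are split naively on '.', which will mis-split decimals and
    abbreviations; this is an illustration, not a robust tokenizer.
    """
    old = [s.strip() for s in archived_text.split(".") if s.strip()]
    new = [s.strip() for s in current_text.split(".") if s.strip()]
    # ndiff prefixes each line with '- ', '+ ', '  ' (unchanged) or '? ' (hint).
    # Keep only the removed and added sentences.
    return [line for line in difflib.ndiff(old, new)
            if line.startswith(("- ", "+ "))]


if __name__ == "__main__":
    # Hypothetical example text, not taken from any real article.
    archived = "The deal was worth 5 billion dollars. It closed in May."
    current = "The deal was worth 3 billion dollars. It closed in May."
    for line in changed_sentences(archived, current):
        print(line)
```

An empty result means the two versions match sentence for sentence under this rough split; any `-`/`+` pair is worth checking against the claim cited on Wikipedia.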

Wikipedia’s transparency is its strength. Every edit is recorded. Every change has a reason. If you’re unsure about a fact, click the “View history” tab. You’ll see who changed it, when, and why. Often, you’ll find a link to the news correction that prompted it. That’s more than most reference sources offer.
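The same history is available programmatically through the MediaWiki Action API. The sketch below is one possible way to read it, assuming the standard `action=query` / `prop=revisions` endpoint on English Wikipedia; the helper names (`history_url`, `summarize_revisions`, `fetch_history`) and the User-Agent string are made up for illustration.

```python
import json
import urllib.parse
import urllib.request

API_URL = "https://en.wikipedia.org/w/api.php"


def history_url(title: str, limit: int = 10) -> str:
    """Build a query URL for the most recent revisions of one page."""
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "timestamp|user|comment",  # when, who, and the edit summary
        "rvlimit": limit,
        "format": "json",
        "formatversion": 2,  # pages come back as a list instead of a dict
    }
    return API_URL + "?" + urllib.parse.urlencode(params)


def summarize_revisions(response: dict) -> list[str]:
    """Turn a parsed API response into 'timestamp user: comment' lines."""
    lines = []
    for page in response.get("query", {}).get("pages", []):
        for rev in page.get("revisions", []):
            lines.append(
                f'{rev.get("timestamp", "?")} {rev.get("user", "?")}: '
                f'{rev.get("comment", "")}'
            )
    return lines


def fetch_history(title: str, limit: int = 10) -> list[str]:
    """Fetch and summarize recent revisions (performs a network request)."""
    request = urllib.request.Request(
        history_url(title, limit),
        # Hypothetical User-Agent; replace with one that identifies you.
        headers={"User-Agent": "history-sketch/0.1 (illustrative example)"},
    )
    with urllib.request.urlopen(request) as resp:
        return summarize_revisions(json.load(resp))
```

Calling `fetch_history("Some article title")` returns one line per recent edit; scanning the edit summaries for a link to a news correction is then a matter of reading or filtering those lines.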

For journalists, this is a wake-up call. Your corrections don’t just fix your reputation; they fix the world’s understanding. If you get something wrong, and don’t correct it properly, you’re not just misleading your readers. You’re misleading millions who rely on Wikipedia as their first stop for truth.

How You Can Help

You don’t need to be an expert to help keep Wikipedia accurate. If you spot a Wikipedia article that cites a news story you know was corrected, you can make a difference. Here’s how:

- Find the Wikipedia article with the outdated claim.

- Open the news article’s correction page and copy the URL.

- Go to the Wikipedia article’s “Talk” page and post a note: “The source cited here was corrected on [date]. See: [link].”

- If you’re comfortable editing, make the change yourself. Cite the corrected source. Add a note in the edit summary: “Updated per news correction: [link].”
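If you report corrections often, the two messages in the steps above can be generated from a template. This is only a small sketch: the function names are hypothetical, the wording is taken from the steps above, and the date format (ISO 8601) is an assumption rather than a Wikipedia requirement.

```python
from datetime import date


def talk_page_note(corrected_on: date, correction_url: str) -> str:
    """Build the note to post on the article's Talk page."""
    return (
        f"The source cited here was corrected on {corrected_on.isoformat()}. "
        f"See: {correction_url}"
    )


def edit_summary(correction_url: str) -> str:
    """Build the edit summary to use when making the change yourself."""
    return f"Updated per news correction: {correction_url}"


if __name__ == "__main__":
    # Placeholder date and URL for illustration only.
    url = "https://example.org/correction"
    print(talk_page_note(date(2024, 3, 5), url))
    print(edit_summary(url))
```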

Wikipedia doesn’t reward fame. It rewards accuracy. And every small edit adds up.

Do news corrections always lead to Wikipedia edits?

No. Only corrections from sources Wikipedia considers reliable, such as major newspapers, wire services, or academic journals, trigger edits. Minor corrections, typos, or changes from low-traffic outlets often don’t meet Wikipedia’s threshold for action. Editors also need clear, traceable evidence of the correction to justify a change.

Can Wikipedia editors change content without a news correction?

Yes, but only if they have another reliable source that contradicts the current claim. Wikipedia doesn’t allow edits based on personal knowledge or unpublished information. Even if you know a news story was wrong, you still need a credible published source to back up your edit.

Why does Wikipedia take so long to update after a correction?

Because editors prioritize accuracy over speed. They verify the correction, check if it’s widely reported, assess whether it changes the meaning of the claim, and sometimes wait to see if other sources confirm it. Rushing changes can introduce new errors. The system is designed to be cautious, not slow.

Are there tools that automatically update Wikipedia when news is corrected?

Yes, but they’re assistants, not replacements. Bots like WikiCorrectionBot monitor news outlets for retractions and alert human editors. They don’t make edits themselves. Human judgment is still required to determine if a correction is significant enough to warrant a change on Wikipedia.

What happens if a news outlet never issues a correction?

If the original claim remains uncorrected, Wikipedia keeps it as is unless another reliable source proves it wrong. Wikipedia doesn’t police news organizations. It only reflects what’s published. If a false claim isn’t retracted, Wikipedia may carry it indefinitely, which is why reader vigilance is so important.