When a breaking story hits the news, journalists don’t just check their sources; they check Wikipedia. It’s not because they think it’s perfect. It’s because it’s fast, public, and often the first place people turn to understand what’s happening. And that’s where the real influence begins.

Wikipedia as a Real-Time News Tracker

On January 6, 2021, Wikipedia’s page on the U.S. Capitol riot was edited over 1,200 times in the first hour. By noon, it had more edits than most major news outlets had published articles. Journalists monitoring that page noticed patterns: which facts were being added, which were being removed, which sources were being cited. Some used those edits to spot emerging narratives before they appeared in traditional press.

It’s not just about speed. Wikipedia acts like a live feed of public understanding. If a rumor is spreading quickly but hasn’t been confirmed by official sources, it often shows up on Wikipedia first, then gets flagged, debated, and sometimes corrected. Editors at major newsrooms in New York, London, and Berlin now have alerts set up for high-traffic changes on pages related to breaking events.
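One way to picture such an alert is a sliding-window counter over a page's revision timestamps. The sketch below is only an illustration, not a description of any newsroom's actual tooling: the function name `find_edit_bursts`, the 10-minute window, and the threshold of 5 edits are assumptions, and the sample timestamps are made up. In practice the timestamps would come from a page's public revision history.

```python
from collections import deque
from datetime import datetime, timedelta


def find_edit_bursts(timestamps, window=timedelta(minutes=10), threshold=5):
    """Return each edit time at which the trailing window holds >= threshold edits.

    `window` and `threshold` are illustrative defaults, not recommended values.
    """
    recent = deque()  # edit times that fall inside the trailing window
    alerts = []
    for ts in sorted(timestamps):
        recent.append(ts)
        # Drop edits that are older than the window relative to this edit.
        while ts - recent[0] > window:
            recent.popleft()
        if len(recent) >= threshold:
            alerts.append(ts)
    return alerts


# Hypothetical sample data: three slow edits, then five edits in five minutes.
base = datetime(2024, 1, 1, 12, 0)
sample = [base + timedelta(minutes=m) for m in (0, 30, 60, 90, 91, 92, 93, 94)]
alerts = find_edit_bursts(sample)
```

With this sample, the only alert fires on the fifth edit of the rapid run. A real monitor would also need to decide how often to poll and how to avoid repeating the same alert, which this sketch leaves out.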

How Editors Use Wikipedia to Validate or Challenge Stories

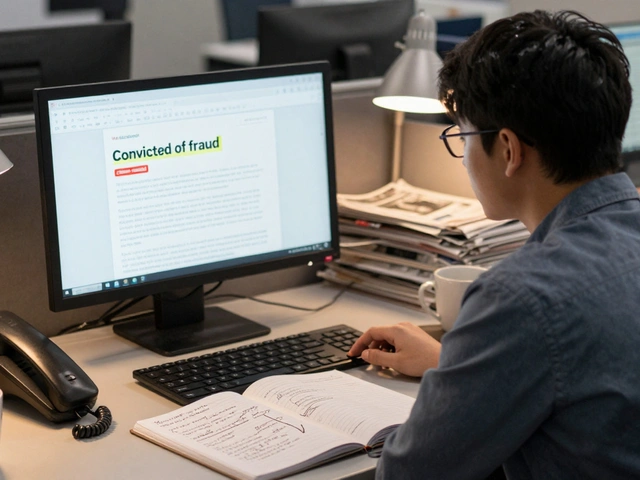

Here’s how it works in practice: a reporter gets a tip about a government official resigning. Before calling the press office, they check the Wikipedia page for that person. If the page suddenly has a new section titled “Resignation,” with multiple citations from local blogs and Twitter threads but no major outlets reporting it yet, that’s a red flag. It might mean the story is unverified. Or it might mean the official press release hasn’t come out yet.

At The Guardian, editors started using Wikipedia as a preliminary verification tool after a 2022 incident where a false rumor about a minister’s resignation spread across social media. The Wikipedia page had been edited with fabricated quotes. The editorial team saw it, cross-checked with their own sources, and held the story. They later published a piece explaining how misinformation spread via Wikipedia edits.

Wikipedia doesn’t replace journalism. It acts as a pressure test. If a claim survives hours of public scrutiny on Wikipedia without being reverted or tagged as disputed, it gains a kind of credibility that’s hard to fake.

The Reverse Influence: How News Coverage Changes Wikipedia

The flow isn’t one-way. When a major outlet like Reuters or AP publishes a story, Wikipedia editors rush to update relevant pages. In 2023, after The New York Times broke the story about the CEO of a major tech firm stepping down due to internal misconduct, Wikipedia’s page for that company was updated within 17 minutes. The change included direct quotes from the article, links to the original report, and a note citing the source.

But here’s the catch: Wikipedia doesn’t just copy. It filters. Editors require reliable sources. They reject opinion pieces, press releases, and unverified blogs. That means if a news outlet publishes something without solid sourcing, Wikipedia won’t reflect it, even if the story goes viral on social media.

This creates a strange dynamic: journalism shapes Wikipedia, but Wikipedia also shapes what journalism gets to be considered legitimate. If a story isn’t deemed newsworthy enough to make it onto Wikipedia’s stable version, it often fades from broader public awareness, even if it’s reported in smaller outlets.

Editorial Bias and the “Wikipedia Effect”

Wikipedia isn’t neutral. It reflects who edits it. Studies from the University of Oxford show that pages on U.S. politics are more likely to be edited by people in coastal cities, while pages on global conflicts are often shaped by Western media perspectives. That bias leaks into newsrooms.

Reporters covering international events sometimes rely on Wikipedia summaries written by editors who’ve never been to the region. A 2024 survey of 142 journalists across 12 countries found that 38% had used Wikipedia as a background reference for stories about regions they weren’t familiar with. Of those, 22% admitted they didn’t verify whether the Wikipedia content reflected local viewpoints.

That’s dangerous. In 2023, a major news organization ran a feature on protests in a Southeast Asian country, using Wikipedia’s summary as a foundation. The article portrayed the movement as “anti-government.” But local editors on Wikipedia had spent weeks correcting that framing, arguing the movement was “pro-democracy.” The correction hadn’t made it into the mainstream version of the page yet. The newsroom published the wrong narrative and didn’t correct it for three days.

When Wikipedia Becomes a Gatekeeper

Some editors at Bloomberg and The Washington Post now treat Wikipedia’s “stable version” as a threshold. If a topic doesn’t have a Wikipedia page, or if the page is flagged as “incomplete” or “needs citations,” they treat it as too volatile to report on. They wait until Wikipedia stabilizes.

That’s not laziness. It’s risk management. Publishing a story on a topic that’s still being debated on Wikipedia means you might be chasing a false consensus. It also means you might be amplifying misinformation that’s still being contested.

One editor at The Atlantic told me: “We don’t wait for Wikipedia to be right. We wait for it to be settled. If the edits stop, the citations hold, and the talk page calms down, that’s when we feel safe to write.”
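The editor’s three signals can be turned into a rough checklist. The sketch below is a loose interpretation, not anyone’s actual editorial rule: `Revision`, `looks_settled`, the 24-hour lookback, and both edit limits are hypothetical, and “the citations hold” is approximated by the absence of recent reverts, which is only a proxy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Revision:
    """A single edit; `is_revert` marks an edit that undoes an earlier one."""
    timestamp: datetime
    is_revert: bool = False


def looks_settled(article_revs, talk_revs, now,
                  lookback=timedelta(hours=24),
                  max_edits=3, max_talk_edits=5):
    """Heuristic: few recent edits, no recent reverts, and a quiet talk page.

    All thresholds are illustrative assumptions, not established practice.
    """
    cutoff = now - lookback
    recent = [r for r in article_revs if r.timestamp >= cutoff]
    recent_talk = [r for r in talk_revs if r.timestamp >= cutoff]
    edits_stopped = len(recent) <= max_edits
    citations_hold = not any(r.is_revert for r in recent)  # proxy only
    talk_calm = len(recent_talk) <= max_talk_edits
    return edits_stopped and citations_hold and talk_calm


# Hypothetical sample data.
now = datetime(2024, 1, 2, 12, 0)
busy_page = [Revision(now - timedelta(hours=h), is_revert=(h == 2))
             for h in range(1, 11)]
calm_page = [Revision(now - timedelta(hours=2)),
             Revision(now - timedelta(days=3), is_revert=True)]

busy_result = looks_settled(busy_page, talk_revs=[], now=now)
calm_result = looks_settled(calm_page, talk_revs=[], now=now)
```

Here the busy page fails the check and the calm page passes, because the calm page’s only revert falls outside the lookback window. A check like this says nothing about whether the settled text is accurate, which is the editor’s point: it measures quiet, not truth.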

The Ethical Tightrope

Journalists are trained to be skeptical. But Wikipedia blurs the line between source and subject. When a reporter cites Wikipedia as a reference, they’re not citing a person; they’re citing a crowd. And that crowd doesn’t have accountability.

Some newsrooms now have internal policies: “Do not cite Wikipedia directly. Use it to find primary sources.” Others are more relaxed. A 2025 internal audit at NPR found that 11% of online articles referenced Wikipedia in footnotes, even though their editorial guidelines forbid it.

The real issue isn’t citation. It’s influence. When editors at major outlets start shaping their stories based on what’s trending on Wikipedia, they’re outsourcing part of their judgment to an open-editing platform. That’s not inherently bad, but it’s not neutral either.

What This Means for the Future of Journalism

Wikipedia isn’t replacing journalists. But it’s changing how they work. The speed of news now depends on how quickly information stabilizes across platforms, and Wikipedia is one of the fastest indicators of that stability.

Newsrooms that ignore Wikipedia are missing a real-time pulse of public understanding. Newsrooms that rely on it blindly are risking bias and inaccuracy. The smart ones treat it like a live dashboard: useful, but never final.

The next time you see a headline about a major event, ask yourself: Did the journalist check Wikipedia? And if they did, did they understand what they were seeing?

Does Wikipedia influence what news outlets report?

Yes, indirectly. Journalists use Wikipedia to track emerging narratives, spot misinformation, and identify which stories are gaining traction. If a topic stabilizes on Wikipedia with reliable citations, it’s more likely to be covered by major outlets. But Wikipedia doesn’t dictate headlines; it helps journalists decide what’s worth covering.

Can Wikipedia be trusted as a news source?

No, not directly. Wikipedia is an encyclopedia, not a news outlet. Its strength is synthesis: pulling together verified information from credible sources. Journalists use it to find those sources, not to quote Wikipedia itself. Relying on Wikipedia as a primary source risks spreading unverified claims or outdated edits.

Why do journalists check Wikipedia before verifying a story?

Because it’s fast and public. If a rumor appears on Wikipedia with multiple citations, it’s a signal that others are investigating it too. If the edit history shows constant reversions and disputes, it’s a warning that the story is still contested. It’s a diagnostic tool, not a conclusion.

Do newsrooms have policies about using Wikipedia?

Many do. Major outlets like The New York Times, BBC, and Reuters prohibit citing Wikipedia directly in published work. Instead, they use it to locate primary sources, such as official statements, academic papers, or verified interviews. Some smaller outlets have looser policies, which increases the risk of error.

Can Wikipedia edits be manipulated by media organizations?

Yes, and it happens. PR teams, political groups, and even journalists have been caught editing Wikipedia to shape narratives. Wikipedia’s community actively fights this with reverts, page protection, and oversight tools. But manipulation still occurs, especially on controversial topics. That’s why journalists treat Wikipedia as a signal, not a source.