Wikipedia background research: How knowledge is built, verified, and defended

Wikipedia background research is the process of digging into how information on Wikipedia was created, sourced, and debated before it appeared on the page. Also known as Wikipedia source tracing, it's more than checking citations: it means following the trail of edits, talk page arguments, and policy decisions that shaped what you see. Most people think of Wikipedia as a static database, but it's really a living conversation among thousands of volunteers, each bringing their own knowledge, biases, and rules. The article you read today might have been rewritten ten times because of a dispute over a single source, a bot fixing a broken link, or a volunteer spending hours tracking down a local newspaper article from 2007.

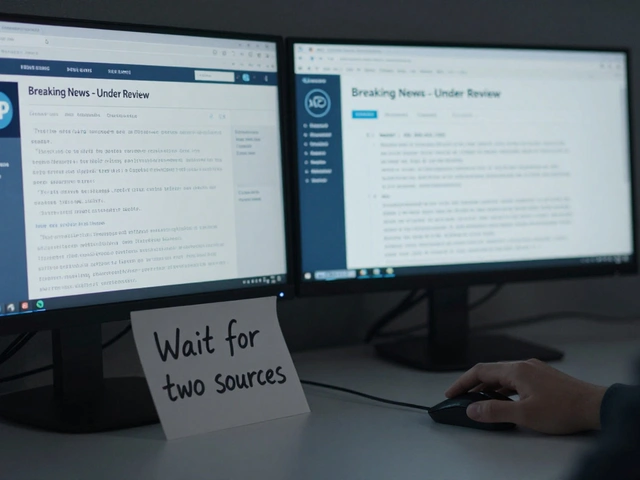

This kind of research connects directly to Wikipedia sources: the published materials editors use to verify claims, ranging from peer-reviewed journals to regional news outlets. Not all sources are equal. Secondary sources such as academic reviews or major newspapers are preferred because they analyze events rather than just report them. But in places without strong media coverage, such as parts of rural Africa or small island nations, editors struggle to find reliable sources at all. That's where Wikipedia policies become critical. These are the community-written rules that govern what gets included, how disputes are settled, and who gets to decide. Policies like neutral point of view and notability aren't just bureaucracy; they're the guardrails that keep misinformation from taking over. Those policies are tested when paid editors push for favorable coverage, or when bots revert edits faster than humans can explain them. In those moments, background research becomes essential for understanding why something changed or disappeared.

Behind every Wikipedia article is a story of collaboration, conflict, and sometimes quiet persistence. You'll find editors who spend years refining a single page, volunteers who monitor talk pages for signs of bias, and communities using Wikimedia grants to build knowledge in languages no one else is documenting. Some of these efforts are public, like WikiProject COVID-19, which helped make Wikipedia one of the most widely consulted pandemic resources. Others happen out of view: a single editor tracking down a citation from a defunct blog, or a bot fixing 500 broken links overnight. Wikipedia's reliability depends on source quality, editorial oversight, and community consensus. It isn't guaranteed. It's earned, one edit at a time.

What you’ll find below isn’t a list of how-to guides. It’s a collection of real stories about how Wikipedia’s knowledge gets made, challenged, and protected. From sockpuppet investigations to student editors improving articles for credit, from African language projects to AI literacy campaigns—you’ll see the human work behind the screen. This isn’t about memorizing rules. It’s about understanding the system so you can use it better, question it wisely, and maybe even help fix it.

Wikipedia as Background Material: The Journalist's Reference Guide

Wikipedia isn't a citable source, but for journalists it's one of the most powerful research tools available. Learn how to use it to find leads, verify facts, and uncover stories without ever quoting it.