Online Encyclopedias: How Wikipedia Stays Trusted While AI Rises

Online encyclopedias are digital reference platforms that collect and organize knowledge for public access. Also known as digital reference works, they’ve evolved from static CD-ROMs into live, constantly updated systems powered by people or by algorithms. Among them, Wikipedia, a free, collaboratively edited encyclopedia run by volunteers and supported by the Wikimedia Foundation, stands out. It’s not the fastest, and it’s not the fanciest, but surveys show people still trust it more than AI-generated encyclopedias for accurate, verifiable facts. Why? Because every edit leaves a trace. Every claim is expected to cite a source. And every change can be questioned, reviewed, or reverted by someone who actually read the material.

The Wikimedia Foundation, the nonprofit that supports Wikipedia and its sister projects, doesn’t run ads or sell data to advertisers. Instead, it fights for open knowledge: pushing back against copyright takedowns that erase history, demanding transparency from AI companies that scrape Wikipedia without credit, and training editors to spot bias. Meanwhile, AI encyclopedias, automated knowledge systems that generate answers using large language models, look slick. They answer fast. But their citations? Often fake. Their sources? Sometimes made up. And their version of "consensus"? Just whatever the algorithm learned from the most popular data, not the most accurate.

Behind the scenes, Wikipedia’s strength comes from its rules, which are not laws but living practices. Reliable sources are the backbone. Due weight keeps each viewpoint in proportion to its prominence in those sources, so fringe claims are not overstated and significant minority views are not left out. The watchlist helps editors catch vandalism before it spreads. And projects like Wikidata store facts centrally for 300+ language editions, so a value corrected once can show up in Spanish and English articles alike wherever they draw on it. This isn’t magic. It’s messy, human work. Thousands of volunteers spend hours every day checking citations, fixing grammar, and arguing over wording, all because they believe knowledge should be free, accurate, and open to all.

Some online encyclopedias chase clicks. Wikipedia chases truth. That’s why you’ll find stories here about how the Signpost picks its news, how Indigenous voices are being added back into articles, and how copy editors cleared over 12,000 articles in a single volunteer drive. You’ll also see how AI is creeping in—not as a helper, but as a threat to the integrity of what we call fact. This collection doesn’t just report on Wikipedia. It shows you how it works, why it matters, and who keeps it alive when no one’s watching.

How Wikipedia Handles Pseudoscience vs. Mainstream Science

Wikipedia doesn't declare what's true; it reports what reliable sources say. Learn how it distinguishes mainstream science from pseudoscience using citations, consensus, and proportional representation.

Mass Deletions and G13 Expired Drafts on Wikipedia: Cleanup Tips

Learn how to prevent your Wikipedia drafts from being deleted under G13 policy. Simple steps to save, revive, and submit your work before it disappears forever.

Infobox and Template Standards for High-Quality Wikipedia Articles

Infoboxes and templates are essential for high-quality Wikipedia articles, ensuring consistency, accuracy, and machine-readability. Learn how to use them correctly to improve article quality and avoid common mistakes.

Environmental Journalism: How Wikipedia Is Driving Sustainability Through Green Initiatives

Wikipedia is quietly shaping environmental journalism by providing accurate, sourced, and globally accessible information on climate change, pollution, and sustainability, backed by green infrastructure and community-driven editing.

Grokipedia and AI-Generated Encyclopedia Content: The Challenge to Collaborative Knowledge

Grokipedia is an AI-generated encyclopedia that produces content at lightning speed, but without human oversight. While it's fast and polished, it lacks accountability, transparency, and the ability to correct bias. Understanding how it differs from collaborative platforms like Wikipedia is critical for using AI knowledge responsibly.

How to Build Wikidata Bots for Updating Wikipedia Infoboxes

Learn how to build automated bots that update Wikipedia infoboxes using live data from Wikidata. Save time, reduce errors, and keep encyclopedia facts accurate with Python and pywikibot.

How to Build a New Wikipedia: Incubator Projects and Launch Milestones

Learn how to launch a new multilingual Wikipedia using the Wikimedia Incubator. Discover the five key milestones, common pitfalls, and real examples of successful language projects that went from zero to live.

Breaking News Sourcing Standards on Wikipedia Articles

Wikipedia doesn't break news; it verifies it. Learn how strict sourcing rules ensure accuracy during breaking events, why unverified reports are rejected, and what counts as a reliable source.

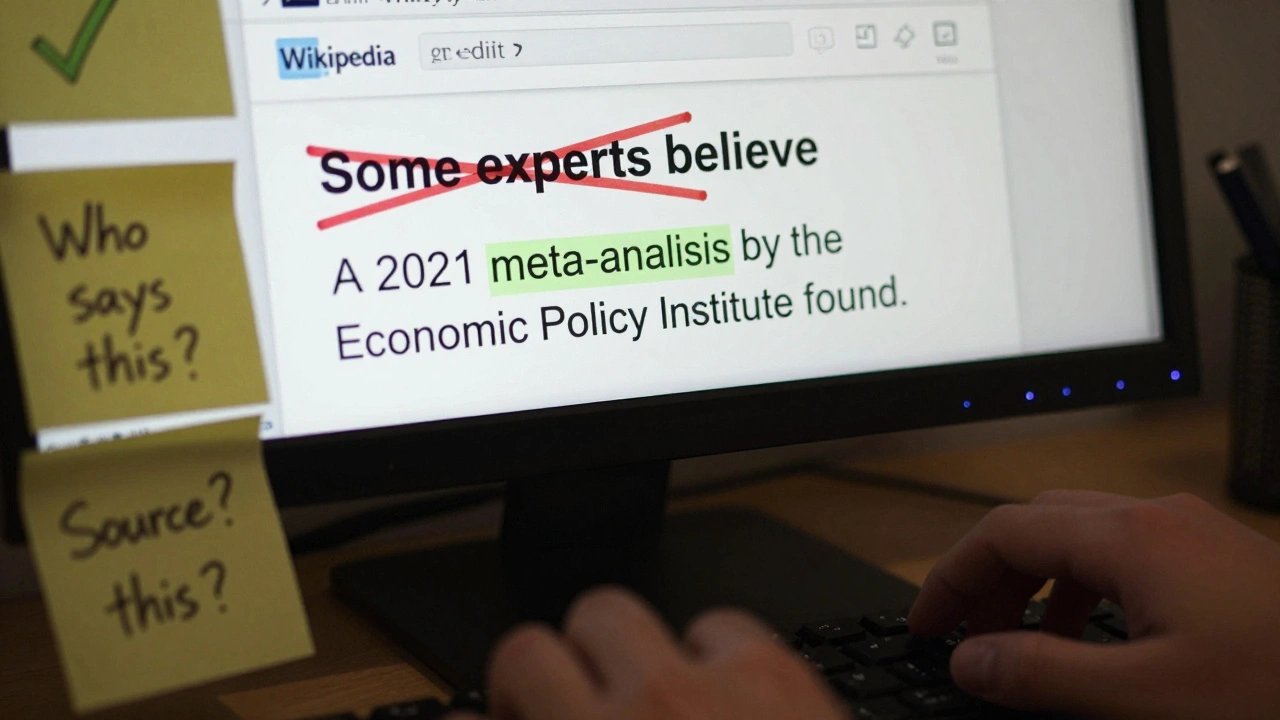

How to Avoid Weasel Words and Vague Language on Wikipedia

Learn how to spot and remove weasel words and vague language on Wikipedia to improve article accuracy, meet editorial standards, and build trust with readers using clear, sourced statements.

Using Wikidata to Standardize Sources on Wikipedia

Wikidata helps standardize citations on Wikipedia by storing source details in a central database, making citations consistent, verifiable, and automatically updatable across all articles.

Why WikiProject Inactive Projects Fade: The Real Reasons Collaborations Die on Wikipedia

Why do WikiProjects on Wikipedia fade away? It's not lack of interest; it's poor support systems, burnout, and no clear path for new editors. Learn what keeps a few thriving and how you can help revive others.

How to Write Neutral Lead Sections on Contentious Wikipedia Articles

Learn how to write neutral lead sections for contentious Wikipedia articles using verified facts, proportionality, and clear sourcing, without taking sides or using loaded language.