Community Guidelines: How Wikipedia’s Rules Keep the Encyclopedia Fair and Reliable

When you read a Wikipedia article, you’re seeing the result of millions of small decisions made by volunteers following a set of shared rules. These community guidelines, some written down and some unwritten, govern how edits are made, how disputes are settled, and how trust is maintained on Wikipedia. They aren’t laws passed by a government but agreements forged by editors over two decades of trial and error. They keep the site from becoming a free-for-all, and they’re why an anonymous volunteer’s edit is held to the same standard as a scholar’s. Without them, Wikipedia wouldn’t work. Anyone can edit, but not everyone can edit however they want. That’s the balance.

One of the most important rules is the conflict of interest policy, which requires editors to disclose personal ties to the topics they edit, such as working for a company or being related to the subject of an article. If you work for Apple, you can’t quietly rewrite Apple’s Wikipedia page to make it look better; you have to disclose the connection and let other editors review your changes. This rule keeps corporate PR and personal agendas out of the encyclopedia. It’s not about stopping people from helping. It’s about making sure help doesn’t come with hidden strings.

The same goes for moderation, the process by which editors review and revert harmful edits and, when necessary, block the accounts behind them, using tools like diff views, bots, and talk page discussions. Moderation isn’t censorship; it’s cleanup. Bots catch spam, humans spot bias, and talk pages turn arguments into agreements. The system works because it’s transparent: every edit is logged in the page history, every change can be questioned, and every user is accountable for what they add.

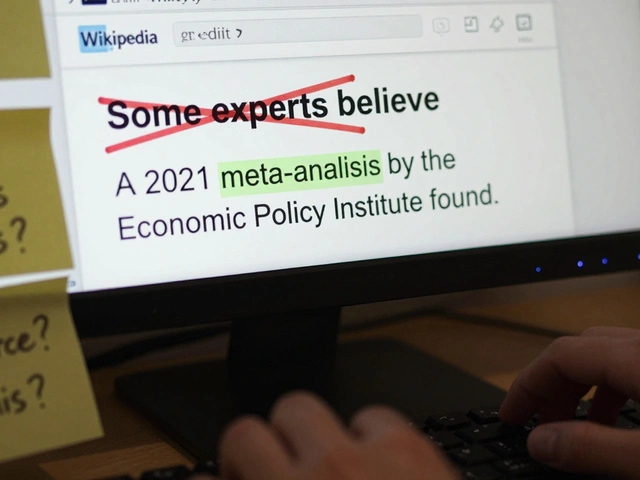

These guidelines also shape how articles are written. Wikipedia’s core content policies (neutral point of view, verifiability, and no original research) apply to every editor, and together they mean that every claim must be verifiable against a published source. No guessing and no personal opinions: just facts backed by reliable books, journals, or official reports. That’s why Wikipedia articles feel different from blogs or social media. They’re built to be checked, not just read. And when people disagree? The guidelines give them a path forward: talk it out, cite better sources, or ask uninvolved editors for help. This isn’t perfect, but it’s the best system we have for building knowledge together.

What you’ll find in the posts below are real stories of how these rules play out in practice—from how edit-a-thons train new editors to follow them, to how legal threats test their limits, to how tools like TemplateWizard help beginners avoid breaking them by accident. These aren’t abstract policies. They’re living practices, used every day by people who care enough to fix typos, challenge bias, and defend the encyclopedia from spam, lies, and pressure. You don’t need to be an expert to follow them. You just need to care about getting it right.

How Wikipedia Policies Are Developed and Approved

Wikipedia policies are created and updated by volunteers through open discussion, not top-down decisions. Learn how consensus, transparency, and community experience shape the rules behind the world's largest encyclopedia.