Fact-Checking AI: How AI Tools Are Changing Wikipedia's Accuracy Efforts

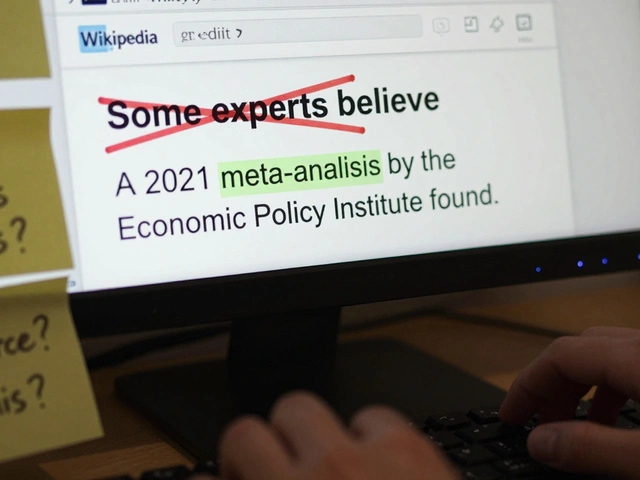

Fact-checking AI, meaning automated systems that verify claims using data, logic, and pattern recognition (also called automated verification tools), isn’t replacing editors. It’s helping them sort through millions of edits faster. But here’s the catch: AI doesn’t understand context. It can spot a copied sentence or a missing citation, but it can’t tell whether a source is biased, outdated, or culturally irrelevant. That’s where human editors still win.

Wikipedia already uses bots (automated scripts that handle repetitive maintenance tasks) to catch vandalism, fix broken links, and revert spam. Fact-checking AI, a newer layer of automation focused on verifying whether claims are true, is stepping in for more complex jobs. It scans for claims that lack references, flags potential plagiarism, and even detects subtle tone shifts that suggest bias. Still, these tools rely on training data built mostly from English-language sources, which makes them less reliable for topics tied to non-Western regions or local news. That’s why editorial control, the human ability to judge nuance, context, and intent, remains non-negotiable. A bot might miss that a quote was taken out of context from a local newspaper that closed last year. Only a human who knows the region will catch that.
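To make the "claims that lack references" check concrete, here is a minimal Python sketch. It is a hypothetical heuristic, not the code of any actual Wikipedia bot or tool. It assumes the input is a paragraph of wikitext in which inline citations appear as `<ref>...</ref>` or self-closing `<ref name="..." />` tags; the function name `flag_unreferenced` is invented for illustration.

```python
import re

# Inline citations in wikitext: <ref>...</ref> or a self-closing <ref name="x" />.
# \b keeps the pattern from matching other tags such as <references />.
REF_PATTERN = re.compile(r"<ref\b[^>]*/>|<ref\b[^>]*>.*?</ref>", re.DOTALL)

# Placeholder character that stands in for each citation before splitting.
MARKER = "\x00"

# Break after sentence-ending punctuation or a citation marker, consuming the whitespace.
SENTENCE_BREAK = re.compile(r"(?<=[.!?\x00])\s+")


def flag_unreferenced(paragraph: str) -> list[str]:
    """Return the sentences in a wikitext paragraph that carry no inline citation.

    Hypothetical heuristic for illustration only.
    """
    # Collapse every citation to one marker so periods inside a citation
    # (e.g. "p. 12.") don't split the surrounding sentence.
    marked = REF_PATTERN.sub(MARKER, paragraph)
    sentences = SENTENCE_BREAK.split(marked)
    # A sentence with no marker had no <ref> attached to it.
    return [s.strip() for s in sentences if s.strip() and MARKER not in s]


text = (
    "Paris is the capital of France.<ref>Atlas, p. 12.</ref> "
    "It has 20 arrondissements. "
    'The Louvre opened in 1793.<ref name="louvre" />'
)
print(flag_unreferenced(text))  # ['It has 20 arrondissements.']
```

Real reference checks are harder than this: a single citation at the end of a paragraph can cover several sentences, and abbreviations like "U.S." will trip a naive sentence splitter. Gaps like these are one reason human review stays in the loop.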

And then there’s AI bias: automated systems reinforcing existing inequalities because their training data reflects historical imbalances. If most of the verified facts on Wikipedia come from U.S. or European sources, AI will treat those as the default standard. That’s why recent efforts focus on giving editors more control: letting them tweak AI suggestions, override false positives, and train models on more diverse datasets. The goal isn’t to automate truth. It’s to make truth easier to find.
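Giving editors the final say over AI suggestions can be sketched in a few lines of Python. In this hypothetical example, each AI flag carries a model confidence score, an adjustable threshold stands in for "tweaking" suggestions, and an editor's decision always beats the model. The `Flag` class, the `review_queue` function, the 0.8 default threshold, and the shape of the `overrides` dictionary are all assumptions for illustration, not part of any real Wikipedia tool.

```python
from dataclasses import dataclass


@dataclass
class Flag:
    """A hypothetical AI-generated flag on a single edit."""
    edit_id: int
    reason: str   # e.g. "unsourced claim", "possible copyvio"
    score: float  # assumed model confidence between 0.0 and 1.0


def review_queue(flags, overrides, threshold=0.8):
    """Return the flags that should stay in front of editors.

    overrides maps edit_id -> True (an editor confirmed the problem)
    or False (an editor marked the flag as a false positive).
    A human decision always takes precedence over the model's score.
    """
    kept = []
    for flag in flags:
        decision = overrides.get(flag.edit_id)
        if decision is False:
            continue  # editor overrode a false positive
        if decision is True or flag.score >= threshold:
            kept.append(flag)
    return kept


flags = [
    Flag(1, "unsourced claim", 0.95),
    Flag(2, "biased tone", 0.90),
    Flag(3, "possible copyvio", 0.50),
]
# An editor rejects flag 2 and confirms flag 3 despite its low score.
queue = review_queue(flags, overrides={2: False, 3: True})
print([f.edit_id for f in queue])  # [1, 3]
```

Lowering `threshold` surfaces more borderline flags for human review; raising it means the model's suggestions only appear when it is very confident, leaving more of the judgment to editors.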

The articles in this collection aren’t hype pieces about AI taking over Wikipedia. They’re real stories from the front lines: how bots handle spam, how editors use AI tools to catch copyright violations (copyvios), how community feedback is shaping smarter verification systems, and why the most reliable edits still come from people who care more about accuracy than speed. This isn’t the future of Wikipedia. It’s the present, and it’s messy, imperfect, and deeply human.

How Wikipedia’s Sourcing Standards Fix AI Misinformation

AI often generates false information because it lacks reliable sourcing. Wikipedia’s strict citation standards offer a proven model for fixing this: require verifiable sources, not just confident-sounding guesses.