Media Bias on Wikipedia: How Accuracy and Neutrality Are Maintained

When you read a Wikipedia article, you’re not just getting facts. You’re seeing the result of a constant battle against media bias: the tendency of news outlets to frame stories in ways that favor certain perspectives, often unintentionally. Also known as journalistic bias, it’s one of the biggest threats to Wikipedia’s credibility and one of its most actively managed problems. Unlike search engines or social feeds, Wikipedia doesn’t rank content by clicks or outrage. It demands proof: any claim that is challenged or likely to be challenged must be backed by a reliable, independent, published source. That’s why editors don’t just copy headlines. They dig into archives, compare multiple outlets, and flag anything that smells like spin.

That’s where reliable sources come in: publications with editorial standards, fact-checking teams, and a track record of accountability. They are the backbone of every Wikipedia article, and Wikipedia generally prefers secondary sources, which analyze and interpret events, over primary sources such as press releases or first-hand accounts. A newspaper like The New York Times or a peer-reviewed journal gets more weight than a blog or a press release. And if a story appears in only one outlet? Editors treat it with caution until other reliable outlets corroborate it. Many also use techniques like the SIFT method (Stop, Investigate the source, Find better coverage, Trace claims to their original context) to follow a claim back to where it started. Did the original source say what the media claimed, or was it twisted? This isn’t just theory. It’s daily work done by thousands of volunteers who treat Wikipedia like a library, not a forum.

And then there’s Wikipedia neutrality, formally the neutral point of view (NPOV) policy: articles must represent all significant viewpoints published in reliable sources fairly and proportionately, without favoring any side. It’s not about being boring; it’s about being fair. If a political figure is controversial, both their supporters’ and their critics’ views get space, weighted by how much coverage each has received in reliable sources. No significant view gets erased, and none gets elevated beyond the weight the sources give it. That’s why Wikipedia articles on heated topics often feel oddly balanced. It’s not because the editors are wishy-washy. It’s because they’re following a strict, transparent process.

Behind every neutral article is a history of edits, talk page debates, and sometimes fierce disagreements. Editors don’t just fix typos; they argue over wording, source selection, and tone. And when media outlets themselves are the subject, as when a news organization is accused of bias, Wikipedia has its own set of rules to avoid becoming part of the problem. That’s why you’ll rarely see loaded words like "controversial" or "alleged" in Wikipedia’s own voice; when they do appear, they are usually attributed to a reliable source. The goal isn’t to judge the media. It’s to report what the media says, and what others say about what the media says.

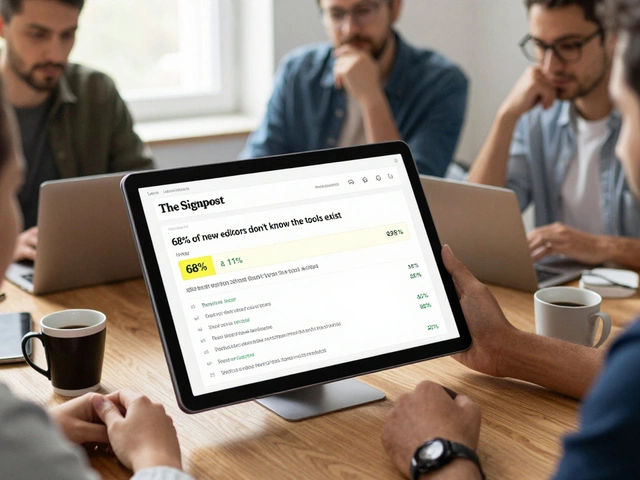

What you’ll find below is a collection of real, practical guides from editors who live this work every day. From how to spot a weak citation to how to use Wikipedia’s own tools to review edits, these posts show you exactly how the system works—not in theory, but in practice. Whether you’re a student, a researcher, or just someone tired of misinformation, you’ll see how Wikipedia stays honest when so much of the internet doesn’t.

Media Criticism of Wikipedia: Common Patterns and How Wikipedia Responds

Media often criticizes Wikipedia for bias and inaccuracies, but its open model allows rapid correction. This article explores common criticisms, how Wikipedia responds, and why it remains the most transparent reference tool online.

How Technology Media Covers Wikipedia: What Gets Highlighted and What’s Ignored

Technology media often portrays Wikipedia as unreliable and chaotic, but real data shows it's accurate, widely used, and quietly powerful. This article breaks down what gets covered - and what's ignored.