Wikipedia content standards: How the world's largest encyclopedia ensures accuracy and trust

When you read a Wikipedia article, you're seeing the result of Wikipedia content standards, a set of enforced rules that require all information to be verifiable, neutral, and properly sourced. Also known as Wikipedia policies, these standards are what separate Wikipedia from random websites and make it a trusted starting point for millions. Unlike search engines, which rank pages rather than vet them, Wikipedia demands proof. Every claim must be attributable to a reliable source. Every opinion must be balanced. Every edit must follow clear guidelines, or it risks being reverted.

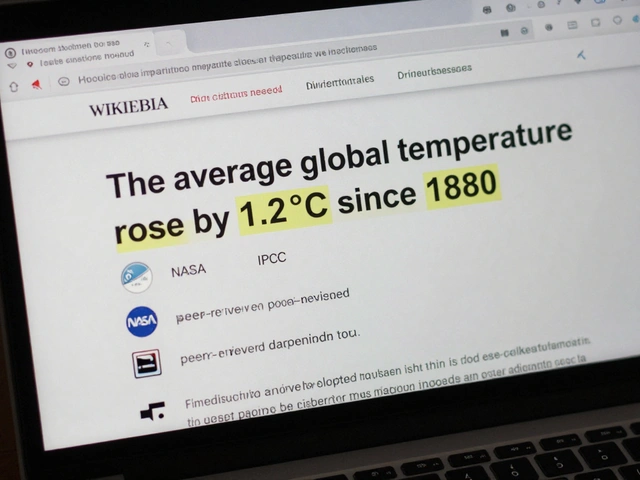

These standards don’t exist in a vacuum. They’re shaped by real people (editors, librarians, researchers) who fight to keep bias out and facts in. Take the conflict of interest guideline, which asks editors to disclose personal ties to the topics they edit. Also known as COI, it discourages companies, politicians, and even well-meaning family members from rewriting articles to serve their own agendas. Then there’s Wikipedia sourcing, the requirement that all information be backed by published, reliable sources. Formalized in the verifiability policy, it’s why Wikipedia rejects unsourced AI-generated text, personal blog posts, and social media rumors, even if they sound convincing. And behind all of it is Wikipedia neutrality, the principle that articles must present all significant viewpoints fairly, without favoring any side. Also known as neutral point of view, it’s the reason articles on heated topics like climate change or geopolitics don’t become propaganda battles, at least not officially. These three pillars (sourcing, conflict disclosure, and neutrality) are the backbone of every article you trust.

These rules aren’t just theory. They’re enforced daily by bots that flag copy-pasted content, volunteers who patrol talk pages for bias, and editors who debate policy changes at the Village Pump. When a journalist uses Wikipedia to find leads, they’re not citing it; they’re using its standards as a filter. When a student writes a paper, they’re practicing the source-checking habits that Wikipedia models. And when AI tries to fake facts, Wikipedia’s sourcing rules are one of the few defenses left.

What you’ll find below is a collection of real stories about how these standards work — and sometimes, how they break. From how editors handle geopolitical edit wars, to how TemplateWizard helps beginners avoid mistakes, to how bots catch spam before it even loads — this isn’t just about rules. It’s about the people and systems that make sure Wikipedia stays useful, even when the world tries to twist it.

WikiProject Assessment Guidelines: How to Align Your Wikipedia Edits with Official Quality Standards

Learn how Wikipedia's WikiProject assessment guidelines work to improve article quality, meet community standards, and move your edits from stub to featured status.