Wikipedia detection systems: Tools that keep the encyclopedia honest

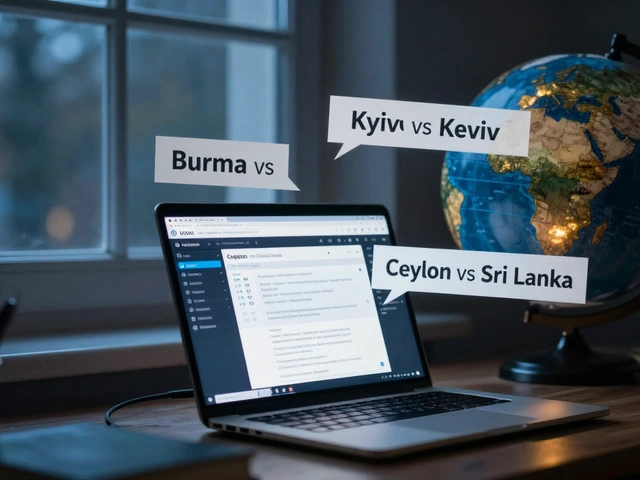

When you read a Wikipedia article, you’re seeing the result of dozens of Wikipedia detection systems: automated and human-driven tools that identify and block false, stolen, or manipulated content. Also known as content integrity tools, these systems run quietly in the background, scanning edits, flagging copied text, and catching fake accounts, so the encyclopedia stays trustworthy.

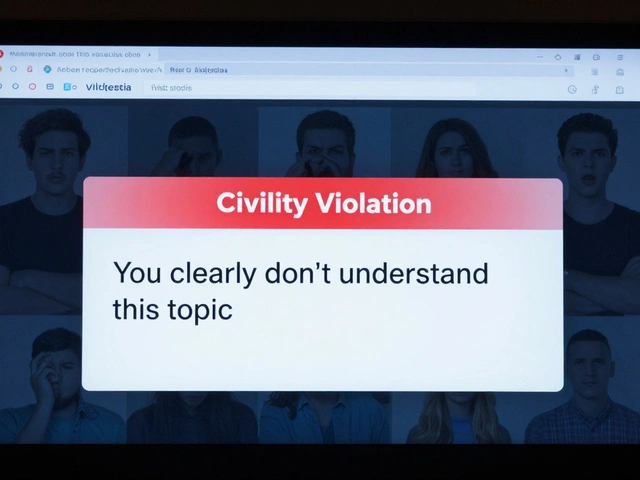

These systems don’t work alone. They rely on copyvio (copyright violation) detection, which compares new edits against millions of published sources to find uncredited text, and on sockpuppetry detection, which tracks patterns in editing behavior to uncover hidden accounts controlled by the same person. Bots, for example, revert vandalism within seconds, fix broken links, and flag articles with missing citations. Human editors then review the flagged content and keep up with The Signpost, Wikipedia’s community-run newsletter that highlights policy violations and emerging threats, to stay ahead of coordinated manipulation. These systems evolved because Wikipedia’s open model makes it vulnerable: anyone can edit, and bad actors know it. So the platform built layers of defense: automated filters, community oversight, and clear policies that require transparency.
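To make the copyvio idea concrete, here is a minimal Python sketch of one common way to compare an edit against a source: counting shared runs of consecutive words (often called shingles). This is an illustration under stated assumptions, not how Wikipedia’s own tools are implemented. The function names, the five-word window, and the 0.5 threshold are all hypothetical choices made for the example.

```python
import re


def shingles(text: str, n: int = 5) -> set[tuple[str, ...]]:
    """Return the set of n-word shingles (overlapping word windows) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(edit_text: str, source_text: str, n: int = 5) -> float:
    """Fraction of the edit's shingles that also appear in the source."""
    edit_shingles = shingles(edit_text, n)
    if not edit_shingles:
        # Edits shorter than n words produce no shingles to compare.
        return 0.0
    shared = edit_shingles & shingles(source_text, n)
    return len(shared) / len(edit_shingles)


def looks_copied(edit_text: str, source_text: str, threshold: float = 0.5) -> bool:
    """Flag the edit for human review when overlap meets the assumed threshold."""
    return overlap_ratio(edit_text, source_text) >= threshold
```

A flag from a check like this would only be a starting point. A human reviewer still has to decide whether the match is a properly attributed quotation, public-domain text, or an actual violation.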

One of the biggest challenges today is AI misinformation: false information generated by large language models that sounds convincing but lacks real sources. Wikipedia’s answer? Strict sourcing rules. If an edit can’t be backed by a published, reliable source, it gets removed, no matter how well-written it is. That’s why AI-generated content often fails on Wikipedia: it often can’t point to a real newspaper, book, or peer-reviewed study that supports the claim. The detection systems don’t target AI itself; they target the absence of verifiable evidence. This is why Wikipedia remains one of the few major platforms where truth still has to earn its place.
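The "no source, no edit" rule can be sketched as a simple first-pass check on the text an edit adds. The Python below assumes citations appear as wikitext `<ref>` tags or `{{cite ...}}` templates. The function names and the 20-word cutoff are hypothetical, and the check says nothing about whether a cited source is reliable or even real, which is the part that needs human judgment.

```python
import re

# Assumed patterns: inline citations written as <ref ...> tags or
# {{cite ...}} templates. This checks for citation markup only,
# not for the quality or existence of the source itself.
CITATION_PATTERN = re.compile(r"<ref[\s>/]|\{\{\s*cite\b", re.IGNORECASE)


def has_citation(added_wikitext: str) -> bool:
    """True if the added text contains any citation markup."""
    return CITATION_PATTERN.search(added_wikitext) is not None


def needs_review(added_wikitext: str, min_words: int = 20) -> bool:
    """Flag substantial additions that carry no citation markup."""
    word_count = len(added_wikitext.split())
    return word_count >= min_words and not has_citation(added_wikitext)
```

The design choice here matches the paragraph above: the check never asks who or what wrote the text, only whether the text arrives with evidence attached.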

Behind every flagged edit, every blocked account, and every rewritten paragraph is a mix of code and human judgment. You won’t see the systems at work—but you’ll feel their effect every time you find accurate, well-sourced information. The collection below shows how these tools operate in practice: from how bots fix typos at scale, to how editors use specialized tools to catch copied content, to how the community responds when someone tries to game the system. These aren’t theoretical debates—they’re real, daily battles to protect what millions rely on.

How Wikipedia Stops Spam: Inside Its Detection and Filtering Systems

Wikipedia blocks a constant stream of spam edits using automated bots, pattern detection, and volunteer editors. Learn how its layered system keeps the encyclopedia clean and reliable.