Wikipedia politics: How governance, policies, and community debates shape the world's largest encyclopedia

When you think of Wikipedia politics, think of the internal decision-making processes, policy debates, and community power structures that determine what content survives on Wikipedia. Often called Wikipedia governance, this system isn't about elections or politicians. It's about thousands of volunteers arguing over neutrality, sourcing, and who gets to edit what. This isn't bureaucracy; it's real-time knowledge democracy, where a single edit can spark a month-long debate and a policy change can reshape how millions of readers access information.

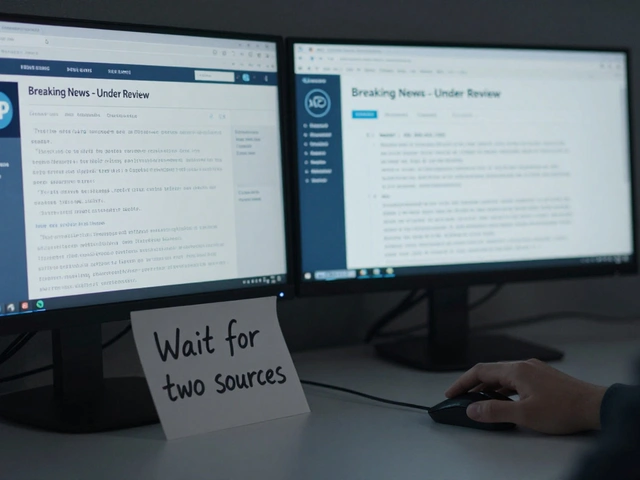

Behind every article you read, there's a hidden layer of rules and conflicts. Take conflict of interest (COI), a core Wikipedia rule that asks editors to disclose personal ties to the topics they edit. It exists because paid editors, companies, and activists have tried to manipulate content, and the community learned the hard way that trust breaks down fast without transparency. Then there's community consensus, the informal but powerful process through which editors debate and agree on policies on talk pages, in Village Pump threads, and in Signpost discussions. Consensus building is how Wikipedia makes decisions without bosses: sometimes slowly, sometimes messily, but always publicly. These aren't theoretical ideas. They're the reason some articles get deleted, others get locked, and AI-generated text gets flagged.

Wikipedia politics isn't just about rules; it's about power. Who gets to be heard? Why do some editors get blocked for minor mistakes while others push controversial edits for years? Why do local news sources vanish from articles about developing countries while Western media dominates? These aren't accidents. They're outcomes of policy debates that started years ago and still shape what you see today. The fight over notability, sourcing, and neutrality doesn't happen behind closed doors. It plays out on public talk pages, where anyone can join, argue, or fix a mistake.

You'll find posts here that show how these systems work in practice: how sockpuppet accounts get caught, how editors use Village Pump proposals to change rules, how bots help enforce policies, and how safety tools protect volunteers who edit dangerous topics. You'll see how librarians and educators bring structure to chaos, how AI misinformation is countered with citation standards, and how small UI changes are tested without breaking trust. This isn't a news roundup. It's a map of the living system that keeps Wikipedia honest. What you read next isn't just about editing; it's about who controls knowledge and how you can help shape it.

Geopolitical Edit Wars on Wikipedia: High-Profile Cases

Wikipedia's open-editing model is being exploited in geopolitical edit wars, where nations and groups manipulate articles on Ukraine, Taiwan, Partition, and Iraq to control global narratives. These battles shape how history is remembered.