Community Governance on Wikipedia: How Editors Decide What Stays and What Goes

When you think of Wikipedia, you might picture a lone editor fixing a typo. But behind every article is a system of community governance: a decentralized process in which volunteers create, debate, and enforce the rules that keep Wikipedia accurate and fair. Wikipedia's governance isn't run by a corporation or a government. It's run by thousands of unpaid editors who debate and settle policies, investigate abuse, and decide through discussion what counts as reliable knowledge. This isn't bureaucracy. It's real-time self-government, with arguments played out on talk pages, not in courtrooms.

At the heart of this system are tools and policies built by editors, not engineers. The conflict of interest policy, a rule requiring editors to disclose personal ties to the topics they edit, keeps paid promoters and PR teams from rewriting history. Sockpuppetry, the use of fake accounts to manipulate discussions, is tracked down by volunteer investigators who analyze editing patterns like detectives. And when disputes get heated, as they do over articles about Ukraine, Taiwan, or religious figures, experienced Wikipedia editors (the core group of long-term contributors who shape content standards) step in to mediate and, when that fails, escalate to formal dispute resolution, up to the Arbitration Committee. These aren't abstract ideas. They're daily practices that keep Wikipedia from collapsing under its own scale.

What makes this work isn't technology; it's trust. Editors don't follow rules because they're forced to. They follow them because they believe in the mission: a free, accurate, and open encyclopedia. That's why the tools that support community governance are constantly being tested. A/B tests on the interface? They're designed to reduce friction without undermining fairness. Bots that revert vandalism? They're trained by humans who've seen the same spam patterns for years. Even The Signpost and the article assessment guidelines are written by editors who've been burned by biased edits and want to make sure it doesn't happen again.

There's no headquarters dictating what articles say, and no CEO or board signing off on edits. Just people, from librarians and students to journalists and retirees, who show up every day to argue over citations, block trolls, and protect the integrity of knowledge. And if you've ever wondered how Wikipedia stays reliable despite being open to anyone, the answer isn't algorithms. It's community governance.

Below, you’ll find real stories from inside this system: how policies are made, how conflicts are resolved, and how everyday editors keep the encyclopedia from falling apart.

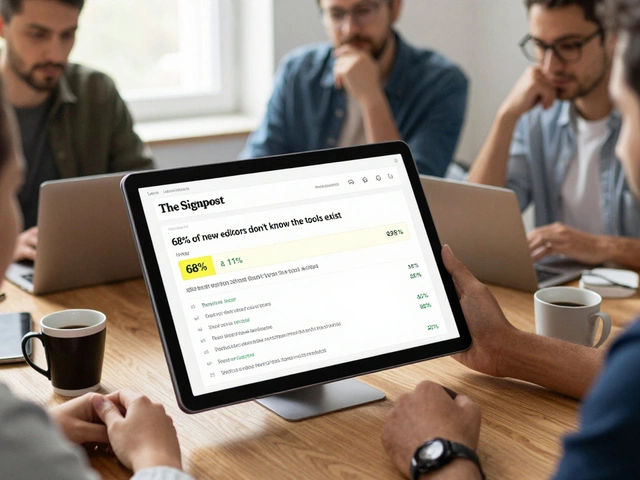

Special Issues of The Signpost: Elections, Wikimania, and More

The Signpost's special issues cover Wikipedia's elections, Wikimania, and major community decisions, offering an inside look at how the world's largest encyclopedia really works.

Living Policy Documents: How Wikipedia Adapts to New Challenges

Wikipedia's policies aren't static rules; they're living documents shaped by community debate, real-world threats, and constant adaptation. Learn how volunteers keep the encyclopedia accurate and trustworthy.

How Wikipedia Handles Vandalism, Conflicts, and Edit Wars

Wikipedia handles vandalism and edit wars through a mix of automated bots, volunteer moderators, and strict sourcing rules. Conflicts are resolved by community consensus, not votes, and persistent offenders face bans. Transparency and accountability keep the system working.

Community Governance on Wikinews: How Admins, Reviewers, and Volunteers Keep the Site Running

Wikinews runs on volunteers, not paid staff. Learn how admins, reviewers, and everyday contributors maintain accuracy, enforce policies, and keep independent journalism alive through community governance.

How to Seek Consensus on Wikipedia Village Pump Proposals

Learn how to build consensus on Wikipedia's Village Pump to get policy proposals approved. Avoid common mistakes and use proven strategies to make your ideas stick.