Archive: 2025/12 - Page 8

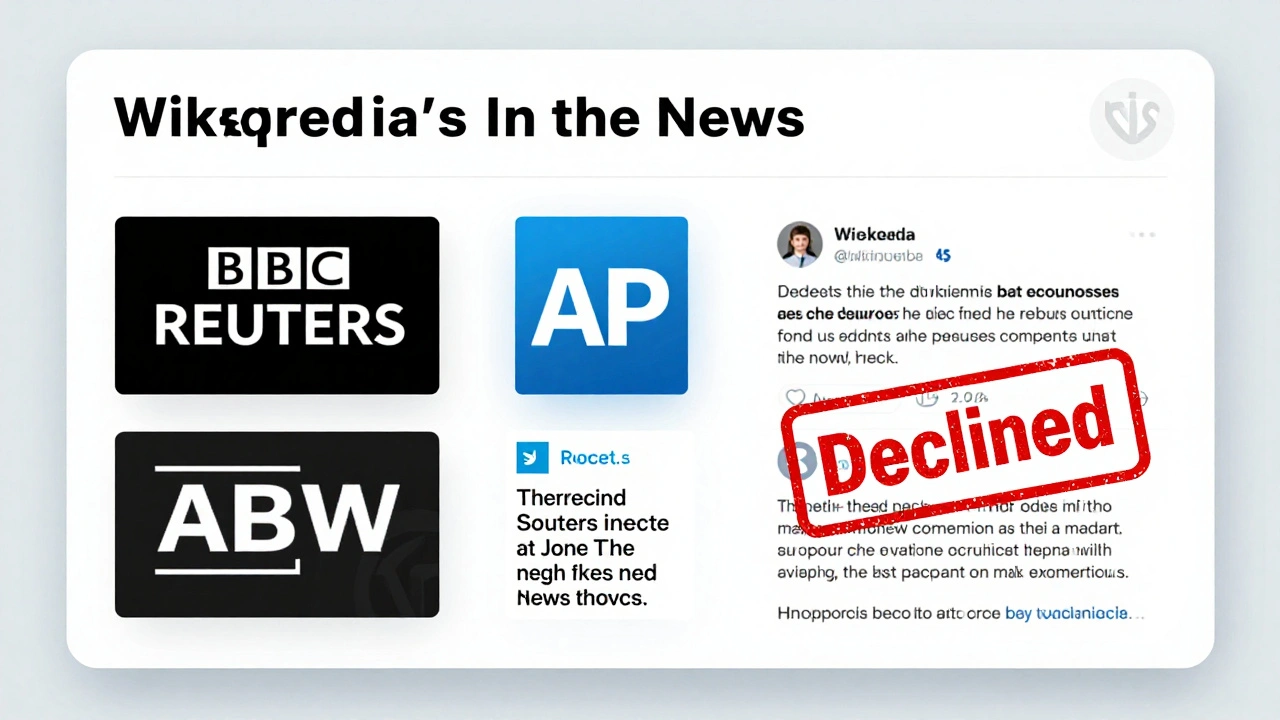

How In the News Candidates Are Nominated and Reviewed on Wikipedia

Wikipedia's In the News section doesn't follow breaking news; it documents verified events backed by multiple reliable sources. Here's how nominations work, what gets approved, and why it's trusted by millions.

Recent Wikipedia Admin Actions Against Abusive Users

Wikipedia admins have intensified efforts against abusive users in 2025, using AI tools and stricter bans to combat vandalism, misinformation, and coordinated manipulation. Learn how the platform protects its integrity and what users can do to help.

Blocking Policy Case Studies from Wikipedia Administrator Noticeboards

Real case studies from Wikipedia's administrator noticeboards show how blocks are decided, appealed, and refined. Transparency, policy, and community oversight drive moderation, not secrecy.

The Challenge of Mobile Wikipedia: Responsive Design Issues

Wikipedia's mobile site loads slowly and breaks on low-end devices because it's built on outdated desktop code. Despite 70% of users accessing it via mobile, the design hasn't been truly rethought for small screens.

Peer Review Through WikiProjects: How Wikipedia Volunteers Improve Article Quality

WikiProjects are volunteer groups on Wikipedia that improve article quality through peer review. Learn how they work, why they matter, and how you can join to help make Wikipedia more accurate and reliable.

Disambiguation Pages on Wikipedia: Hidden Traffic Hubs

Disambiguation pages on Wikipedia are quiet traffic hubs that handle millions of searches daily by clarifying ambiguous terms like 'Java' or 'Washington.' They're not articles, but they keep the encyclopedia working.

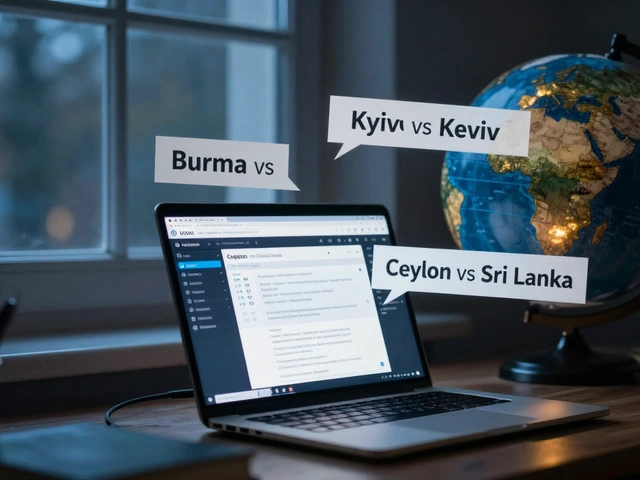

How to Reduce Cultural Bias in Wikipedia Biographies and History Articles

Wikipedia's biographies and history articles often reflect cultural bias, favoring Western, male, and elite figures. Learn how systemic gaps form, and how anyone can help make history more inclusive through editing, sourcing, and language changes.

Human-in-the-Loop Workflows: How Real Editors Keep Wikipedia Accurate Amid AI Suggestions

Human-in-the-loop workflows keep Wikipedia accurate by combining AI efficiency with human judgment. Editors review AI suggestions, ensuring neutrality, sources, and consensus guide every change.

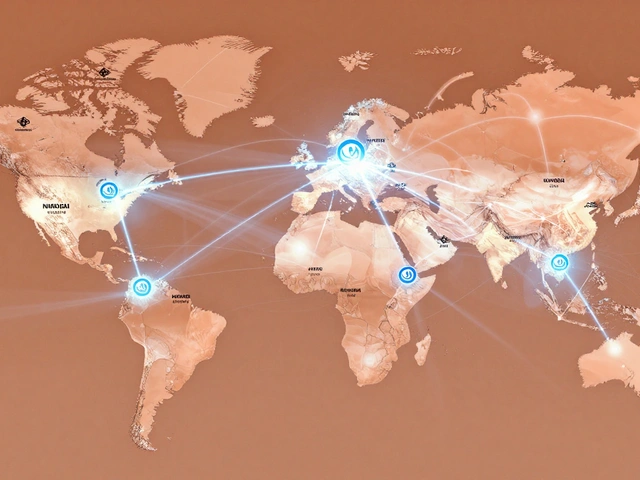

Data Journalism and Wikipedia: Visualizing Editing Patterns and Demographics

Data journalism reveals how Wikipedia's editing patterns reflect global inequalities in knowledge production, showing who edits, what gets changed, and why some voices remain silent despite the platform's open promise.

How Branding and Trust Seals Influence Encyclopedia Adoption

Branding and trust seals are critical for online encyclopedias to gain user trust and adoption. Clear identity and verified credentials make users more likely to cite and return to a platform.

Community Governance on Wikinews: How Admins, Reviewers, and Volunteers Keep the Site Running

Wikinews runs on volunteers, not paid staff. Learn how admins, reviewers, and everyday contributors maintain accuracy, enforce policies, and keep independent journalism alive through community governance.

Moderator Self-Care and Burnout Prevention on Wikipedia

Wikipedia moderators fight vandalism daily without pay or recognition, which leads to widespread burnout. Learn how to protect your mental health, set boundaries, and stay in the game longer.